Cursor AI Cost Analysis: Evaluating the Value for Developers

Meta Description: Cursor AI Cost Analysis: Evaluating the Value for Developers – A comprehensive look at Cursor AI’s pricing, features, and ROI. Discover if this AI code assistant is worth its cost for individual developers and teams, with 7 essential insights on value, alternatives, and real-world examples.

Outline

Introduction – Briefly introduce Cursor AI and the importance of evaluating its cost and value for developers.

Understanding Cursor AI – Explain what Cursor AI is and how it fits into a developer’s workflow.

- What is Cursor AI? – Define Cursor AI as an AI-powered code editor/assistant and its core functionality.

- Key Features of Cursor AI – Highlight features like code completion, AI agents, and multi-file context that provide value.

Why Cursor AI Cost Analysis Matters – Discuss why developers and teams should analyze the cost vs. benefits before investing in Cursor AI.

Cursor AI Pricing Overview – Provide an overview of Cursor AI’s pricing tiers and subscription plans.

- Hobby (Free) Plan – Describe the free tier limits (requests, trial period, etc.).

- Pro Plan – Detail the Pro plan cost and limits (e.g. $20/month for 500 “fast” requests, unlimited completions).

- Ultra Plan – Explain the Ultra plan ($200/month) with expanded usage (20× the Pro limits).

- Team & Enterprise Plans – Outline team pricing ($40/user/month) and enterprise options.

Breaking Down Cursor’s Pricing Structure – Explain how Cursor AI’s request-based pricing works and what “fast” vs “slow” requests mean.

- Fast vs. Slow Requests – Clarify the difference between fast (premium) requests and slow (rate-limited) requests.

- Normal Mode vs. Max Mode – Describe Cursor’s two modes and how Max mode uses a token-based cost (API cost + 20% for heavy tasks).

Comparing Cursor AI to Alternatives – Compare Cursor’s cost and features with other AI coding assistants.

- Cursor vs. GitHub Copilot – Note Copilot’s pricing (~$10/month) vs Cursor, and differences in capabilities.

- Cursor vs. Other Tools – Compare with tools like Tabnine or Replit’s AI, highlighting cost and feature differences.

Benefits and Value for Developers – Explore the benefits Cursor AI provides to justify its cost.

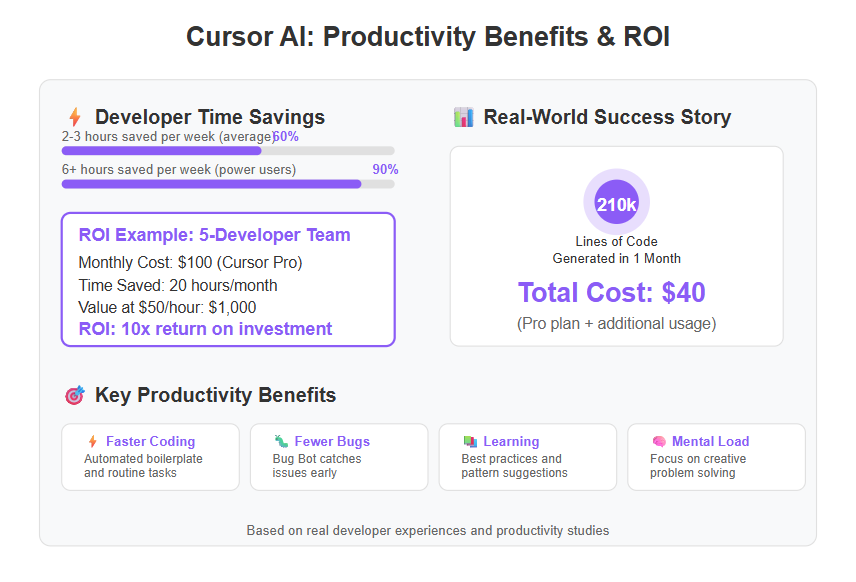

- Productivity & Time Savings – Discuss how Cursor can speed up coding (e.g. saving 2–3 hours per week on average).

- Code Quality and Efficiency – Explain how smart suggestions and debugging (Bug Bot, etc.) improve code quality and reduce errors.

Real-World ROI of Cursor AI – Provide examples or case studies illustrating Cursor’s value.

- Case Study: 210k Lines for $40 – Cite a developer who generated 210,000 lines of code in one month for only $40 using Cursor.

- Developer Experiences – Mention feedback from users about productivity gains vs. subscription cost (e.g. heavy users hitting limits).

Is Cursor AI Worth the Cost for Individuals? – Analyze whether solo developers get sufficient value from the $20/month Pro plan.

- Value for Hobbyists/Freelancers – Consider use cases for individuals and when the Pro plan pays off (or if free is enough).

- When to Upgrade to Ultra – Discuss scenarios where a single developer might need the Ultra plan’s capacity.

Is Cursor AI Worth the Cost for Teams? – Evaluate Cursor’s value for teams and organizations.

- Small Teams vs. Business Plan – Weigh the $40/user Business plan against alternatives (e.g. Copilot Business) for team budgets.

- Enterprise Considerations – Discuss larger-scale cost (e.g. 500 devs cost comparison) and enterprise features like SSO and admin controls.

Tips to Maximize Value from Cursor AI – Offer strategies to get the most out of Cursor while managing costs.

- Optimize Request Usage – Tips like using Cursor’s “slow” mode for minor tasks to conserve fast requests, or leveraging the free trial effectively.

- Integrate Cursor into Workflow – Encourage training and consistent use so developers fully utilize the tool (avoiding wasted subscriptions).

Potential Drawbacks and Considerations – Address limitations or concerns that impact Cursor’s cost-value equation.

- Handling Usage Limits – Cover the risk of hitting request limits and how Cursor handles overage (throttling or extra fees).

- Learning Curve & Tooling – Note any learning curve or integration effort that might reduce immediate value.

- Pricing Sustainability – Briefly touch on how VC-subsidized pricing keeps costs low now and the possibility of changes in the future.

Future Outlook for Cursor AI Pricing – Discuss how Cursor’s pricing strategy might evolve (market competition, VC funding effects, etc.) and what that means for long-term value.

Frequently Asked Questions (FAQs) – Answer common questions about Cursor AI’s cost, usage, and value (at least 6 Q&As).

Conclusion – Summarize the findings, reaffirm whether Cursor AI offers good value for its cost, and end on an optimistic note for developers considering it.

Introduction

Imagine having an AI pair programmer that can autocomplete code, fix bugs, and even refactor your project, all within your editor. That’s the promise of Cursor AI, an AI-powered code editor that’s gaining traction among developers. Cursor competes in the fast-moving, highly competitive AI coding assistant market alongside several major players. One frequently cited advantage is speed: Cursor’s code suggestions are reported to arrive in about 50–100 ms, compared with 200–500 ms for some competitors, which matters to developers who want a fluid editing experience. In this introduction to our Cursor AI cost analysis, we’ll outline why evaluating its value for developers is so important. However impressive a tool is, it has to deliver enough benefit to justify its price tag. This article examines Cursor AI’s pricing structure and features, compares it to alternatives, and helps you decide whether it offers value for money. We’ll combine expert insights, real-world examples, and up-to-date data to assess whether Cursor AI is worth the cost for you or your team.

Understanding Cursor AI

Before jumping into dollars and cents, it’s crucial to understand what Cursor AI actually is and how it fits into a developer’s workflow. Cursor AI is usually described as an “AI code editor”: a standalone editor built on Visual Studio Code, with AI assistance woven into the editing experience. In simple terms, it’s like having an intelligent co-pilot for coding. Cursor stands out for its speed, simplicity, and developer-centric workflows, which make it especially appealing to solo developers and small teams who want quick adoption and fast response times.

What is Cursor AI? It’s a tool that uses advanced language models (like OpenAI GPT-4 or Anthropic Claude) to assist with writing and modifying code. Unlike basic autocomplete, Cursor AI can index your entire codebase and adapt to your coding style. It understands context across multiple files and can generate whole blocks of code or even entire functions from natural language prompts. For example, you can ask Cursor to “implement a login form with validation” and it will attempt to produce the code for you. Its suggestions are useful because the underlying models are trained on vast amounts of code and because Cursor can consider the architecture of your project, not just the last few lines.

Cursor also layers workflow features on top of the editor: Background Agents that work on tasks in the background, BugBot for automated code review, Memories for persistent context, and support for MCP (Model Context Protocol) servers that connect the AI to external tools and data. Together these aim to keep developers in flow and support team collaboration. BugBot in particular reviews code automatically, helping developers find and fix issues more efficiently.

Key Features of Cursor AI: Several standout features make Cursor AI attractive to developers:

- Intelligent Code Completion: Cursor provides on-the-fly suggestions and auto-completions (like an advanced version of tab-autocomplete, dubbed “Cursor Tab”) for code as you type. It has a memory of your project context, so suggestions are often more relevant than a generic autocomplete.

- AI Agent for Complex Tasks: Cursor’s Agent mode can handle multi-step tasks autonomously. For instance, it can refactor code across multiple files, generate unit tests, or even run shell commands to help set up your environment. This agent acts like a junior developer who can execute instructions, which is a game-changer for repetitive tasks.

- Bug Detection and Fixing: The tool includes features like BugBot (available in the Pro plan) that can detect potential issues and suggest fixes. This can save debugging time and improve code quality.

- Large Context Windows: Cursor supports “maximum context windows” in higher tiers, meaning it can take into account large chunks of your code at once. This is useful in big projects where understanding the whole picture is necessary for relevant suggestions.

- Integration and Language Support: It works with popular programming languages (Python, JavaScript, C#, Java, etc.), and because Cursor is built on VS Code, it feels familiar to anyone coming from that editor; your VS Code extensions, themes, and keybindings can be imported. Keeping the AI inside the editor you already work in reduces context switching and helps you maintain focus. While it may not cover every obscure language, it focuses on those most widely used in industry, offering depth and reliable support in those domains.

- Memories for Persistent Context: Cursor’s Memories feature allows the AI to retain context across sessions, enabling it to provide more consistent and relevant suggestions over time.

In short, Cursor AI offers a blend of code intelligence and automation, enabling developers to write code more efficiently and automate tasks. Developers with experience using Cursor often report that it helps streamline their work by handling boilerplate code, providing instant answers or snippets, and even learning their coding patterns over time. With that understanding, we can now appreciate why many are excited about Cursor – and why it’s important to analyze its cost against these capabilities.

Why Cursor AI Cost Analysis Matters

New tools are exciting, but developers and engineering managers know that every tool has to earn its keep. Performing a cost analysis for Cursor AI means looking beyond the hype and asking practical questions: How much will this actually cost me or my team? What value do we get in return? This section explores why analyzing the cost of Cursor AI is a necessary step before adopting it.

Budget-Conscious Development: In software projects (whether personal or enterprise), budget constraints are real. An AI assistant might boost productivity, but if it eats up too much of your budget, it could be counterproductive. As advanced AI tools and models grow more expensive, careful cost analysis becomes even more important to avoid overspending. By weighing Cursor AI’s pricing against the expected productivity gains, developers can determine whether it’s a cost-effective choice. For example, a freelance developer considering the Pro plan at $20 per month will want to be sure that Cursor saves them at least $20 worth of time, or delivers an equivalent gain in code quality, each month. For a team considering dozens of licenses, the stakes are even higher.
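To make that break-even test concrete, here is a minimal Python sketch. The $50 hourly rate and the 8 hours saved per month (roughly 2 hours a week, the low end of the 2–3 hours this article mentions, times four weeks) are illustrative assumptions, and the helper names are hypothetical.

```python
def break_even_hours(monthly_price: float, hourly_rate: float) -> float:
    """Hours of work Cursor must save each month to pay for itself."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_price / hourly_rate


def monthly_net_value(hours_saved: float, hourly_rate: float, monthly_price: float) -> float:
    """Value of time saved minus the subscription price (ignores code-quality gains)."""
    return hours_saved * hourly_rate - monthly_price


# Illustrative assumptions: a freelancer billing $50/hour on the $20/month Pro plan
PRO_PRICE = 20
HOURLY_RATE = 50
HOURS_SAVED_PER_MONTH = 8  # e.g. 2 hours/week x 4 weeks

print(break_even_hours(PRO_PRICE, HOURLY_RATE))  # 0.4
print(monthly_net_value(HOURS_SAVED_PER_MONTH, HOURLY_RATE, PRO_PRICE))  # 380
```

At that rate, the Pro plan pays for itself once it saves about 24 minutes a month. The same arithmetic scales to a team by using the per-seat price and multiplying by the number of seats.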

Understanding True Value: The value of an AI tool lies not just in its direct features but in how it changes your workflow. A thorough cost analysis forces you to think about those workflow changes. Will Cursor AI allow developers to complete features faster? If so, projects might go to market sooner, which has its own monetary value. Studies have documented significant improvements in developer productivity with AI assistants, such as 20–30% faster feature delivery in some cases. Some individual developers have gone further, claiming productivity increases of 300–500% with Cursor; such self-reported figures are hard to verify and vary widely by task, but they show why the tool generates so much enthusiasm. Translating any of these gains into business value can justify the tool’s cost, but it requires careful analysis. We’ll later cite data on how much coding time Cursor might save and how that weighs against its subscription fee.

Avoiding Surprises: Another reason cost analysis matters is to avoid hidden costs or surprises. Some AI tools have usage-based pricing or limitations that can lead to unexpected overage fees. By scrutinizing Cursor’s pricing model (requests, modes, etc.), you can anticipate how its cost scales with heavy use. The last thing you want is to adopt a tool thinking it costs a flat $20, only to find your usage pushes you into a higher pricing tier or incurs extra charges. As we’ll see, Cursor AI’s pricing has nuances (like “fast” vs “slow” requests and an optional ultra tier) that are important to understand up front.

In summary, doing a Cursor AI cost analysis is about due diligence. It’s making sure that the excitement around AI helpers translates into real-world value that outweighs the expense. Price competition among AI coding tools also shapes both pricing strategies and the features offered, so it’s important to consider how Cursor AI compares in this evolving market. With the “why” established, let’s move into the specifics of how much Cursor AI costs and what you get at each price point.

Cursor AI Pricing Overview

Cursor AI offers a tiered pricing model to cater to everyone from solo hobbyists to large enterprise teams. Understanding these tiers is key to evaluating the cost. In this section, we’ll break down the official pricing plans – what they cost and what they include – so you can see where you might fit. The plans we’ll cover are the Hobby (Free) plan, the Pro version, the Ultra plan, and the Team/Enterprise plans.

Hobby (Free) Plan: For those who want to dip their toes in the water, Cursor AI provides a free tier called Hobby. As a free user, you get a taste of what the AI can do without opening your wallet. The Hobby plan includes a two-week trial of Pro features (so you can experience the full power of Cursor initially). After the trial, the free tier limits you to 50 requests per month (these would be “slow” requests) and about 2,000 AI completions. In practice, this is enough for light usage, such as experimenting on small projects or using Cursor sparingly for assistance here and there. It’s ideal for students, hobbyists, or anyone who just wants to test Cursor’s capabilities without commitment. Of course, free means there are constraints: heavy coding sessions will quickly exhaust 50 requests, after which you’ll have to wait until the next month or upgrade. For some users, the free tier or a cheaper competing tool may be all they need. Either way, as a zero-cost entry point, it lowers the barrier for anyone curious about Cursor.

Pro Version (Individual): The Pro version is Cursor’s standard offering for individual developers and is priced at $20 per month when billed monthly (or about $16 per month when billed annually, a 20% discount). This is the plan most solo developers or freelancers will consider, so let’s detail what $20/month buys:

- 500 “fast” requests per month: These are premium, high-priority requests using the top-tier models (like GPT-4 or Claude 3.7) with quick responses. Essentially, you can ask Cursor for help 500 times in a month at full speed. Each prompt you give to Cursor’s chat or agent counts as one request. For context, if you code regularly (say a few hours a day), 500 requests can actually go by faster than you think – one developer noted that if you code more than an hour a day, “you will rip through your premium requests”.

- Unlimited “slow” requests: After those 500 fast requests are used up, you aren’t entirely cut off. You have unlimited slow requests, which means you can still use Cursor’s AI but likely at a throttled rate or using slower models. The service might make you wait longer between requests or switch to a less expensive model when you exceed your fast quota. The key point is you won’t be forced to stop coding – you just can’t continue at the same high speed or quality of responses until the next cycle.

- Unlimited completions: Cursor can keep suggesting code completions as you type, without a hard limit. Those inline suggestions (similar to GitHub Copilot’s behavior) don’t count against your 500 fast request quota, which is great. You won’t run out of auto-complete style help.

- Full feature access: The Pro version unlocks all features, including Background Agents (Cursor working on tasks in the background), BugBot for debugging, and maximum context windows for larger code analysis. In short, Pro users get the complete Cursor AI experience, with only the monthly request cap to keep in mind.

For many individual developers, $20 a month for these capabilities can be seen as a bargain if it saves significant time. However, as noted, heavy users might find 500 fast requests limiting – we’ll discuss overages and usage patterns shortly in the cost analysis.
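How fast 500 requests disappear depends mostly on how many chat or agent prompts you send per hour (inline completions don’t count). The sketch below assumes a steady 10 prompts per hour, a figure chosen purely for illustration, and uses a hypothetical helper to estimate how many working days the quota lasts.

```python
import math


def days_until_quota_exhausted(quota: int, requests_per_hour: float, coding_hours_per_day: float) -> float:
    """Working days until a monthly fast-request quota runs out at a steady pace."""
    daily_requests = requests_per_hour * coding_hours_per_day
    if daily_requests <= 0:
        return math.inf  # no prompt usage means the quota never runs out
    return quota / daily_requests


# Illustrative assumption: 10 chat/agent prompts per hour of coding
for hours in (1, 2, 3, 4):
    days = days_until_quota_exhausted(500, requests_per_hour=10, coding_hours_per_day=hours)
    print(f"{hours} h/day -> quota lasts about {days:.1f} working days")
```

With roughly 21 working days in a month, these assumptions put the tipping point somewhere between 2 and 3 hours of prompt-heavy work per day; your own prompt rate is the number worth measuring.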

Ultra Plan (Individual Power-User): Next comes the Ultra plan at $200 per month. This is a much higher price point aimed at power-users or perhaps very active freelance developers who live and breathe in code (or simply, those who kept hitting the Pro plan limits and need more). What do you get for 10× the cost of Pro? Essentially, more of everything:

- ~10,000 fast requests per month: The Ultra plan increases the monthly fast request quota by 20× compared to Pro. That translates to roughly ten thousand high-priority requests, effectively removing the concern of “running out” for all but the most extreme users.

- Priority and newest models: Ultra gives priority access to new features and often the very latest, most powerful AI models as they become available. You won’t be stuck with older versions; when Cursor integrates a cutting-edge model (say a new GPT or Claude upgrade), Ultra users typically get it first.

- Uninterrupted agent use: If you rely on Cursor’s AI agent for large refactors or project-wide changes, Ultra ensures it can work continuously without hitting a quota wall. This is useful for enterprise-scale projects or very complex codebases.

In summary, Ultra is about capacity and speed. It’s for developers who found the Pro plan wasn’t enough, perhaps because they were paying for extra requests or sitting idle after hitting limits. At $200/month, it’s a significant investment, so we will later evaluate what kind of developer or team would find this plan worth it. It’s also worth noting that compared to some other AI services or the raw cost of using an API directly, $200 for essentially unlimited high-end coding assistance might still be cost-effective if it replaces a lot of manual effort. We’ll compare alternatives in a later section to put this in perspective.
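A quick way to compare Pro and Ultra is the effective price per fast request, plus the point at which Ultra becomes cheaper than Pro with paid extras. This sketch assumes extra fast requests cost about $0.04 each (the rough per-request figure cited in this article) and that Ultra includes about 10,000 fast requests; both are approximations, and the function names are hypothetical.

```python
PLANS = {
    # name: (monthly price in USD, included fast requests)
    "Pro": (20.0, 500),
    "Ultra": (200.0, 10_000),  # ~20x Pro's quota
}
OVERAGE_RATE = 0.04  # assumed USD per extra fast request on Pro


def cost_per_fast_request(price: float, included: int) -> float:
    """Subscription price spread evenly over the included fast requests."""
    return price / included


def pro_monthly_cost(fast_requests: int) -> float:
    """Pro subscription plus pay-as-you-go extras beyond the included quota."""
    price, included = PLANS["Pro"]
    return price + max(0, fast_requests - included) * OVERAGE_RATE


def ultra_break_even_requests() -> float:
    """Monthly fast requests at which Pro plus extras costs the same as Ultra."""
    pro_price, included = PLANS["Pro"]
    ultra_price, _ = PLANS["Ultra"]
    return included + (ultra_price - pro_price) / OVERAGE_RATE


for name, (price, included) in PLANS.items():
    print(f"{name}: ${cost_per_fast_request(price, included):.3f} per fast request")
print(f"Ultra is cheaper above ~{round(ultra_break_even_requests()):,} fast requests/month")
```

Under these assumptions Ultra halves the per-request price, but it only saves money for someone who would otherwise buy roughly 4,500 extra requests a month; below that, Pro plus occasional extras is cheaper.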

Team and Enterprise Plans: Beyond individual plans, Cursor AI offers Team and Enterprise options for organizations:

- Team Plan: Priced at $40 per user per month when billed monthly (about $32 per user per month with annual billing, the same 20% discount offered on Pro), the Team plan is essentially the Pro plan for each user plus team management capabilities. Teams get everything in Pro (500 fast requests each, etc.), and additionally:

  - Organization-wide Privacy Mode: This ensures your organization’s code isn’t used to train models and is handled with enhanced privacy. Companies care about this when they feed proprietary code into an AI tool.

  - Admin Dashboard & Usage Stats: Managers can see how the team is using Cursor, monitor request usage, and manage licenses centrally.

  - Centralized Billing: Instead of individual developers expensing $20, the company gets one bill for all users, which simplifies accounting.

  - Single Sign-On (SSO) integration: For enterprise authentication (SAML/OIDC), so employees can use their company login to access Cursor.

  - MCP server integration: Connecting Cursor to MCP (Model Context Protocol) servers, for example one that serves API specifications locally, can reduce how much context is sent to the model, lowering token usage and improving cost-efficiency.

At $40/user, this is double the cost of an individual Pro, which covers the added overhead of those management features and presumably the expectation of heavier usage per seat in a team environment.

- Enterprise Plan: For large organizations, there is a custom-priced Enterprise tier. This typically includes everything in Team, and then some extras like:

  - Higher usage limits or custom model access: They might bundle more than 500 requests per user or allow some negotiation on capacity (the “more usage included” note suggests big enterprises can get higher quotas).

  - Advanced security and support: Features like SCIM (automated user provisioning), more granular access controls, priority support and a dedicated account manager come into play.

  - MCP server integration: Enterprise plans can also leverage MCP server integration for further API optimization and cost reduction at scale.

  - Custom pricing: The pricing for Enterprise isn’t public; it’s “Contact Sales” for a tailored quote. Large companies often negotiate a rate based on number of seats and specific needs.

For context, if a company had 10 developers on Cursor’s Team plan at the $40 list price, that’s $400/month or $4,800/year for those 10 seats, before any annual-billing discount. Enterprise deals might lower the per-seat cost for larger volumes, or include Ultra-level usage for certain power users. One cost study estimated that a 500-developer team on Cursor’s Business (Team) tier would pay about $192k per year, which works out to $32 per seat per month.

That’s a useful number to keep in mind for scale, and we’ll compare it to competitor costs later.
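The seat arithmetic is easy to reproduce. In this sketch the $40 list price comes from the Team plan above, while the $32 annual-billing rate is an assumption (a 20% discount mirroring Pro’s); it is also the rate that reproduces the cited $192k figure for 500 developers. The function name is hypothetical.

```python
def annual_team_cost(seats: int, monthly_price_per_seat: float) -> float:
    """Yearly subscription cost for a team at a flat per-seat monthly price."""
    return seats * monthly_price_per_seat * 12


LIST_PRICE = 40    # Team plan, USD per seat per month (monthly billing)
ANNUAL_PRICE = 32  # assumption: 20% annual-billing discount, as on Pro

print(annual_team_cost(10, LIST_PRICE))     # 4800
print(annual_team_cost(500, LIST_PRICE))    # 240000
print(annual_team_cost(500, ANNUAL_PRICE))  # 192000
```

The gap between the last two lines ($48,000 a year at 500 seats) shows why billing cadence is worth negotiating at enterprise scale.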

Cursor’s pricing is built around a small number of tiers focused on core developer features, which makes costs relatively easy to predict and compare across tiers. The release of Cursor 1.0 brought performance and user-experience improvements that strengthened its position among AI coding assistants.

Now that we’ve outlined what each plan offers, you should have a clear picture of Cursor AI’s pricing landscape – from free to $200/month, and individual vs. team features. Next, we’ll dive deeper into how the pricing works under the hood, which will illuminate how those request limits function and why they exist.

Breaking Down Cursor’s Pricing Structure

On the surface, Cursor AI’s pricing might seem straightforward: pay monthly, get AI assistance with some limits. However, there are a couple of key concepts to understand in the pricing structure: the idea of “requests” (fast vs. slow) and the two operating modes, Normal vs. Max mode. Grasping these will help you avoid confusion and make the most cost-effective use of Cursor.

Fast vs. Slow Requests: Cursor’s plans revolve around requests. A “request” is basically any prompt or query you send to the Cursor AI (like asking a question in the chat or invoking the agent to do something). In the Pro plan, you get 500 fast requests per month. These fast requests use the premium, high-speed models (like OpenAI GPT-4 or Anthropic Claude) and return answers quickly. Once you’ve used up those, you can still make requests, but they become slow requests – which are unlimited but are rate-limited or possibly use a smaller model.

Think of it like an all-you-can-eat buffet where you can only fill your plate 500 times with the premium dishes; after that, you can still eat, but only from the simpler salad bar and only after waiting a bit between plates. Why the distinction? Because fast requests consume more resources. Each fast request to a big model like GPT-4 costs the company money (roughly $0.04 per request in raw API costs by one estimate). If Cursor let everyone have unlimited fast requests for $20, it would likely lose money on heavy users. The unlimited slow requests ensure you’re not completely stuck once you hit 500: you can continue coding with AI help, just not as fluidly. The system balances high-quality assistance against Cursor’s own costs while keeping developers productive after the quota runs out. This structure is also a gentle nudge: if you consistently find yourself chafing at the slow mode, you might need to upgrade to Ultra or find ways to optimize your usage.
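The fast/slow split can be modeled as a counter with a fallback. The sketch below is a hypothetical illustration of the behavior described above, not Cursor’s actual implementation: the class name, the `submit` method, and the choice to tag slow requests rather than actually delay them are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class RequestQuota:
    """Hypothetical monthly allowance: fast requests up to a limit, then slow ones."""
    fast_limit: int = 500
    fast_used: int = 0
    slow_used: int = 0

    def submit(self) -> str:
        """Classify one prompt as 'fast' (premium) or 'slow' (throttled fallback)."""
        if self.fast_used < self.fast_limit:
            self.fast_used += 1
            return "fast"
        self.slow_used += 1  # still served, just queued or rate-limited
        return "slow"

    def reset_month(self) -> None:
        """Start a new billing cycle with a full fast-request allowance."""
        self.fast_used = 0
        self.slow_used = 0


quota = RequestQuota(fast_limit=3)  # tiny limit so the switch is easy to see
print([quota.submit() for _ in range(5)])  # ['fast', 'fast', 'fast', 'slow', 'slow']
quota.reset_month()
print(quota.submit())  # fast
```

The property that matters for cost planning is that this model never refuses a request: exceeding the limit changes the quality of service, not the bill.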

From a cost perspective for the user, the good news is that slow requests carry no surprise charges: on Pro you won’t be billed extra for going past 500 requests unless you opt in to paying for additional fast requests; otherwise you’ll simply experience the throttling. In fact, some users treat the 500 fast requests as a budget to manage: they spend fast requests where it really counts (complex tasks) and rely on slower, simpler completions for minor suggestions to stretch their quota. Prioritizing this way means your fastest responses go to the tasks where waiting would slow you down the most.

It’s worth noting how quickly fast requests can disappear. One developer reported, “I have used my 500 + paid for 604 extra requests… I paid $44.16 this month”. This indicates they blew past the 500 included requests and paid roughly another $24 ($24.16, or about $0.04 per extra request) for additional usage, most likely through a pay-as-you-go option for extra requests. It underscores that 500 requests might not be as much as it sounds in a heavy coding month. If you send a flurry of requests in an intense debugging session or while building a feature (each message you send counts as one), they add up. Fast tip: try to formulate prompts that tackle more per request rather than making many small incremental queries; this conserves your quota.
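The anecdote’s numbers can be checked with a one-line calculation: subtract the $20 Pro price from the total bill and divide by the number of extra requests. The helper name is hypothetical, and the result is only what this single bill implies, not an official rate.

```python
def implied_overage_rate(total_bill: float, base_price: float, extra_requests: int) -> float:
    """Average price paid per request beyond the included quota."""
    if extra_requests <= 0:
        raise ValueError("extra_requests must be positive")
    return (total_bill - base_price) / extra_requests


rate = implied_overage_rate(total_bill=44.16, base_price=20.00, extra_requests=604)
print(f"${rate:.2f} per extra request")  # $0.04 per extra request
```

At about $0.04 each, every 25 extra requests adds roughly $1 to the bill, which is why batching several small questions into one prompt pays off.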

Normal Mode vs. Max Mode: Cursor AI has two operational modes which can affect how requests are counted and billed: Normal mode and Max mode. These modes relate to the underlying AI model usage:

- Normal Mode is the default for regular tasks. In normal mode, each user message typically counts as one request (for the chosen model). Cursor optimizes things so that a back-and-forth conversation doesn’t eat multiple requests unless it’s truly heavy. Normal mode uses the included models and respects your fast/slow quota.

- Max Mode is an optional mode designed for high-complexity tasks. When you activate Max mode, Cursor will use more powerful models or multiple model calls to answer a single query. Instead of counting by the single prompt, it effectively counts usage by tokens (the chunks of text processed). In practice, Max mode usage is billed differently – it’s “token-based pricing” where Cursor passes on the cost of the model API usage to you, plus ~20% overhead. This only kicks in if you enable Max mode or run out of normal requests.

In simpler terms, Max mode is like going off the preset menu and ordering à la carte. You’re tapping directly into big models without the normal limits, but you’ll pay per volume of usage. For instance, if you needed Cursor to analyze an entire large codebase or generate a very complex piece of code, Max mode might use thousands of tokens from a model like GPT-4 and charge something like a few cents per thousand tokens for the privilege. Cursor adds ~20% on top of the raw cost (for providing the service/integration).

This is where heavy-duty usage can get expensive if not managed. A complex operation in Max mode might count as dozens or even hundreds of the equivalent “requests” in one go. The benefit is that you can do extremely advanced tasks that would otherwise exceed your normal mode limits. The downside is it introduces a variable cost – you might see usage charges if you rely on Max mode frequently. The Talentelgia analysis noted that Max mode’s flexibility can cause “quick exhaustion of request quotas” if misused, so it’s best reserved for when you truly need that extra power.
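Max mode’s token-based billing can be estimated with a simple formula: the model’s raw API cost for input and output tokens, plus Cursor’s roughly 20% markup. The per-million-token prices below are hypothetical placeholders (check the current rates for whichever model you select), and the function name is illustrative.

```python
def max_mode_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    markup: float = 0.20,  # ~20% on top of the raw API cost
) -> float:
    """Estimated USD cost of one Max mode interaction."""
    raw_cost = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return raw_cost * (1 + markup)


# Hypothetical: a large-codebase task sending 200k tokens of context and getting 10k back,
# on a model priced at $3 per million input tokens and $15 per million output tokens
cost = max_mode_cost(200_000, 10_000, 3.0, 15.0)
print(f"${cost:.2f}")  # $0.90
```

At the rough $0.04 per-request figure cited earlier, that single call costs as much as more than 20 ordinary fast requests, which illustrates how a few heavy Max mode sessions can outweigh a month of routine prompting.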

To summarize the modes and requests: Under normal usage (Normal mode, under your request cap), Cursor’s cost is fixed – you know what you pay per month. If you engage Max mode or consistently blow past your fast request quota, you enter a territory of diminishing returns where either the service slows down or you pay more. So part of cost-effective use of Cursor is managing these modes: use Normal for day-to-day coding, and only invoke Max (which might cost extra) when the task justifies it (like a one-click refactor of a massive project, if such a thing is even possible).

Understanding these mechanics helps in cost analysis: it’s not just how much you pay for the subscription, but how to utilize that subscription wisely. In the next sections, we’ll evaluate Cursor’s cost in context – comparing it with other tools and examining if the value it brings justifies these costs for different kinds of users.

Comparing Cursor AI to Alternatives

No cost analysis is complete without comparing the options on the table. Cursor AI isn’t the only AI coding assistant in town: other popular tools include GitHub Copilot, Tabnine, Replit’s Ghostwriter, Windsurf, and even general-purpose AI like OpenAI’s ChatGPT (with code plugins). Each takes its own approach to AI-powered coding. When evaluating them, consider response speed, since faster suggestions make for a smoother and more efficient workflow, as well as how well each tool handles real-world codebases; performance on open source projects is often used as a benchmark for this. How does Cursor stack up in cost and value against these alternatives? Choosing the tool that fits your development needs and budget is what ultimately maximizes productivity and cost-effectiveness.

Cursor vs. GitHub Copilot

GitHub Copilot is perhaps the best-known AI pair programmer. It was one of the first tools to popularize AI code completions in VS Code and other editors. In terms of pricing, Copilot is cheaper for individual users: $10 per month for personal use. GitHub has also introduced Copilot for Business at $19 per user/month, which includes additional policy controls, and a newer Copilot “Pro+” tier (limited rollout) at around $39 that includes a certain number of GPT-4 requests for code explanations. Even at the high end, Copilot is well below Cursor’s $200 Ultra tier. Anthropic’s Claude Pro plan, which includes Claude Code and costs $17 per month with annual billing, is another more affordable option than Cursor Pro for light users.

So purely on subscription price:

- Copilot individual: $10/month.

- Copilot Business: $19/month per user.

- Copilot (higher tier with GPT-4): ~$39/month per user.

- Cursor Pro: $20/month per user.

- Cursor Team: $40/month per user.

- Cursor Ultra: $200/month.
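Monthly differences look small until they are multiplied out. This sketch annualizes the list prices above at monthly billing (ignoring annual discounts) and compares a hypothetical 10-person team on Cursor Team versus Copilot Business; the dictionary labels are just shorthand for the plans listed.

```python
MONTHLY_PRICES = {  # USD per user per month, from the list above
    "Copilot individual": 10,
    "Copilot Business": 19,
    "Copilot higher tier": 39,
    "Cursor Pro": 20,
    "Cursor Team": 40,
    "Cursor Ultra": 200,
}


def annual_cost(monthly_price: int, seats: int = 1) -> int:
    """Yearly subscription cost at monthly billing."""
    return monthly_price * 12 * seats


for plan, price in sorted(MONTHLY_PRICES.items(), key=lambda item: item[1]):
    print(f"{plan:<20} ${annual_cost(price):>5,} per user per year")

team_gap = annual_cost(MONTHLY_PRICES["Cursor Team"], seats=10) - annual_cost(
    MONTHLY_PRICES["Copilot Business"], seats=10
)
print(f"10 seats, Cursor Team vs Copilot Business: ${team_gap:,} more per year")
```

For a team, the question becomes whether Cursor’s agent and multi-file features are worth roughly $250 per developer per year over Copilot Business.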

Copilot has an edge on cost for the basic offering, but there’s more to the story. Feature comparison: Copilot mainly provides inline code completions, drawing context from the file you’re editing and sometimes neighboring files. Cursor, on the other hand, offers an interactive chat agent, multi-file refactoring, and features like web search integration on the Ultra plan. Cursor is more of an integrated development AI, whereas Copilot feels more like an intelligent autocomplete plus a lightweight chat if you use Copilot Chat (which has historically been less deeply integrated with the file system than Cursor’s agent).

One way to frame it: Copilot excels at inline suggestions and is simple to use, while Cursor offers a broader toolkit (agents, debugging, search) that can potentially handle bigger tasks. But if you just need inline code completion, Copilot might give you 80% of the benefit at half the price of Cursor Pro.

Additionally, Copilot usage is unmetered in the sense that GitHub doesn’t publicly cap how many suggestions you get. However, Copilot does have unpublished rate limits (for example, on how many requests it will send per minute). There is also Copilot Pro+ with GPT-4 chat, which explicitly caps usage (90 GPT-4 requests per day included, according to one report), with per-request charges beyond that whose rates haven’t been fully publicized. So Copilot can incur usage costs at the high end as well, but the typical user doesn’t see extra fees beyond the flat subscription.

Verdict (Cursor vs Copilot): If you’re purely cost-sensitive and mainly want autocompletion, Copilot is the budget-friendly choice at $10. Cursor Pro, at $20, asks for more investment but gives you a richer feature set. Many developers try Copilot first (since it’s cheaper) and move to Cursor if they find Copilot’s scope too limited. Conversely, a developer who specifically wants Cursor’s agent features or larger context handling can justify the higher cost. It’s also notable that Cursor is evolving fast: as a newer startup, it iterates quickly and integrates the latest models (Claude, Gemini, etc.), sometimes faster than Copilot does. For a developer eager to stay at the cutting edge of AI coding, Cursor’s higher price might be worth it. Ultimately, the choice between Copilot and Cursor often comes down to personal workflow and feature preferences, and fitting your AI coding tools to your habits and needs can significantly affect both productivity and satisfaction.

Cursor vs. Other AI Coding Assistants

There are several other players:

- Tabnine: An AI code completion tool that can run locally or in the cloud, known for its focus on privacy (you can self-host). Tabnine’s Teams pricing runs from around $12 per user/month for the basic tier up to $39/month for enterprise features, comparable to Copilot in cost. Tabnine doesn’t yet have a conversational agent like Cursor’s; it focuses on completing your current line or block. Companies with strict privacy requirements sometimes choose Tabnine because it offers an on-premises model. In terms of value, Tabnine is less likely to write whole functions on its own initiative; it’s more a helper than an “AI partner.” Cursor provides more ambitious assistance, at a correspondingly higher cost. As with any assistant, the best fit depends on your specific project requirements.

- Replit Ghostwriter: Built into Replit’s online IDE, Ghostwriter is priced around $10-$20 per month for individuals. It offers code completion and chat, but it’s tied to Replit’s environment, so if you work locally it isn’t directly applicable. Its strength is that it works instantly with your code on Replit and can even come with compute to run that code. For most professional workflows, though, Cursor (a desktop editor built on VS Code) is likely to be more relevant.

- Windsurf: A less well-known but capable competitor, Windsurf is another AI pair programmer with interesting features, such as an AI agent named “Cascade” for multi-step tasks. Windsurf had a Pro plan at around $15/month and a higher plan at around $30/month; according to one startup discussion, it also experimented with a $60 ultimate plan and then removed it. Windsurf’s pricing was reportedly flat with no usage metering, which can be attractive. Feature-wise, Windsurf also aims for an agentic experience, and some users say its UI is nicer. However, Cursor’s web search integration and wider choice of models may give it an edge on complex projects that require advanced planning and problem-solving. On cost, though, Windsurf at $15 is far cheaper than Cursor Ultra and a bit cheaper than Cursor Pro.

- OpenAI ChatGPT & Others: Some developers simply use ChatGPT (particularly ChatGPT Plus at $20/month) for coding help, copying code into the chat to ask for fixes or generating code from prompts. ChatGPT Plus includes GPT-4 with a generous but capped number of messages. It has no IDE integration, so while it’s a viable alternative, it is much less convenient than an in-editor assistant. Cursor’s advantage is being right there in your editor and applying changes directly to your files; ChatGPT requires manual copy-paste, which is time-consuming and error-prone on large codebases. Still, it’s worth mentioning because, from a pure cost perspective, the same $20 also buys general AI capabilities beyond coding.

In comparing all these, a pattern emerges: Cursor AI is not the cheapest option; it sits at the higher end of the price spectrum for individual tools (especially with that Ultra tier). However, it aims to justify the premium with a broader feature set and potentially higher productivity gains.

For instance, one analysis compared the annual cost for 500 developers: Cursor’s business plan came to ~$192k/year, GitHub Copilot’s to ~$114k/year, and Tabnine’s to ~$234k. Cursor was mid-range in that scenario: more expensive than Copilot but cheaper than Tabnine Enterprise. But cost is only one side; if Cursor yields notably more productivity than Copilot for those developers, it could be worth the extra investment.
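Dividing those totals back into per-seat monthly prices is a quick consistency check on the head count. With 500 seats they land on round figures ($19, $32, $39). The $19 and $39 match the Copilot Business and Tabnine enterprise prices quoted above, while $32 sits below Cursor’s $40 list price, which suggests (our inference, not the analysis’s stated method) a discounted or annual rate. With 100 seats, the same totals would imply $95 or more per seat, far above any listed plan.

```python
# Annual totals from the analysis cited above (USD per year).
ANNUAL_TOTALS = {"GitHub Copilot": 114_000, "Cursor": 192_000, "Tabnine": 234_000}


def implied_monthly_seat_price(annual_total: float, seats: int) -> float:
    """Per-seat monthly price implied by an annual total: total / seats / 12."""
    return annual_total / seats / 12


if __name__ == "__main__":
    # Compare the two candidate head counts.
    for seats in (500, 100):
        for tool, total in ANNUAL_TOTALS.items():
            price = implied_monthly_seat_price(total, seats)
            print(f"{seats} seats  {tool:<15} ${price:,.2f} per seat per month")
```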

Ultimately, choosing between Cursor and its alternatives comes down to priorities:

- If low cost and basic functionality are paramount, Copilot or Tabnine might suffice.

- If you want the cutting-edge AI capabilities and don’t mind paying a bit more, Cursor delivers more “power tools” for coding.

- Teams with security concerns might weigh Tabnine (for self-hosting) or waiting for self-hosted Cursor options if they ever arise.

- Individual hobbyists might even stick with free tiers or ChatGPT until they feel the need for deeper integration.

Next, we’ll shift from costs to benefits: what do you actually gain by using Cursor AI, and how does that translate into value in practical terms?

Benefits and Value for Developers

Productivity & Time Savings: The primary selling point of any coding assistant is that it can save developers time. Cursor AI can accelerate many aspects of coding:

- Writing boilerplate: Instead of spending 30 minutes writing out routine code (say a data class, or CRUD functions, or setting up a repetitive test), you can prompt Cursor and get it done in a minute. Over days and weeks, these small savings add up. Early data from organizations using AI coding tools show developers saving on average 2-3 hours per week of coding time due to the AI assistance. Top power-users even save 6+ hours per week in some cases. This is time they can reinvest into more complex tasks or polishing the code rather than drudgery.

- Faster feature completion: By handling the easier parts of coding, Cursor lets developers focus on the tricky logic or unique business problems. It’s like having an assistant write the first draft of code, which you then refine. This can shrink development timelines. Anecdotes from developers often mention, “I finished in a day what would have taken me two or three without the AI.” That kind of acceleration can be crucial for hitting deadlines.

- Context-aware suggestions: Unlike a generic snippet tool, Cursor’s suggestions consider your project’s context. That means less time spent modifying suggestions to fit your code – they often work out-of-the-box. It can autocomplete entire functions that compile on the first try, which feels like magic when it happens.

- Support for large codebases and complex debugging: Cursor is designed for intricate projects, and its real-time interaction helps developers navigate and debug large codebases more efficiently. It also identifies errors in real time, which makes debugging more user-friendly than in some other tools, such as Devin AI.

Time saved is money saved (or earned) in development. If a freelancer can take on an extra project per month thanks to Cursor, the $20 subscription pays for itself many times over. If a team can ship a feature even one week sooner, the business value can be immense (think of beating a competitor to market or closing deals with new capabilities).

Code Quality and Error Reduction: Beyond speed, a good AI assistant can also improve the quality of the output:

- Fewer bugs: Cursor’s Bug Bot and intelligent suggestions can catch mistakes or suggest edge-case handling that a developer might miss. It’s like having a second pair of eyes reviewing your code in real-time. For instance, if you write a function and forget to handle a null input, Cursor might suggest that check. Early catch of bugs means less time debugging later.

- Best practices and learning: The models behind Cursor were trained on a large corpus of code, including best practices and common patterns, so it can suggest more efficient or cleaner ways to implement something. Over time, using Cursor can level up a developer’s skills as you see better patterns and adopt them. For a junior developer, this guidance is especially valuable, almost like a mentor sitting alongside and gently nudging them toward better code.

- Consistency: In a team setting, using the same AI assistant can lead to more consistent code style across developers. Cursor might format or structure code in a certain consistent way, which reduces the “it works but everyone writes it differently” syndrome. Consistency can improve maintainability, which is a long-term value.

- Adapts to individual coding styles: Cursor can tailor its suggestions and workflows to individual coding styles, enhancing productivity and customization for each developer.
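As a hypothetical illustration of the forgotten-null-input case in the first point (not actual Cursor output), here is the kind of guard an assistant might propose. The function name and the choice to return 0.0 for missing data are assumptions made for the example; whether 0.0, None, or an exception is right depends on your code.

```python
from typing import Optional


def average_order_value(order_totals: Optional[list[float]]) -> float:
    """Mean of the given order totals (hypothetical example function).

    Without the guard, None raises TypeError inside sum() and an empty
    list raises ZeroDivisionError; the guard is the kind of edge-case
    handling an assistant might suggest.
    """
    if not order_totals:  # covers both None and []
        return 0.0
    return sum(order_totals) / len(order_totals)


if __name__ == "__main__":
    print(average_order_value(None))
    print(average_order_value([]))
    print(average_order_value([10.0, 20.0]))
```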

Reduced Mental Load: There’s also a less tangible but important benefit: Cursor can reduce the mental fatigue of coding. Routine tasks and looking up syntax/documentation can be draining. When Cursor handles those, developers can preserve their mental energy for creative and complex problem-solving. This often leads to a more positive developer experience – coding becomes more about design and logic, less about fighting the language or Googling stuff. It’s not uncommon to hear developers say that using an AI assistant makes coding more enjoyable again, because they can focus on the “fun” parts.

Collaboration and Knowledge Sharing: In a team, an AI like Cursor ensures that knowledge is not siloed. For example, if only one person knows how to set up the CI pipeline script, others can ask Cursor (assuming the relevant code is in the repository) to help modify or extend it without bothering that expert. The AI has ingested that context. It’s as if each developer had the combined knowledge of the team’s codebase, and beyond, at their disposal. This flattens the learning curve when jumping into a new codebase or area of the code.

All these benefits contribute to Cursor AI’s value proposition. However, it’s important to be realistic: Cursor doesn’t replace developer skill or eliminate all work. You still have to review AI-generated code, ensure it meets your requirements, and integrate it properly. Think of Cursor as a highly skilled assistant – it can draft a lot, but you’re the senior developer who must supervise and finalize the output. When used wisely, the synergy can significantly boost productivity and quality. When used carelessly, one might waste time wrestling with AI suggestions that aren’t quite right. Therefore, part of extracting value is learning how to communicate with the AI effectively (prompt engineering, giving the right context, etc.).

Next, let’s look at some real-world examples and ROI calculations to ground these benefits in actual results and numbers. This will help illustrate scenarios of Cursor AI paying off (or not) in monetary terms.

Real-World ROI of Cursor AI

To truly evaluate Cursor AI’s cost-effectiveness, let’s consider some real-world usage examples and data. Evidence of ROI (return on investment) can be anecdotal (stories from individual developers) or quantitative (metrics from teams), and we have a bit of both. Feedback from real users like these also plays a crucial role in how Cursor refines its features and performance.

Additionally, Cursor's seamless integration into development environments helps minimize workflow disruptions, allowing developers to maintain momentum and productivity compared to less integrated tools.

Case Study: 210k Lines of Code for $40

One compelling example comes from a developer who used Cursor extensively over a month. According to his report, “Cursor coded 210,000 lines of code for me in May. The cost? $40.” Let’s unpack that:

- He was on the Pro plan ($20) and ended up paying an extra $20 for additional requests that month, totaling $40 spent.

- In return, he got 210k lines of code generated. Even if many of those lines were boilerplate or needed tweaking, the sheer volume is impressive for the cost. As the developer put it, “Only $40. I can’t fathom how valuable this is.”

- He mentioned that this output was actually higher than the previous month, even though he spent less money than before (he trimmed his spending from ~$100 down to $40 while getting more done). This implies he learned to use Cursor more efficiently to get better results for less cost – a sign of mastering the tool.

This case study highlights a few things:

Massive Productivity Potential: 210,000 lines in a month is something a whole team might produce, not just one developer. If those lines were genuinely useful code, Cursor effectively amplified this person’s productivity by an order of magnitude. The value of that in normal consulting rates or salary terms would be huge (imagine paying a developer to write 210k lines – it’d be far above $40!).

Efficiency Gains: The fact he reduced cost while increasing output suggests a learning curve payoff. Early on, you might over-use the AI or use it less effectively (thus buying extra credits). Over time, you learn how to instruct it better and integrate it smarter into your workflow, yielding more results for the same or lower cost.

ROI in Dollar Terms: $40 leading to what might be weeks or months worth of coding – that’s an ROI any business would take in a heartbeat. Even if we assume only a fraction of those lines were kept, the cost per line of code (a crude metric, but still) was negligible.
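The per-line arithmetic is easy to reproduce. The $40 and 210,000 lines come from the case study; the keep rates (the share of generated lines that survive review) are hypothetical values chosen to show how the figure moves if much of the output is discarded.

```python
def cost_per_kept_line(total_cost: float, lines_generated: int, keep_rate: float) -> float:
    """Dollars spent per generated line that was actually kept.

    keep_rate is the fraction of generated lines kept, in (0, 1].
    """
    if not 0 < keep_rate <= 1:
        raise ValueError("keep_rate must be in (0, 1]")
    return total_cost / (lines_generated * keep_rate)


if __name__ == "__main__":
    # $40 and 210,000 lines are from the case study; keep rates are hypothetical.
    for keep_rate in (1.0, 0.5, 0.1):
        cents = cost_per_kept_line(40, 210_000, keep_rate) * 100
        print(f"keep {keep_rate:>4.0%}: {cents:.3f} cents per line")
```

Even if only one line in ten were kept, the cost would be about 0.19 cents per line.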

It’s an optimistic example, of course, perhaps an outlier scenario. Not everyone will have that experience. But it demonstrates the upper bound of what’s possible.

Developer Experiences & Feedback

Chris, the experienced Cursor user from the Medium posts (and, as his later results show, the developer behind the 210k-line month above), also shared honest feedback that is useful for cost analysis. In his “Is it worth it?” breakdown, he noted that 500 fast requests were not a lot for him: coding just an hour a day could exhaust them. In one month he used about 1,104 requests (500 included + 604 extra), costing him about $44 in total.
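Chris’s bill can be reconstructed as a base fee plus per-request overage. Backing the rate out of his numbers gives roughly $0.04 per extra request ($24 spread over 604 requests). The sketch assumes exactly $0.04, which is an inference from his figures rather than a published price, and Cursor has changed its usage pricing over time.

```python
PRO_BASE_PRICE = 20.00        # Pro subscription, USD/month
PRO_INCLUDED_REQUESTS = 500   # fast requests included with Pro
OVERAGE_PER_REQUEST = 0.04    # assumption: inferred from the ~$44 bill, not an official rate


def monthly_bill(requests_used: int,
                 base: float = PRO_BASE_PRICE,
                 included: int = PRO_INCLUDED_REQUESTS,
                 overage_rate: float = OVERAGE_PER_REQUEST) -> float:
    """Base subscription plus per-request charges beyond the included quota."""
    extra = max(0, requests_used - included)
    return base + extra * overage_rate


def implied_overage_rate(total_bill: float, requests_used: int,
                         base: float = PRO_BASE_PRICE,
                         included: int = PRO_INCLUDED_REQUESTS) -> float:
    """Back out the per-request price from a bill: (bill - base) / extra requests."""
    return (total_bill - base) / (requests_used - included)


if __name__ == "__main__":
    print(f"implied rate: ${implied_overage_rate(44, 1_104):.4f} per extra request")
    print(f"bill for 1,104 requests: ${monthly_bill(1_104):.2f}")
```

At that assumed rate, 1,104 requests come to about $44.16, in line with the roughly $44 he reported.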

From his perspective:

- Value Achieved: Despite needing extra, he clearly found it worthwhile enough to pay those additional fees. He wouldn’t do that if the tool wasn’t giving him value in return. His write-ups suggest he felt more efficient and “rarely frustrated in a day” using Cursor – indicating a positive experience despite the cost.

- Cost Control: He managed to cut down his spending in subsequent months by being smarter with usage (as the 210k lines example in May showed). This indicates that initial months might be exploratory (and possibly costlier if you go all-out), but once you calibrate, the cost stabilizes or even drops.

- Comparing to Alternatives: He and others in the AI coding community often compare cost to using raw API keys (like directly calling OpenAI’s API). If he didn’t have Cursor, he might have used ChatGPT’s API or similar, which could charge per 1,000 tokens. By one analysis, Cursor’s $20 plan covers about $2.40 worth of raw API calls if fully used, which sounds like an incredible deal. However, that analysis also notes Cursor likely subsidizes a lot thanks to venture funding – meaning right now users are getting more value than they pay for, by design. This is a classic startup strategy: capture market share with low prices now, worry about profits later.

Team ROI: Let’s consider a team scenario. Suppose a team of 5 developers all use Cursor Pro ($20 each, $100/month). If each of those devs saves even 4 hours a month thanks to Cursor (that’s maybe 1 hour a week), and if their fully burdened hourly rate is, say, $50 (a rough estimate for a software engineer when you consider salary, benefits, etc.), then the team saves 20 hours * $50 = $1,000 of productivity value for a $100 cost. That’s a 10x ROI in pure time saved. The reality might be more nuanced, but it shows how quickly the subscription cost can be justified in a professional context.

Now suppose the team instead chose Copilot at $10 each, or $50/month in total. Its ROI might be similar in magnitude, since Copilot saves time too. But if Cursor saved each developer an extra hour or two beyond what Copilot does (thanks to broader capabilities or less switching to external tools), that gap could tilt the balance in Cursor’s favor despite the higher cost.
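The team scenario from the last two paragraphs fits in a small model. The inputs (5 developers, 4 hours saved per developer per month, a $50 loaded hourly rate, $20 Cursor seats, $10 Copilot seats) are the text’s illustrative assumptions, and the extra hour saved with Cursor is hypothetical.

```python
def time_value(devs: int, hours_saved_per_dev: float, hourly_rate: float) -> float:
    """Monthly dollar value of the developer time saved."""
    return devs * hours_saved_per_dev * hourly_rate


def roi_multiple(devs: int, hours_saved_per_dev: float, hourly_rate: float,
                 seat_price: float) -> float:
    """Value of time saved divided by the total subscription cost."""
    return time_value(devs, hours_saved_per_dev, hourly_rate) / (devs * seat_price)


def net_value(devs: int, hours_saved_per_dev: float, hourly_rate: float,
              seat_price: float) -> float:
    """Value of time saved minus the total subscription cost."""
    return time_value(devs, hours_saved_per_dev, hourly_rate) - devs * seat_price


if __name__ == "__main__":
    # Illustrative inputs from the text: 5 devs, 4 h/month each, $50/h.
    print("Cursor multiple: ", roi_multiple(5, 4, 50, 20))
    print("Copilot multiple:", roi_multiple(5, 4, 50, 10))
    # Hypothetical: Cursor saves one extra hour per developer per month.
    print("Cursor net (5 h): ", net_value(5, 5, 50, 20))
    print("Copilot net (4 h):", net_value(5, 4, 50, 10))
```

With equal time savings, Copilot’s multiple is higher (20x versus 10x) simply because its seats cost half as much. With one extra hour per developer, Cursor’s net value ($1,150) overtakes Copilot’s ($950), which is why net value is the fairer basis for comparing the two.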

ROI isn’t only time. It could be better outcomes (fewer bugs = less cost of fixing later, which can be very high). Or employee satisfaction – developers who get to use modern tools might be happier and stay longer at a company, reducing turnover costs.

To be balanced, we should also acknowledge when ROI might not materialize:

- If a developer is very junior and doesn’t know enough to vet AI outputs, they could waste time or introduce bugs by blindly trusting Cursor. In such cases, the tool might even cost time (fixing AI mistakes) until the developer’s skill grows. Mentorship alongside AI is important.

- If a project is very small or in a niche language Cursor isn’t great at, the value might be limited. Paying for Cursor to help with, say, a one-off 100-line script in an obscure language might not be worth it.

- Some developers have a personal coding style and might find an AI assistant distracting or not aligned with how they think. For them, even free might not be worth the “mental cost” if it doesn’t mesh with their workflow. It’s not common, but it happens.

However, for the majority who embrace the tool, the consensus from many real users is that Cursor AI does provide significant value. The combination of anecdotal success stories and emerging productivity research suggests a net positive impact.

Now, armed with understanding of costs and benefits, let’s directly address the question: Is Cursor AI worth it? We’ll consider different types of users – individuals and teams – in the next sections, since “worth it” can vary depending on scale and needs.

Is Cursor AI Worth the Cost for Individuals?

For individual developers – whether you’re a solo freelancer, a student, or just a programming enthusiast – deciding if Cursor AI is worth $20 a month (or $200 for Ultra) comes down to personal usage and budget. Let’s break it down by scenarios:

Hobbyists and Students (Free vs. Pro): If you’re a student or coding as a hobby, the free Hobby plan might actually suffice, at least initially. You get limited completions and requests, but also a 2-week Pro trial to see what you’re missing. Many casual users can stick to the free tier if they code infrequently or are just exploring. For them, the value proposition is straightforward – any help they get is a bonus since they’re not paying. However, if you find yourself bumping against the free limits often and really benefiting from Cursor during the trial, that’s a sign the Pro plan is worth considering. $20 a month could be steep for a student, but think of it this way: if Cursor helps you finish assignments faster or build a side project that you can showcase, it might pay off in better grades or career opportunities. You could also subscribe just during intense project months and cancel when not needed (since monthly is flexible, albeit a bit more expensive than annual).

Freelancers and Indie Developers: If you’re a freelance developer, time literally equals money for you. You likely charge clients per project or hour. Cursor Pro can be a force-multiplier for your work – enabling you to take on more projects or deliver faster (which could justify higher fees or more client satisfaction). For a freelancer, $20 is often less than one billable hour of work. So if Cursor saves you even an hour a month, it’s broken even; if it saves you 5 or 10 hours, it’s a big net gain. Freelancers also wear many hats (front-end, back-end, DevOps, etc.), and Cursor can act as a quick knowledge base when you’re working outside your primary expertise. For example, if you mainly do Python but take a contract requiring some JavaScript, Cursor can help bridge that gap with suggestions and prevent you from getting stuck on syntax or common patterns. In optimistic terms, for independent developers, Cursor AI often pays for itself in productivity.
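For a freelancer, the break-even point is just the subscription price divided by the billable rate. The hourly rates below are hypothetical examples; substitute your own.

```python
def break_even_hours(monthly_price: float, hourly_rate: float) -> float:
    """Hours per month the tool must save to pay for itself."""
    return monthly_price / hourly_rate


def monthly_net_gain(hours_saved: float, hourly_rate: float, monthly_price: float) -> float:
    """Billable value of the hours saved, minus the subscription."""
    return hours_saved * hourly_rate - monthly_price


if __name__ == "__main__":
    for rate in (40, 75, 120):  # hypothetical billable rates, USD/hour
        minutes = break_even_hours(20, rate) * 60
        print(f"${rate}/h: Pro breaks even after {minutes:.0f} minutes saved per month; "
              f"10 hours saved nets ${monthly_net_gain(10, rate, 20):,.0f}")
```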

One caveat: As an individual, you have to ensure you actually utilize the tool. It’s easy to subscribe and then forget to really integrate Cursor into your routine. That’s akin to joining a gym and not going – a pure loss. So, individuals considering Pro should plan to involve Cursor in daily coding: use it for code reviews (“Cursor, find potential issues in this code”), for writing docs (“Cursor, draft a docstring for this function”), etc. The more you use it (up to the limit), the more value you get for the fixed cost.

Power Users (Considering Ultra): Now, what about that $200/month Ultra plan – could it be worth it for a solo developer? For most, probably not. Ultra is targeted at extreme power users. Think of someone building a complex application single-handedly and essentially coding full-time with heavy AI assistance. If you find that every month you exhaust Pro’s limits and still crave more (and are perhaps already paying extra usage fees nearing or exceeding $100), that’s when Ultra might make sense. With Ultra, you’re effectively saying: “I want no holds barred, full throttle AI help.” It could make sense if, for example, you’re a contractor handling multiple projects concurrently – you might use Cursor to generate large portions of each project quickly. Or if you’re in a hackathon or startup sprint mode trying to build a prototype in record time, a month of Ultra could accelerate development significantly.

However, for a typical individual developer, $200/month is a lot (that’s $2,400/year, which could buy a high-end laptop or a lot of cloud services). You’d need to be sure that investment is recouped either financially or through massive time savings that you value. The general advice is: start with Pro; if you consistently hit its ceiling and it’s slowing you down, upgrade to Ultra for the heavy months.
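To make the Pro-versus-Ultra decision concrete, this sketch compares Pro plus overage against Ultra’s flat $200. It assumes 500 included fast requests and an overage price of $0.04 per request; that rate is inferred from Chris’s reported bill rather than taken from Cursor’s price list, and Cursor has revised its usage pricing over time. Amounts are kept in integer cents to avoid floating-point rounding.

```python
PRO_BASE_CENTS = 2_000        # Pro subscription: $20/month
ULTRA_CENTS = 20_000          # Ultra subscription: $200/month
PRO_INCLUDED_REQUESTS = 500   # fast requests included with Pro
OVERAGE_CENTS = 4             # assumption: ~$0.04 per extra request


def pro_cost_cents(requests: int) -> int:
    """Monthly Pro bill in cents: base fee plus overage beyond the included quota."""
    return PRO_BASE_CENTS + max(0, requests - PRO_INCLUDED_REQUESTS) * OVERAGE_CENTS


def ultra_break_even_requests() -> int:
    """Smallest request count at which Pro plus overage costs at least as much as Ultra."""
    extra_budget = ULTRA_CENTS - PRO_BASE_CENTS
    extra_requests = -(-extra_budget // OVERAGE_CENTS)  # ceiling division
    return PRO_INCLUDED_REQUESTS + extra_requests


if __name__ == "__main__":
    for requests in (1_000, 3_000, 5_000, 8_000):
        pro = pro_cost_cents(requests) / 100
        print(f"{requests:>5} requests: Pro ${pro:.2f} vs Ultra ${ULTRA_CENTS / 100:.2f}")
    print("break-even:", ultra_break_even_requests(), "requests per month")
```

On these assumptions Ultra only saves money above roughly 5,000 requests a month (about $180 of overage); upgrading earlier buys headroom and convenience rather than a lower bill.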

Quality of Life and Learning: Worth mentioning is that individuals also derive qualitative value from tools like Cursor. It can make coding feel more supported and less frustrating, almost like working with a buddy. If you’re a lone developer (freelancer or one-person startup), having Cursor is like having an ever-present assistant to bounce ideas off or get unstuck. That moral support factor is hard to price, but it contributes to whether something feels “worth it.” Many developers are optimistic about AI tools because they reduce the loneliness of solo coding – you have something to interact with. If that keeps you motivated and happy, it’s worth some cost.

Alternatives for Individuals: Individuals should also weigh alternatives (like Copilot at half the price, or using ChatGPT). If Copilot meets your needs, you might opt for the cheaper route. But if during your trials you find Cursor’s suggestions are meaningfully better or the features much richer, then paying extra is rational. Some developers do subscribe to multiple services (e.g., both Copilot and Cursor) to combine strengths, but that’s for those really deep in the AI coding realm.

In conclusion for individuals: Cursor AI Pro is generally worth the cost if you are coding regularly and can leverage its features to save time or improve your code. The free tier is great for occasional use or trying before buying. Ultra is only worth it for an elite few who max out the Pro plan constantly. Always consider your own workflow and budget – if you’re only coding a couple of hours a month, you might not need a paid plan at all. But if you’re coding daily, Cursor can quickly become an invaluable part of your toolkit, justifying its monthly fee through tangible productivity boosts and intangible improvements in your development experience.

Is Cursor AI Worth the Cost for Teams?

For teams and organizations, the calculation of worth becomes a bit more complex (but also potentially more rewarding). Teams usually look at tools like Cursor AI in terms of how it impacts overall velocity, code quality, and developer happiness, weighed against potentially thousands of dollars per year in subscription fees. Let’s analyze.

Small Teams / Startups: Imagine a small startup with 5 developers. If they all get on Cursor’s Team plan, that’s $40 * 5 = $200 per month, or $2,400/year at the monthly rate (roughly $2,000 if annual billing brings a discount). In startup budgeting, that is not huge: one decent laptop or a fraction of a developer’s salary. If Cursor helps those 5 devs deliver even one additional feature or avoid a major bug in a year, it likely pays for itself. Small teams benefit because they often have to move fast with limited manpower; Cursor acts like an extra team member who can pick up slack by handling grunt work or providing expertise on demand.

There’s also a competitive edge aspect: if your startup can build features faster than a rival because your devs are turbocharged by AI, that’s potentially worth far more than the cost of the tool. In optimistic terms, providing Cursor to your dev team can be viewed as investing in developer productivity infrastructure, similar to giving them good hardware or monitors – it’s a relatively small cost for a boost in output.

Large Teams / Enterprises: For a big company, say with 100 developers at $40 each, that’s $4,000/month or $48,000/year (list price). Enterprises often negotiate better deals, but let’s use list prices for fairness. $48k/year for 100 devs is actually not bad in enterprise software terms (companies pay much more for other developer tools or licenses). If those 100 devs each save a couple of hours a month, the company easily recoups that in productivity. At larger scale, one analysis pegged GitHub Copilot at ~$114k/year and Cursor at ~$192k/year for 500 devs, so Cursor was costlier per seat than Copilot by that estimate. A company might ask: why pay $192k instead of $114k? The answer has to be that the additional value from Cursor (features, time saved, etc.) exceeds the difference, i.e. more than $78k of value. For an enterprise, $78k might be the salary of one junior developer, so Cursor breaks even if it makes the existing 500 devs as productive as 501 devs (a 0.2% improvement); a 2% improvement would be worth about ten extra developers. Put that way, even a small efficiency gain across the board can justify the higher expense.
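The break-even gain is the extra annual cost divided by the team’s annual payroll. The sketch uses the analysis’s 500-developer totals and the paragraph’s rough assumption of $78k per developer; both are illustrative, and fully loaded costs per developer are usually higher, which would lower the threshold further.

```python
def break_even_gain(extra_annual_cost: float, devs: int, annual_cost_per_dev: float) -> float:
    """Fraction of extra output needed for the pricier tool to pay for its premium."""
    return extra_annual_cost / (devs * annual_cost_per_dev)


def developer_equivalents(gain: float, devs: int) -> float:
    """How many extra developers a given fractional gain is worth."""
    return gain * devs


if __name__ == "__main__":
    extra = 192_000 - 114_000   # Cursor vs Copilot for 500 devs, per the analysis
    gain = break_even_gain(extra, 500, 78_000)  # $78k/dev is an illustrative assumption
    print(f"break-even gain: {gain:.1%} ({developer_equivalents(gain, 500):.0f} developer)")
    print(f"a 2% gain is worth {developer_equivalents(0.02, 500):.0f} developers")
```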

Team Collaboration and Quality: Teams also care about consistency and collaboration. Cursor’s Business plan features, such as admin dashboards and org-wide settings, can enforce best practices (for example, Privacy Mode to ensure none of the proprietary code gets leaked). That has value for risk management. When all team members use the same AI, they can share usage tips, and less experienced devs can rely on it when seniors are busy, reducing bottlenecks where junior devs wait for help. It doesn’t replace mentorship, but it supplements it. Developers who build Model Context Protocol (MCP) tools can also integrate them with Cursor, which supports one-click installation, OAuth, and easy distribution within its ecosystem, further streamlining workflows for teams adopting Cursor.

Training and Adoption: There is some overhead to consider. Rolling out Cursor to a team means training people to use it effectively, because the team needs hands-on experience before the gains appear. If some devs rarely use it, their licenses are wasted money, like unused gym memberships the company is paying for. One pricing-strategy analysis made exactly this Planet Fitness comparison, noting that many enterprise licenses may go underused. Companies should therefore monitor usage via the admin dashboard: if only 30% of licenses are active, they may have over-provisioned, or they may need internal advocacy or training sessions to increase adoption. The best ROI comes when a large share of the team actively uses the tool regularly. According to research, top organizations see 60-70% weekly usage of AI tools among developers; if you reach that level, your ROI should be solid. If only 10% use it weekly, it’s likely not worth it yet.
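Under-used seats raise the effective price paid for each developer who actually uses the tool. This sketch divides total seat spend by active users. The 100 seats at $40 follow the enterprise example above; the active-user counts are hypothetical points matching the 10%, 30%, and 60-70% levels mentioned in the text.

```python
def cost_per_active_user(seat_price: float, seats: int, active_users: int) -> float:
    """Monthly seat spend divided across the users who actually use the tool."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return seat_price * seats / active_users


if __name__ == "__main__":
    # 100 seats at $40; active-user counts are hypothetical.
    for active in (10, 30, 65):
        cost = cost_per_active_user(40, 100, active)
        print(f"{active}/100 active: ${cost:,.2f} per active user per month")
```

At 10% adoption, each active user effectively costs $400 a month, ten times the list price.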

Copilot Business vs Cursor Team: Many companies will compare these two. Copilot Business at $19 vs Cursor at $40 (per user). Copilot might feel like the safer, budget choice, especially if the company is already a Microsoft/GitHub customer. Cursor has to prove its added features justify doubling the cost. One angle is that a company might empower certain teams or leads with Cursor Ultra ($200 for a power user) and give others Copilot – a hybrid approach. In fact, Apidog’s analysis mentioned that for the price of 5 business seats ($40 * 5 = $200), a team could alternatively get one Ultra seat. If only one developer in that team needs heavy usage (say a principal engineer doing massive refactors), they might do that and give others Pro.
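The hybrid options can be priced side by side. Seat prices are the figures quoted in this article (Cursor Business $40, Pro $20, Ultra $200, Copilot Business $19); the mixes are illustrative, and the sketch ignores feature differences between plans, such as the admin features that come with the Business plan.

```python
# Monthly seat prices quoted in this article (USD); a snapshot that may change.
SEAT_PRICES = {
    "cursor_business": 40,
    "cursor_pro": 20,
    "cursor_ultra": 200,
    "copilot_business": 19,
}


def monthly_cost(mix: dict[str, int]) -> int:
    """Total monthly cost of a seat mix such as {"cursor_ultra": 1, "cursor_pro": 4}."""
    return sum(SEAT_PRICES[plan] * count for plan, count in mix.items())


if __name__ == "__main__":
    # Illustrative mixes for a five-person team.
    mixes = {
        "5 x Cursor Business": {"cursor_business": 5},
        "1 x Ultra + 4 x Pro": {"cursor_ultra": 1, "cursor_pro": 4},
        "1 x Ultra + 4 x Copilot Business": {"cursor_ultra": 1, "copilot_business": 4},
        "5 x Copilot Business": {"copilot_business": 5},
    }
    for label, mix in mixes.items():
        print(f"{label:<34} ${monthly_cost(mix)}/month")
```

Swapping five Business seats for one Ultra plus four Pro seats raises the bill from $200 to $280, so the hybrid pays off only if the heavy user’s extra capacity is worth that $80 a month.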

It really depends on the workload: If the team often works on large-scale code changes, or has periods of crunch where they’d benefit from unlimited AI support, investing in a few Ultra licenses could be gold. If their work is more steady and not AI-intensive, Pro or Business seats are fine.

Enterprise Support & Security: Another factor for “worth it” at enterprise level is support and security. Cursor being a newer company, enterprises might be cautious – but they do offer enterprise agreements with presumably stronger support. If a bug in Cursor halts work, business customers will want fast help. Also, things like SSO integration (included in Business plan) and data privacy commitments are valuable for companies. They might pay more for Cursor if it means, for example, that all code stays in a certain region or is not used to train models (Copilot had controversies about training on user code; Cursor offers a Privacy Mode to alleviate that).

Developer Retention: This is rarely in the spreadsheets, but giving developers cutting-edge tools can be a perk. If your company is one of the early adopters of AI coding assistants, developers might feel they’re in a progressive environment and are more likely to stay or be attracted to join. Conversely, if your competitors are using AI and you aren’t, you might lose talent to them. It’s a bit of an arms race in tech – and relatively speaking, the cost of these tools is minor compared to the cost of losing good engineers. So from an HR perspective, one could argue it’s “worth it” to provide such tools just to keep the team happy and competitive.

In conclusion for teams: Cursor AI tends to be worth the cost when it is integrated properly into the team’s workflow and the team actually uses its advanced features. Even a small per-developer productivity gain can outweigh the per-seat fee, and the larger the team, the larger the absolute savings. However, organizations must manage adoption to avoid paying for unused licenses. They should also keep evaluating whether the team benefits enough, and adjust the mix of tools if needed (some might even run a pilot comparing Copilot and Cursor across different sub-teams to measure impact). As of 2025, many companies are still experimenting with these assistants, but those that have embraced them are generally seeing positive returns. Cursor’s value for teams is real, especially for those who push its capabilities, but it requires a team that is ready and willing to integrate AI into its daily development practice.
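A quick break-even check illustrates the team-size point. The Python sketch below assumes a hypothetical fully loaded cost of $80 per developer-hour (substitute your own figure) and the $40 team seat price quoted above. It shows that the break-even threshold per developer does not depend on headcount, while the total net gain scales with the number of developers.

```python
def break_even_hours(seat_price: float, loaded_hourly_cost: float) -> float:
    """Hours a developer must save per month for a seat to pay for itself."""
    return seat_price / loaded_hourly_cost


def team_net_monthly(devs: int, hours_saved_each: float,
                     loaded_hourly_cost: float, seat_price: float) -> float:
    """Value of time saved across the team minus total seat fees, per month."""
    return devs * (hours_saved_each * loaded_hourly_cost - seat_price)


if __name__ == "__main__":
    HOURLY = 80.0  # hypothetical fully loaded cost per developer-hour
    SEAT = 40.0    # team price per user per month quoted in this article
    print(f"break-even: {break_even_hours(SEAT, HOURLY):.1f} hours saved per dev per month")
    for devs in (5, 50):
        net = team_net_monthly(devs, 2, HOURLY, SEAT)
        print(f"{devs} devs saving 2 h each: net ${net:,.0f}/month")
```

Under these assumptions, saving just half an hour per developer per month covers the seat, and two hours saved each nets $600 a month for 5 developers versus $6,000 for 50.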

Tips to Maximize Value from Cursor AI

Whether you’re an individual or part of a team, maximizing the value of Cursor AI means getting the most assistance and time savings out of it for the least cost. Here are some tips and best practices to ensure you’re leveraging Cursor effectively and cost-efficiently:

- Learn the Tool and Features: First, invest time in learning what Cursor can do. It sounds obvious, but many users only use a fraction of the features. Explore the documentation and try out things like Background Agents, Bug Bot, or multi-file search if available. The more of Cursor’s capabilities you tap into, the more you’re squeezing value out of that monthly fee. It’s like having a Swiss Army knife – it’s only useful if you know all the attachments and when to use them.

- Optimize Your Requests: Since the Pro plan caps fast requests at 500, use them wisely. Instead of rapid-firing prompts, take a moment to write clear, detailed prompts that can achieve more in one go. For example, rather than spending five separate requests on “Now write function X… Now function Y…”, you could give Cursor a higher-level request: “Generate functions X and Y to accomplish Z, using best practices.” One request may then yield multiple pieces of code. The same applies to small, unrelated tasks: if you have several, combine them into one prompt where possible (the AI usually handles multi-part prompts well).

- Use Slow Mode Strategically: After your fast requests are used up, slow requests remain unlimited but may be throttled, so answers arrive with a delay. If you hit your limit mid-month, consider adjusting to the slower pace rather than immediately buying more requests. For instance, you might save non-urgent AI questions for the next morning or do some manual coding in the meantime. If you can tolerate the delay for a while, you avoid extra costs. Also consider using a personal API key for some tasks if Cursor allows it (some features may let you plug in your own OpenAI key, billing your OpenAI account instead, which could be cheaper for sporadic extra usage).

- Leverage the Free Trial and Referral Programs: If you haven’t started yet, make full use of the 2-week Pro trial in the free plan. Time it wisely: start it when you have a lot of coding to do (e.g., when a project is in full swing) so you can really see the benefit. Additionally, check if Cursor has any referral bonuses or student discounts – some services provide free months for referring others or cheaper rates for academia.

- Stay Updated on New Features: Cursor is evolving rapidly. New features might further justify its cost by adding value. For example, they might introduce an improved debugging agent or support for a new language. By staying updated (follow their blog or changelog), you can incorporate new features into your workflow early. Sometimes a new feature can drastically improve your experience (imagine if they add a feature to automatically generate project documentation – that could save hours).

- Combine Tools for Best Effect: If you find one tool doesn’t cover all bases, don’t be afraid to use a combination. For instance, some developers use both Cursor and ChatGPT. They use Cursor for code generation in-editor and ChatGPT for higher-level design discussions or explanations. ChatGPT Plus is $20 – if you already have it, use it to offload some questions that you might not want to count against Cursor’s quota (like “Explain this error message” or “What’s the complexity of this algorithm”). Conversely, use Cursor for the things it’s uniquely good at (applying changes to code, indexing your repository, etc.). Using each tool for what it’s best at can maximize overall productivity.

- Regularly Review Usage Stats: If you’re on a Team plan, use the admin dashboard to see usage. If you’re an individual, keep a rough tally of how many requests you use. If you consistently use only, say, 100 of your 500 requests, you may be overpaying and could even drop to the free plan for a while if cost is a concern (though the free tier is a big step down). If you’re consistently maxing out by day 10, you may truly need Ultra, or at least need to change your prompting habits.

- Encourage Team Knowledge Sharing: For teams, have developers share how they use Cursor. Someone might discover a neat trick (like using the @Files context reference to apply changes project-wide). Sharing these tips means everyone benefits, which raises the average ROI per user. Also set some guidelines, for example deciding when to trust Cursor’s code and when to do extra review, to avoid losing time debugging AI mistakes. A short internal “AI assistant best practices” wiki can go a long way.

- Use Privacy Mode When Needed: If you’re concerned about sensitive code, turn on Privacy Mode (especially on team plans). It keeps your code from being used to improve the models; it might slightly limit some functionality, but it ensures you’re comfortable using Cursor on every part of your codebase. Being able to use it everywhere, even in sensitive modules, means you get full value across your project.

- Plan for Peak Usage: If you know a crunch period or hackathon is coming, you might upgrade temporarily or budget for extra capacity. For example, a team could enable a few Ultra licenses just for a heavy sprint month and downgrade afterwards. Or an individual could accept paying extra for more requests that month. Planning ahead prevents panic upgrades and helps you make the most of heavy-usage periods (don’t start a critical sprint on day 28 of your billing cycle with most of your quota already spent; time it to begin just after a reset for maximum headroom).
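The usage-review and peak-planning tips can be partly automated. This Python sketch linearly projects month-end request usage from your count so far, assuming the 500 fast-request Pro quota and a 30-day billing cycle. The 25% “underused” threshold and the function names are hypothetical choices, and Cursor’s own dashboard figures should take precedence.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding surprises."""
    return -(-a // b)


def projected_month_end(used: int, day_of_cycle: int, cycle_days: int = 30) -> int:
    """Requests you will have made by cycle end if the current pace holds."""
    return ceil_div(used * cycle_days, day_of_cycle)


def advise(used: int, day_of_cycle: int, quota: int = 500, cycle_days: int = 30) -> str:
    projected = projected_month_end(used, day_of_cycle, cycle_days)
    if projected > quota:
        # Day of the cycle on which the quota runs out at the current pace
        runout_day = ceil_div(quota * day_of_cycle, used)
        return f"on pace for {projected}; quota exhausted around day {runout_day}"
    if projected < quota // 4:  # hypothetical "underused" threshold (25%)
        return f"on pace for {projected}; a cheaper plan may suffice"
    return f"on pace for {projected}; current plan fits"


if __name__ == "__main__":
    print(advise(250, 10))  # heavy user
    print(advise(50, 15))   # light user
    print(advise(300, 20))  # comfortable fit
```

A linear projection is crude (usage is rarely even across a sprint), but it is enough to flag a quota that will run out mid-cycle before it actually does.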

By following these tips, you can ensure that every dollar spent on Cursor AI yields as much value as possible. Essentially, treat Cursor not just as a magic box, but as a tool you need to wield skillfully. The more proficient and strategic you are in using it, the more it will feel worth its cost many times over.

Finally, even as you maximize value, periodically re-evaluate: technology and your project needs evolve. Today’s optimal toolset might change in a year. But given the current trajectory, AI coding assistants like Cursor are likely only going to get more capable, which means the value side of the equation should keep growing.

Potential Drawbacks and Considerations

In the spirit of a balanced cost-benefit analysis, we should also address potential drawbacks, limitations, or concerns with Cursor AI that could affect its value proposition. No tool is perfect, and knowing these considerations can help you make an informed decision and mitigate any issues if you adopt Cursor.

Hitting Usage Limits & Throttling: One immediate concern for those on the Pro plan is hitting the 500 fast request limit too soon. As discussed, active coders can burn through those in less than a month. When that happens, you face two choices: endure slow mode or pay extra. Slow mode might disrupt your flow due to waiting times or reduced model quality, which can be frustrating if you’re in the middle of something. On the other hand, paying per extra request (around $0.04 for standard requests as a guideline) can rack up costs quietly if you’re not careful. This introduces a bit of a mental overhead – you may find yourself counting requests or hesitating to use the tool freely because you’re budget-conscious. Some users might feel constrained, which slightly defeats the purpose of a seamless coding assistant.

Mitigation: Plan your usage (per the tips above) and keep an eye on how close you are to the limit. If you regularly hit it, consider budgeting for Ultra during heavy development phases. It’s also worth knowing that Cursor’s team is aware of usage patterns and has set the limits expecting many users won’t hit them. If you are the outlier who does, you’re exactly the person Ultra is for, or you can offload some tasks to an alternative like ChatGPT.
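The overage trade-off can be estimated with a few lines of Python. This sketch uses the figures quoted in this article (Pro at $20 for 500 fast requests, roughly $0.04 per extra standard request, Ultra at $200) and deliberately ignores Max mode’s token-based pricing and Ultra’s own limits, so treat the break-even point as a rough guide rather than an official threshold.

```python
PRO_PRICE = 20.0
PRO_FAST_REQUESTS = 500
OVERAGE_PER_REQUEST = 0.04  # approximate guideline figure, not an official rate
ULTRA_PRICE = 200.0


def pro_monthly_cost(requests: int) -> float:
    """Pro subscription plus pay-per-request overage beyond the fast quota."""
    extra = max(0, requests - PRO_FAST_REQUESTS)
    return PRO_PRICE + extra * OVERAGE_PER_REQUEST


def ultra_break_even_requests() -> int:
    """Monthly request count at which Pro plus overage costs as much as Ultra."""
    return PRO_FAST_REQUESTS + round((ULTRA_PRICE - PRO_PRICE) / OVERAGE_PER_REQUEST)


if __name__ == "__main__":
    for n in (400, 1500, 3000):
        print(f"{n} requests on Pro: ${pro_monthly_cost(n):.2f}")
    print(f"Ultra becomes cheaper above ~{ultra_break_even_requests()} requests/month")
```

Under these assumptions, 1,000 paid extra requests triple a $20 bill to $60, while waiting things out in slow mode costs nothing extra; Ultra only wins on price at around 5,000 requests a month.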

Learning Curve and Workflow Integration: Although Cursor is designed to integrate into your IDE, there is a learning curve to using it effectively. At first it might even slow you down as you work out how to phrase prompts or instruct the agent. Some developers get frustrated when Cursor doesn’t do “exactly what I meant” and conclude, “I could’ve written that faster myself.” If they give up during this initial hump, the tool will feel not worth it. There can also be interface quirks: hypothetically, the UI might show multiple “Accept” buttons for a completion without making clear which one to click, and some users did report slight clunkiness or confusion in early versions of Cursor’s UI. When a developer has to fight the tool, productivity temporarily drops.

Mitigation: Patience and practice. Like using Git or any powerful tool, the first day might be awkward. After a week, you often wonder how you lived without it. Encouraging a team to share prompt strategies can shorten the learning curve. The company is likely refining the interface over time, so usability should improve.

Quality of AI Output: While Cursor leverages top AI models, those models aren’t infallible. They might produce incorrect code, security vulnerabilities, or code that works but is suboptimal. If a developer becomes over-reliant and stops thinking critically, bugs can slip through. There’s a trust factor – some orgs might be uneasy letting an AI write important code, fearing it could introduce an issue that none of the human team fully understands. This isn’t unique to Cursor (Copilot and others have similar issues), but it’s a consideration: you still need thorough testing and code review when using AI-generated code. That’s time that must be spent, which chips away at some of the gross time savings (though net you likely still save time).

Mitigation: Use the AI for what it’s good at, and always review. For critical code, treat Cursor’s suggestion as a first draft that you scrutinize. Over time, you’ll get a feel for when Cursor is likely to be right vs when you should be cautious (e.g., it might be great at suggesting a sorting algorithm, but if it’s a complex multi-threading routine, you might double-check it carefully).

Cost Creep and Pricing Changes: Cursor’s current pricing is, by many accounts, quite aggressive (low) for what it offers – subsidized by venture capital. This raises a question: will these prices hold, or will they increase in the future? As the Startup Spells analysis noted, today’s $20 might be tomorrow’s higher fee once market share is captured. If a user or team builds a workflow deeply around Cursor and then prices rise or free quotas shrink, that could become a drawback. It’s the risk of any cloud service: you don’t fully control the pricing.

At the moment, Cursor is something of a bargain in terms of raw capabilities per dollar. But if, say, a year from now they cut the fast-request allowance or raise Pro to $30, you’d have to re-evaluate. The prediction that “only 2-3 tools will survive long-term and then charge actual cost + profit” suggests that industry consolidation could change the economics. In short, pricing sustainability is a consideration: what’s a great deal in 2025 might not remain so in 2026, depending on business decisions.

Mitigation: Keep an eye on the market. Have backup plans; for example, if Cursor’s price became untenable, could you switch to Copilot or another competitor? Also, provide feedback to Cursor’s team – if pricing or limits are a pain point, often startups are responsive to user concerns. They might introduce a middle tier or adjust if enough demand is there (the absence of a $60 plan was strategic, but if user segments emerge that need something in between Pro and Ultra, maybe they’ll make something).

Privacy and Compliance: Some companies might worry about sending their code to a third-party AI service. Cursor says you own your generated code and offers privacy modes. Nonetheless, if you’re in a highly regulated industry, legal might have to vet the tool. This isn’t exactly a drawback of Cursor alone – any AI tool has this hurdle. But if you cannot use it on parts of your codebase due to policy, then its value is diminished. Also, while unlikely, any cloud service could have outages. If Cursor is down, developers might be impacted (whereas without it they’d just code normally). Relying on it is a double-edged sword – great when it’s working, a temporary setback if it faces downtime or an API issue.