Debugging AI-Generated Code with Lovable AI: Essential Tips

AI can write code at lightning speed, but when bugs pop up, you need the right strategies to fix them. While Lovable AI streamlines the process, there is still a learning curve involved in mastering AI-assisted debugging, even on no-code platforms. Learn how to troubleshoot AI-generated code confidently using Lovable AI’s tools and best practices. This comprehensive guide covers common issues, expert debugging techniques, and FAQs to help you turn those “AI did something weird” moments into learning opportunities.

Combining traditional debugging methods with AI-specific strategies is key to success. To get the most out of Lovable AI, it’s important to have a basic understanding of coding and prompt engineering, as this foundational knowledge will help you make effective modifications and troubleshoot issues more efficiently.

Outline

Introduction – Overview of AI-generated code and why debugging it with Lovable AI is crucial. Mention the rapid rise of AI coding tools and the need for effective debugging skills in a no-code/low-code platform.

Understanding AI-Generated Code and Its Challenges – Explain what AI-generated code is (e.g. code produced by Lovable AI or similar platforms) and the typical challenges it brings (unexpected errors, unfamiliar patterns, technical debt).

Overview of the Lovable AI Platform – Briefly introduce Lovable AI as a no-code AI app builder. Highlight how Lovable AI generates code based on user prompts (“vibe coding”), and what makes debugging in Lovable unique (AI-assisted fixes, chat mode, etc.).

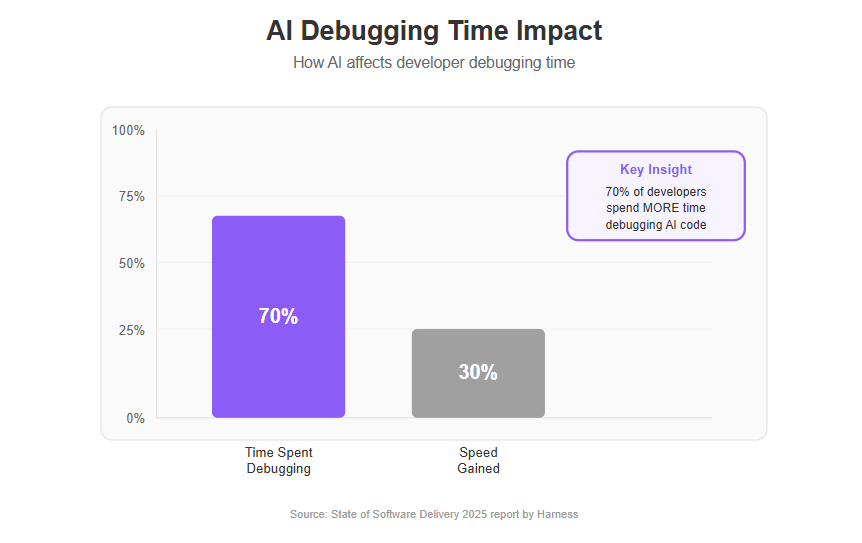

Why Debugging AI-Generated Code with Lovable AI is Important – Discuss the importance of debugging AI-written code. Include statistics or findings (e.g. “70% of developers spend more time debugging AI-generated code”) to illustrate how crucial troubleshooting is for AI-assisted development.

Common Issues in AI-Generated Code – Outline common problem areas: logic errors, UI layout glitches, integration issues (e.g. with backend or APIs), misinterpretation of prompts, and technical debt from code duplication. Emphasize that these issues can occur even when using advanced AI like Lovable.

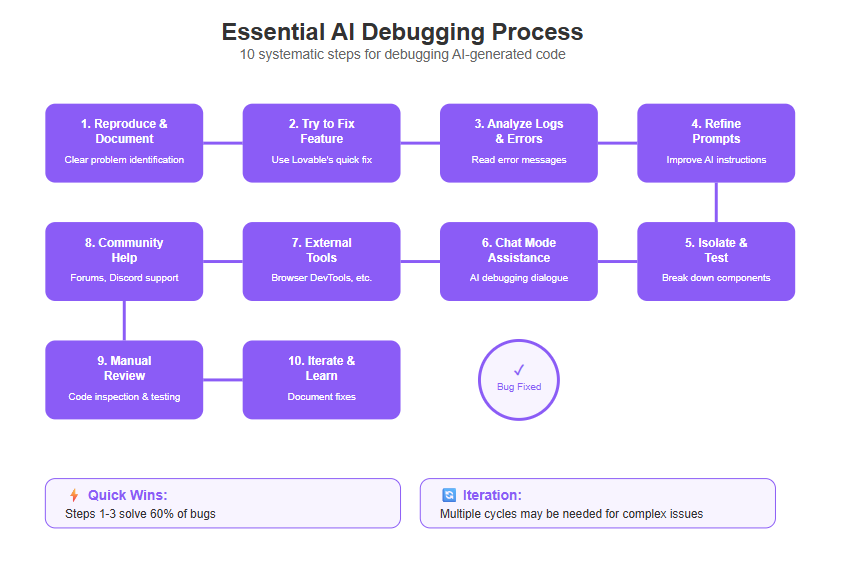

Essential Tip 1: Reproduce and Document the Problem – Stress the first step of debugging: clearly reproduce the bug and note what happened. Encourage documenting steps to recreate issues, error messages, and expected vs actual outcomes. This sets a baseline for troubleshooting (e.g. “Include details like steps to reproduce the problem, error messages, and expected vs actual behavior”).

Essential Tip 2: Use Lovable’s “Try to Fix” Feature First – Explain Lovable’s one-click fix tool. When an error appears, hitting “Try to Fix” prompts the AI to scan logs and automatically attempt a solution. It’s a fast first move to resolve simple issues without manual intervention.

Essential Tip 3: Analyze Logs and Error Messages – Encourage checking Lovable’s logs or any error output thoroughly. AI-generated code may fail silently or throw errors—developers should read these clues. For UI issues, use browser dev tools or Lovable’s visual debug mode to inspect elements. For functional bugs, look at console errors or Lovable’s build logs. Understanding what went wrong is key to guiding the next fix.

Essential Tip 4: Refine Your Prompts and Project Structure – If the AI misinterpreted your intent, the original prompt might need improvement. Advise writing clearer, more structured prompts: describe the feature in detail, specify constraints (libraries, frameworks), and even mention what not to change (guardrails). Also, build features in a logical order (set up pages, then database, then auth, etc.) to prevent complexity later. Proper planning and prompt clarity can prevent many bugs from occurring.

Essential Tip 5: Isolate and Test Components – Debug systematically by isolating the code sections. If a feature isn’t working, break it down: test individual components or functions to pinpoint where the issue lies. For example, if a form submission fails, test just the validation logic alone. This divide-and-conquer approach makes it easier to find errors in AI-generated code, which might be scattered across components.

Essential Tip 6: Utilize Lovable’s Chat Mode for Debugging Assistance – Lovable AI provides a Chat Mode where you can converse with the AI about your app’s state. Highlight using Chat Mode to ask the AI what might be wrong, or to get a summary of recent code changes and attempted fixes. For instance, ask “The screen is blank, what did the last change do?” The AI can often explain what it tried and suggest next steps. This interactive debugging is a unique advantage of Lovable AI.

Essential Tip 7: Leverage External Debugging Tools – Suggest using additional tools alongside Lovable. For UI issues, browser DevTools are invaluable (inspect elements, view console errors). If Lovable’s AI is stuck, developers might copy the code into other AI coding assistants or IDEs (e.g. VS Code with Copilot, or use ChatGPT/Claude) to get a second opinion. External testing frameworks or linters can also catch issues (like syntax errors or unused variables) that the AI missed.

Essential Tip 8: Collaborate and Seek Community Help – Emphasize not going it alone. If a bug is persistent, turn to the Lovable community forums or Discord. Lovable has a growing user community (~500,000 developers) ready to share insights. Explain how sharing your issue (with context, error logs, screenshots) can tap into collective expertise – often someone has faced a similar problem and can offer guidance or workarounds.

Essential Tip 9: Implement Manual Reviews and Testing – Remind readers that AI-generated code still needs human oversight. Adopt robust code review practices: read through the AI’s code to catch obvious mistakes or dangerous logic. Write test cases or at least manually test the feature across different scenarios (different user roles, inputs, browsers). Never assume AI code is perfect – verifying functionality and security is the developer’s responsibility. This also includes checking for hidden dependencies the AI introduced or any code duplication that could cause maintainability issues.

Essential Tip 10: Iterate, Learn, and Document Fixes – Encourage an optimistic mindset: each debugging session is a chance to improve. When the AI fixes a bug after refinement, note what worked. Keep a log of tricky issues and their solutions. Over time, you’ll recognize patterns in AI mistakes (e.g. forgetting edge cases, or using deprecated methods) and anticipate them. Debugging AI-generated code is an iterative process – multiple prompt refinements and small manual tweaks might be needed. With each iteration, developers gain experience and the AI’s outputs improve.

FAQs – Address common questions readers might have about debugging AI-generated code in Lovable (listed in the FAQ section below, such as handling persistent bugs, ensuring code quality, etc.).

Conclusion – Summarize the key takeaways. Reinforce that while AI (like Lovable) accelerates development, strong debugging practices are essential for success. End on an encouraging note about mastering this new skill with experience and the right tools.

Introduction

AI coding platforms like Lovable AI allow creators to build apps without traditional programming – simply by describing features, the AI generates the code. Lovable uses AI to generate applications based on user prompts, making it accessible to non-developers. It’s fast and fun, until something goes wrong. Errors or bizarre behaviors in AI-generated code can and do happen. That’s why Debugging AI-Generated Code with Lovable AI is such an essential skill. In fact, industry reports show that developers often spend significant time troubleshooting AI-written code. According to the State of Software Delivery 2025 report by Harness, 70% of developers now spend more time debugging AI-generated code than they gain in speed. The bottom line: AI can accelerate development, but it doesn’t eliminate bugs.

Debugging in a Lovable AI project requires a mix of traditional problem-solving and new, AI-specific strategies. On one hand, you’ll tackle issues much like any software project – identifying errors, isolating problematic components, and testing fixes. On the other hand, you have an AI assistant at your disposal. Lovable AI’s built-in debugging features (like the “Try to Fix” button and interactive chat) can themselves help diagnose and solve issues. This guide brings you essential tips – from quick fixes to advanced tactics – to efficiently debug AI-generated code on Lovable. By applying these tips, you’ll not only squash the current bug but also learn to collaborate with the AI, turning troubleshooting into a learning opportunity.

Let’s dive into why AI-generated code behaves the way it does, what common issues to watch out for, and how to resolve them step-by-step. Whether you’re a newcomer building your first app with Lovable or an experienced developer integrating AI into your workflow, these tips will help you debug with confidence and keep your project on track. Lovable AI is designed to be accessible to non-technical users, minimizing the need for advanced coding skills and making app development approachable for everyone.

Understanding AI-Generated Code and Its Challenges

AI-generated code refers to source code produced by an artificial intelligence, typically a large language model (LLM) integrated into development tools. In the context of Lovable AI, you describe what you want (for example, “a user login page with email authentication and profile dashboard”), and the platform’s AI writes the underlying code to implement your request. This approach, often called “vibe coding,” lets you focus on what the app should do rather than how to code it.

However, AI-written code comes with its own set of challenges:

- Unfamiliar Patterns: The AI might use frameworks or coding patterns that you, the user, didn’t anticipate. For instance, it may introduce a library or a method you haven’t seen before, or add new elements to your tech stack. This makes debugging tricky, since you first have to understand what the AI did, possibly with technologies you don’t know.

- Lack of Context: An AI doesn’t truly understand the project’s bigger picture beyond what you’ve told it. If your prompts were incomplete, the generated code may have gaps or misinterpretations. It could wire things together in a non-optimal way, leading to logical errors.

- Technical Debt and Redundancy: AI tends to be overly verbose and may duplicate code. It doesn’t always follow the “Don’t Repeat Yourself” (DRY) principle. Studies have observed AI coding tools causing an explosion of duplicate code and decreasing maintainability. Over time, this technical debt can spawn bugs or make fixes harder since one change might need to be repeated in multiple places.

- Edge Cases and Error Handling: AI might not anticipate all the edge cases a seasoned developer would. It often generates the “happy path” – code that works for expected inputs – but neglects uncommon scenarios or inputs that break things. For example, the AI might not handle a null value or a network failure unless you explicitly prompted for it. AI-generated code may also struggle with complex logic, especially in multi-step or conditional workflows, which can lead to missed edge cases or faulty error handling.

- Integration Issues: In a platform like Lovable, your app might integrate with external services (databases like Supabase, APIs, authentication providers, etc.). An AI can wire up these integrations, but if misconfigured (e.g. wrong API endpoint or missing database table), you’ll get runtime errors. Lovable AI can act as a coding assistant, but users should be aware of the potential for complex workflows that require careful debugging. Debugging these requires checking both the AI-generated code and the external service settings.
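To make the “happy path” point concrete, here is a minimal TypeScript sketch. The function names (`averageHappyPath`, `averageSafe`) and the ratings scenario are hypothetical and not taken from any Lovable-generated project; they only illustrate the kind of gap to look for when reviewing AI output.

```typescript
// Happy-path version: fine for a non-empty array and nothing else.
function averageHappyPath(ratings: number[]): number {
  const total = ratings.reduce((sum, r) => sum + r, 0);
  // Yields NaN for an empty array, and throws at runtime if ratings is
  // actually undefined (for example, data that has not loaded yet).
  return total / ratings.length;
}

// Defensive version: treats missing or empty input as "no rating yet".
function averageSafe(ratings: number[] | null | undefined): number | null {
  if (!ratings || ratings.length === 0) {
    return null;
  }
  const total = ratings.reduce((sum, r) => sum + r, 0);
  return total / ratings.length;
}
```

If the generated code resembles the first function, one option is to prompt for the missing cases explicitly (“handle empty and not-yet-loaded data”) rather than patching each crash as it appears.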

Understanding these challenges is the first step. It sets expectations that debugging is a normal part of the AI development process. As one expert aptly put it, AI can generate functional code quickly “but that’s not what makes software better” – quality still requires human oversight. In the next sections, we’ll explore how Lovable AI works and then move into tips to tackle these issues head-on.

Overview of the Lovable AI Platform

Lovable AI is an AI-powered no-code/low-code platform designed to help users build web applications through natural language prompts. Think of it as having an AI co-pilot for development: you describe features or fixes in plain English, and Lovable generates or updates the code accordingly. Users can describe their app ideas through a chat interface, allowing the AI to transform the descriptions into real applications. Users can focus on building apps and even create internal tools for their organizations without traditional coding. Key aspects of Lovable AI’s development workflow include:

- Prompt-Based Development: You start by telling Lovable what you want to build. This could be high-level (“a task management app with user login and realtime updates”) and then refined into specifics. Lovable handles creating the UI components, database interactions, and so on, guided by these prompts.

- Iterative “Vibe Coding” Process: Building with Lovable is interactive. You add features in phases – e.g., layout first, then functionality, then polish – with the AI implementing each step. Using step-by-step prompting is more effective than assigning multiple tasks simultaneously. The platform encourages a workflow of implementing features, reviewing them, and then refining or adding more. Users can chain multiple prompts together to build more sophisticated features and workflows.

- AI-Assisted Debugging: Recognizing that errors will occur, Lovable includes tools to help fix them. The “Try to Fix” button is one such tool – when your app hits an error or fails to compile, this button engages the AI to analyze the issue and attempt an automatic correction. There’s also a Chat Mode, which allows you to switch from build mode to a chat interface with the AI. In Chat Mode, you can ask the AI questions about your project’s state or request guidance without immediately altering the code.

- Visual Editing and Logs: Lovable provides a visual editor for layout tweaks and an integrated console/log output. If something goes wrong, the log will often show error messages or stack traces similar to what you’d see in a traditional development environment, helping you pinpoint issues. Lovable AI's structured prompting system gives users increasing creative freedom as they progress from training wheels prompting to more advanced techniques.

- Community and Knowledge Base: Because Lovable is relatively new, there’s an active community of makers and a set of documentation with tips. The platform even has a Knowledge File feature – essentially a persistent set of project notes you can maintain to give the AI more context each time it works on your app. This can include your project description, important requirements, or known constraints. Lovable AI also offers a prompt library, allowing users to store and reuse effective prompts across projects.

By combining these elements, Lovable AI aims to streamline development. But importantly, it doesn’t remove you (the developer) from the loop. You still guide the process, review the AI’s output, and intervene when things go awry. In fact, Lovable’s documentation emphasizes that debugging with an AI assistant is a new skill you develop with practice. The upcoming tips will focus on leveraging Lovable’s features alongside traditional debugging know-how to resolve issues effectively.

Why Debugging AI-Generated Code with Lovable AI is Important

It’s tempting to think that once you have an AI writing code, the hard part is over. Yet, experienced users of Lovable (and other AI code tools) will tell you that debugging is still absolutely critical. There are several reasons why investing time in debugging AI-generated code pays off:

- Ensuring Functional Correctness: AI might generate code that runs, but does it do exactly what you intended? Often, small misinterpretations of your prompt can lead to features that partially work or behave incorrectly. Debugging verifies that the app meets the requirements you had in mind.

- Preventing Snowballing Errors: A minor bug, if left unresolved, can cascade into larger problems as you add more features. A mis-configured authentication flow or a flawed database schema could cause multiple features to break. Catching and fixing these early through debugging saves enormous time down the line.

- Learning and Improvement: Each debugging session is an opportunity to learn more about both your application and how the AI thinks. For example, if you notice Lovable consistently makes a certain mistake (say, forgetting to update state after an API call), you can adjust your future prompts or project structure to preempt it. Over time, you get better at prompt engineering and planning, which leads to more reliable and debuggable AI-generated code. Essentially, debugging is part of the feedback loop that makes you a better AI-assisted developer.

- Quality and Maintainability: As highlighted earlier, careless use of AI can lead to poor code quality (duplication, technical debt). By debugging and reviewing code, you enforce quality standards. You might refactor the AI’s output – deduplicating code or improving naming – which not only fixes the immediate bug but also makes the codebase easier to maintain and less prone to future bugs. Using the same prompt for similar features helps ensure consistent outputs and reduces unexpected bugs.

- Trust and Confidence: If you’re building something serious (an app for clients or a product for users), you need confidence that the AI-generated code is reliable. Debugging is how you gain that confidence. Knowing that you have tested and fixed the application thoroughly means you can trust it in production. This is crucial because AI can sometimes produce subtle bugs that aren’t immediately obvious without testing (like a security hole or a performance issue). Implementing security measures during debugging is essential to protect user data and app integrity.

Finally, let’s talk scale: when building complex projects, the amount of AI-written code can be substantial. A Harness report noted that while AI speeds up coding, it can decrease delivery stability if not managed, meaning more time spent in debugging and maintenance. In other words, the faster you go, the more important it is to steer carefully. Debugging is the steering mechanism that keeps your high-speed AI development on the road and heading in the right direction.

In summary, debugging AI-generated code with Lovable AI matters because it protects the quality of your project, accelerates your learning, and ensures that all that speedy development actually results in a working, reliable application.

Common Issues in AI-Generated Code

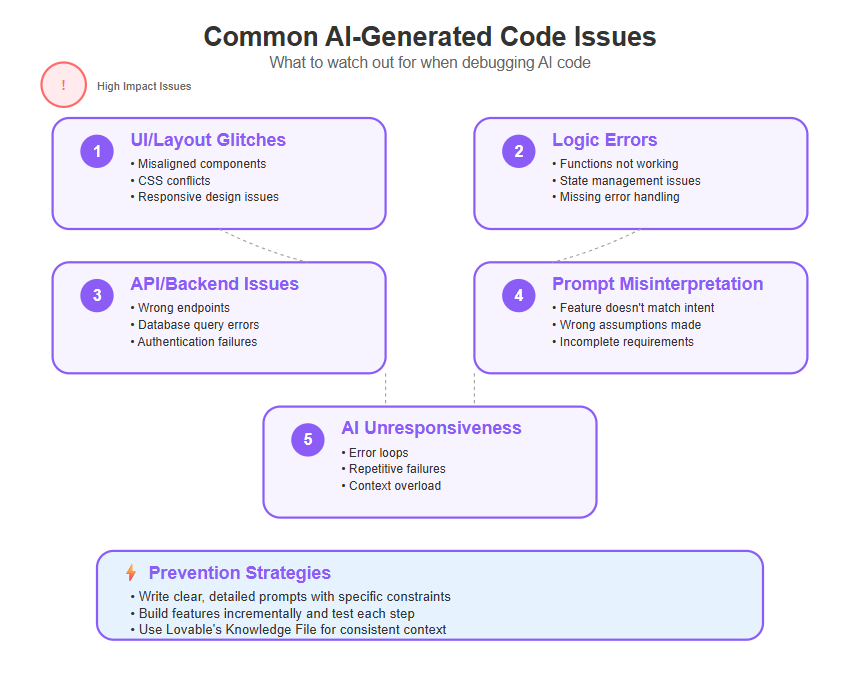

When using Lovable AI or similar AI coding assistants, certain types of issues tend to come up repeatedly. Being aware of these common trouble spots will help you recognize and address them quickly:

- UI or Layout Glitches: Perhaps a button isn’t visible, or a form is badly aligned. These visual issues often happen if the AI misinterprets the desired design or if CSS styles conflict. For example, an AI might place a component inside the wrong container, causing a layout break. Lovable offers a visual debug mode to outline component boundaries, which can be very handy in spotting such problems. The solution might be as simple as adjusting a style or moving an element in the visual editor.

- Functionality Errors (Logic Bugs): These are cases where clicking a button does nothing, or a feature doesn’t work as intended. They can be due to JavaScript errors, wrong conditions, or missing updates in state. Because AI sometimes overlooks certain logic, you might find that, say, a form doesn’t validate input or a login function doesn’t set the auth token properly. Checking the console logs and Lovable’s logs is crucial here: you might see an error like “TypeError: cannot read property ‘X’ of undefined,” indicating the AI tried to use something that wasn’t there.

- API or Backend Integration Issues: If your app connects to an API or a database (like Supabase), issues can arise from misconfigured endpoints, wrong database queries, or missing security rules. For instance, the AI might generate code expecting a certain database table or response format that doesn’t match reality. These issues manifest as error messages in the logs (e.g., 404 errors, database foreign key violations) and often require checking both the AI code and the external service’s configuration. Debugging may also involve verifying the correctness of API calls and ensuring that cloud functions are properly configured to handle event-driven tasks or integrate with external services. The platform allows users to implement features like user authentication and data persistence seamlessly through prompts.

- Prompt Misinterpretations: Sometimes the bug isn’t a “code error” per se, but the feature just doesn’t do what you envisioned. This often traces back to the prompt. If you said “implement user profiles” and the AI made some assumption that doesn’t match your needs (like it created usernames but you wanted email addresses as IDs), you have a functional mismatch. The AI technically did something, but it wasn’t what you meant. These situations require refining your instructions and possibly re-generating that part of the app with clearer guidance.

- AI Unresponsiveness or Confusion: On occasion, the AI might get stuck in a loop of trying to fix something and failing. Lovable’s docs call these “error loops”. You might hit “Try to Fix” repeatedly only to oscillate between two states, or the AI’s chat replies start to become repetitive without solving the core issue. This can happen if the project state is complex or the AI’s context window is overloaded. The fix might be to take a step back (stop the loop, revert to a stable version) and, after a short break, try a different approach or a more detailed prompt. In a worst-case scenario, you might need to seek a fresh perspective (like asking in the community or using a different AI) if Lovable’s AI gets too tangled. Sometimes, manual intervention is required, such as using the edit code feature to directly address persistent bugs or make necessary changes in the codebase.

By knowing these common issues, you can more quickly categorize a problem when it occurs. Is it primarily a visual bug, a logic bug, an integration bug, or a miscommunication with the AI? Once you categorize, you can apply the appropriate debugging tactics. In the next section, we’ll start covering those tactics – the essential tips to actually solve these problems and prevent new ones.
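For the integration case in particular, where generated code expects a response format that doesn’t match reality, a small runtime check can turn a confusing UI crash into a readable message. The sketch below is one possible approach, assuming a hypothetical `TodoRow` shape; the field names and the `describeShapeProblems` helper are illustrative and not part of Lovable or Supabase.

```typescript
// Hypothetical row shape the generated UI expects; your table will differ.
interface TodoRow {
  id: number;
  title: string;
  done: boolean;
}

// Type guard: checks one unknown value against the expected shape at runtime.
function isTodoRow(value: unknown): value is TodoRow {
  if (typeof value !== "object" || value === null) return false;
  const row = value as Record<string, unknown>;
  return (
    typeof row["id"] === "number" &&
    typeof row["title"] === "string" &&
    typeof row["done"] === "boolean"
  );
}

// Lists readable problems instead of letting the mismatch fail deep inside the UI.
function describeShapeProblems(payload: unknown): string[] {
  if (!Array.isArray(payload)) {
    return ["Expected an array of rows, got " + typeof payload];
  }
  const problems: string[] = [];
  payload.forEach((item, index) => {
    if (!isTodoRow(item)) {
      problems.push(`Row ${index} does not match { id, title, done }`);
    }
  });
  return problems;
}
```

Logging the result of a check like this next to the raw response makes it easier to tell whether the bug is in the generated code or in the external service’s configuration.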

Essential Tip 1: Reproduce and Document the Problem

The very first step in any debugging journey is to clearly identify what’s wrong. When you encounter a bug in your Lovable AI-generated app, take a moment to reproduce it reliably and document the details:

- Reproduce Step-by-Step: Try to consistently trigger the issue. For instance, if a page crashes when you click “Save”, note exactly how you got there. Does it happen every time you click save, or only after certain inputs? Does it require a specific user account or data state? Consistency is key – it’s hard to fix a bug that you can’t pin down.

- Write Down Observations: Keep notes on what you see. What exactly is the error message (if any)? Which screen or component has the problem? What did you expect to happen versus what happened instead? This might feel tedious, but it’s invaluable. Even if you’re the only developer, writing it out can clarify your thinking. And if you seek help later (from the community or colleagues), having a clear description will save time.

- Check Multiple Environments: If possible, see if the bug appears in different contexts. For example, if you’re testing on a desktop browser, what about mobile? Or if you have staging vs production (for advanced users who exported code), does it happen in both? This can determine if it’s an environment-specific issue.

- Screenshots and Videos: A picture is worth a thousand words. Capture screenshots of error dialogs or misaligned UI elements. Lovable’s interface may allow you to download logs or copy error text – do that as well. If the issue is dynamic (like an animation glitch), a short screen recording can be extremely helpful. In Lovable’s troubleshooting advice, they suggest using images to clarify what went wrong, because it helps both you and any AI assistant (or human helper) to see the problem.

- Minimal Example (if applicable): Sometimes you might reduce the problem to a simpler test. For instance, if a complex page is crashing, you could create a new page in Lovable with just that component to see if the issue still occurs in isolation. This isn’t always easy in no-code environments, but the principle of isolating the bug is useful.

By meticulously documenting the bug, you accomplish two things: (1) you ensure you understand it well enough, and (2) you create a reference that can be used in the subsequent debugging steps. For example, when you use Lovable’s Chat Mode to ask the AI for help, you can describe the problem using the details you’ve gathered: “After clicking ‘Save’ on the profile page, the app shows a blank screen instead of confirmation. I expected a success message. The console log says TypeError: profileData is undefined.” This specific description will enable the AI (or any human) to give a far more targeted solution than a vague “something is wrong.”
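If you want to keep these notes in a consistent shape, the checklist above can be captured in a small structure. The following is only a sketch: the `BugReport` fields and the `formatBugReport` helper are hypothetical names chosen for this illustration, not a Lovable feature.

```typescript
// Fields mirror the checklist above; the names are illustrative.
interface BugReport {
  summary: string;
  stepsToReproduce: string[];
  expected: string;
  actual: string;
  errorText?: string;
}

// Turns a report into plain text suitable for pasting into a chat or forum post.
function formatBugReport(report: BugReport): string {
  const lines: string[] = [`Summary: ${report.summary}`, "Steps to reproduce:"];
  report.stepsToReproduce.forEach((step, i) => {
    lines.push(`  ${i + 1}. ${step}`);
  });
  lines.push(`Expected: ${report.expected}`);
  lines.push(`Actual: ${report.actual}`);
  if (report.errorText) {
    lines.push(`Error: ${report.errorText}`);
  }
  return lines.join("\n");
}

// Example using the profile-page bug described above.
const exampleReport = formatBugReport({
  summary: "Blank screen after saving profile",
  stepsToReproduce: ["Open the profile page", "Click Save"],
  expected: "A success message",
  actual: "A blank screen",
  errorText: "TypeError: profileData is undefined",
});
```

The same text works equally well as a Chat Mode message or a community post, which is the point of writing it down once.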

In summary, never skip the reproduce-and-record phase. It’s tempting to dive straight into trying random fixes when you see an error, but taking a disciplined approach at the start saves time overall. Clear documentation is the foundation of effective debugging.

Additionally, keeping track of upcoming debugging tasks, such as unresolved issues or scheduled fixes, helps you prioritize your work and set reminders for tasks approaching their deadlines.

Essential Tip 2: Use Lovable’s “Try to Fix” Feature First

One of the perks of using Lovable AI is that it can sometimes fix its own mistakes. Whenever you hit a snag, your fastest first move is to click the “Try to Fix” button in Lovable’s editor. Here’s how and when to use this feature effectively:

- Immediate Error Handling: Suppose you attempt to run your app (or a specific action in the app) and you get an error message in the logs – perhaps a red error banner or a stack trace indicating something went wrong. Lovable often surfaces a prompt like “An error occurred. Try to Fix?”. By clicking that, you let the AI have a go at solving the issue.

- How It Works: Under the hood, Lovable will scan your project’s logs and recent code changes to identify the issue, then generate a tailored fix for it. For example, if the error was a missing variable or a typo in a function name, the AI might spot that from the error message and correct the code automatically. This is akin to having a pair programmer quickly review your last change.

- Quick Wins: “Try to Fix” is great for simple mistakes or oversights. If the AI accidentally introduced a small bug – such as a syntax error, a forgotten null check, or a minor compatibility issue – it can often patch it within seconds. This saves you from diving into code yourself for trivial fixes.

- When It Fails: Of course, not all issues can be fixed in one click. If the underlying problem is complex or stems from ambiguous requirements, the AI might not succeed immediately. Lovable’s docs note that if the first quick fix doesn’t work, that’s when you’ll need to dig deeper. You might try it once; if the error persists or changes to a different error, avoid spamming “Try to Fix” repeatedly in frustration. At that point, move on to more in-depth debugging steps (which we cover in the coming tips).

- Learn from the Fixes: When “Try to Fix” does solve the issue, take a moment to see what it changed. This is a valuable learning opportunity. Maybe it added a check like if (!profileData) return; to prevent a crash – which teaches you that such a null scenario was possible. Or it corrected a function call, indicating you might have referenced something incorrectly in your prompt. Understanding the fix can help you avoid similar errors later, and it builds trust in using the AI’s automated assistance wisely.
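As an illustration of what such a fix can look like, here is a before-and-after sketch of the null-check case. The `ProfileData` shape and both function names are hypothetical, and the “after” version returns a fallback message rather than the bare `return;` mentioned above so that the difference is easy to observe.

```typescript
// Hypothetical profile shape used only for this illustration.
interface ProfileData {
  firstName: string;
}

// Before the fix: throws a TypeError when profileData is undefined.
function saveMessageBefore(profileData?: ProfileData): string {
  return `Saved profile for ${profileData!.firstName}`;
}

// After the fix: an early return covers the missing-data case.
function saveMessageAfter(profileData?: ProfileData): string {
  if (!profileData) return "Nothing to save yet";
  return `Saved profile for ${profileData.firstName}`;
}
```

Reading a diff like this tells you more than that the crash is gone: it shows that the component can render before its data arrives, which is worth mentioning in future prompts.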

In short, Tip 2 is all about leveraging the AI’s speed. Always attempt the easy fix first – Lovable’s built-in fixer – before you roll up your sleeves for deeper investigation. You’d be surprised how often this simple step gets you unstuck. It’s like hitting the “auto-correct” for code: not foolproof, but very handy for minor glitches.

Essential Tip 3: Analyze Logs and Error Messages

When an AI-generated app misbehaves, it will usually leave clues. Those clues live in logs, error messages, and the app’s runtime behavior. Reading and interpreting these signals is a fundamental debugging skill that remains just as important in Lovable AI projects as in traditional coding.

Here’s how to make the most of the information available:

- Lovable’s Logs: The platform provides a log output (often at the bottom or side of the editor) when you run or preview your app. This is your first stop when something goes wrong. Look for any red error text or warnings. For example, you might see an error like:

Error: Cannot read property 'length' of undefined at line 42 in TodoList.js

This tells you exactly what went wrong and where. In this case, perhaps the AI tried to access someArray.length before initializing someArray. Knowing the error location guides you to which component or function needs attention.

- Browser Console: In addition to Lovable’s own logs, it’s useful to open your browser’s developer console (usually by pressing F12 or right-click “Inspect”). The console might show errors, especially for front-end issues (like React errors, network call failures, CORS issues, etc.) that Lovable’s editor might not fully capture. For example, a CORS error (cross-origin resource sharing) could happen if your AI-generated code calls an API that isn’t set up for requests from your domain. The browser console would show that clearly, whereas Lovable might only show a generic network error.

- Network and DevTools: If your bug is related to data (e.g., “the app isn’t saving the form data”), use the Network tab in browser DevTools. It will show if an API request was sent, what the response was, or if it failed altogether. You can catch issues like a 404 endpoint or a 500 server error. This is crucial because the AI might have used the wrong URL or payload format when calling an API.

- UI Debugging Aids: For layout issues, Lovable has a “device preview mode” and possibly a “visual debug mode” that highlight component boundaries. Using these, you can identify if an element is perhaps there but just too small or off-screen due to CSS. Also check if styles are conflicting – sometimes an AI might inadvertently apply a style (like a fixed width or positioning) that breaks the responsive layout.

- Logs for Backend (if applicable): If your Lovable app uses a backend like Supabase (common with Lovable projects), don’t forget to check the backend’s logs. A failure to fetch or save data could be due to a database rule error (e.g., a row insertion was denied by a policy) which might appear in Supabase logs or not at all on the front-end. Lovable’s error might just say “500 Internal Server Error”. In such cases, viewing the backend logs or debug info provides the missing context – perhaps a foreign key violation or an auth permission issue.

- Interpret and Hypothesize: Once you gather error info, interpret it. Ask yourself, “Why would this error occur?” If the error says something is undefined, consider why the AI’s code didn’t define it – was it supposed to come from props or state? If you see a UI glitch, think “Which part of my prompt or code controls this element’s layout?” This analysis often reveals either a flaw in the AI’s logic or an oversight in your instructions. For example, a log might reveal an AI misnamed a variable (thus it’s undefined) – a quick fix is to correct that name in code or prompt the AI to fix it.
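
To make the “undefined” case above concrete, here is a minimal TypeScript sketch. The names `ReportProps`, `countItemsUnsafe`, and `countItemsSafe` are hypothetical and do not come from Lovable; the code only illustrates the kind of guard you might add yourself or ask the AI to add.

```typescript
// Hypothetical props for a component. `items` may be missing if the parent
// never passed it or the data has not loaded yet.
interface ReportProps {
  items?: string[];
}

// Unsafe version: assumes `items` is always there, so it throws a TypeError
// when `items` is undefined.
function countItemsUnsafe(props: ReportProps): number {
  return (props.items as string[]).length;
}

// Safer version: optional chaining plus a default covers the "not loaded yet" case.
function countItemsSafe(props: ReportProps): number {
  return props.items?.length ?? 0;
}

console.log(countItemsSafe({}));                    // no items yet
console.log(countItemsSafe({ items: ["a", "b"] })); // items present
```

If the log points at a line like the unsafe one, a prompt such as “handle the case where items has not loaded yet” may be more productive than a generic “fix the error”.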

Remember, error messages are your friends. They may look cryptic at first, especially if you’re not used to reading them, but they’re essentially the program telling you what it doesn’t like. In the context of Lovable AI, these messages bridge the gap between human intention and AI implementation. By paying close attention to them, you equip yourself to direct the AI on what needs fixing, or to make the fix manually if it’s straightforward.
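
One failure the Network tab reveals but generated code can hide: `fetch` does not reject for HTTP error statuses such as 404 or 500, so a failed request can look like a success unless the status is checked. The sketch below surfaces the status as an error message. `fetchJsonOrThrow`, `MinimalResponse`, and `FetchLike` are illustrative names, and the fetch function is passed in as a parameter so the example does not depend on a real endpoint.

```typescript
// Minimal view of a fetch response; the browser's Response object satisfies it.
interface MinimalResponse {
  ok: boolean;
  status: number;
  statusText: string;
  json(): Promise<unknown>;
}

// Any function that takes a URL and resolves to a response-like object.
type FetchLike = (url: string) => Promise<MinimalResponse>;

// Throws a descriptive error for non-2xx responses instead of silently
// continuing with an error body.
async function fetchJsonOrThrow(url: string, fetchImpl: FetchLike): Promise<unknown> {
  const res = await fetchImpl(url);
  if (!res.ok) {
    throw new Error(`Request to ${url} failed: ${res.status} ${res.statusText}`);
  }
  return res.json();
}
```

In a browser you could call it as `fetchJsonOrThrow("/api/profile", (u) => fetch(u))`, where the URL is a placeholder. The thrown message then shows up in the console with the status code, which narrows down whether the URL, the payload, or the server is at fault.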

Essential Tip 4: Refine Your Prompts and Project Structure

Many issues in AI-generated code can be traced back to the instructions given to the AI. If you find yourself repeatedly fixing a certain type of bug, it might be time to step back and refine your prompts or how you structure the project development. Essentially, teach the AI to do it right the next time. Here’s how:

- Be Specific in Prompts: Vague prompts yield unpredictable results. For example, instead of saying “Add a payment feature”, specify “Add a credit card payment form using Stripe API, with fields for card number, expiry, CVV, and handle errors like invalid card or network issues”. The extra details guide the AI and reduce misinterpretation. Also, explicitly mention any library or approach you expect (e.g. “use Tailwind CSS for styling” or “use OAuth for Google login”). This way, the AI won’t guess – it will follow your lead. Learning effective prompting techniques is crucial here, as mastering how you phrase and structure your prompts directly impacts the AI's output and reliability.

- Outline Step-by-Step in Prompts: Lovable’s best practice suggests structuring your prompt as a checklist of what to do. For instance:

1. Create the UI layout for the profile page.

2. Implement a form with fields X, Y, Z.

3. Connect the form to Supabase to save data.

4. Add validation for required fields.

5. Show a success message on save.

This approach ensures the AI tackles the task in a logical order and is less likely to omit something important.

- Specify What Not to Change: AI can be overzealous and might inadvertently break something while fixing another. You can add guardrail instructions in your prompt: “Do not modify the authentication component” or “Leave existing CSS untouched”. This prevents the AI from messing with code that was already working (and which might not pertain to your current issue).

- Build in a Logical Sequence: Structure your project development to avoid compounding errors. Lovable documentation recommends a logical flow: first set up basic layout, then database connections, then auth, then feature logic, etc., rather than doing everything at once. For example, if you add authentication early (before many features), the AI will integrate it more cleanly rather than slapping it onto a complex app later and possibly breaking things (as some users have experienced).

- Use the Knowledge File: Lovable’s Knowledge File acts as persistent context. Fill it with important details about your app’s domain and requirements so the AI always has that context with every prompt. For instance, note down that “Users have roles Admin and User, and certain pages are role-restricted” or “We use a global state for cart items”. This can prevent bugs where the AI “forgets” these details in later sessions.

- Repeat Key Info in Prompts: Don’t assume the AI remembers everything across prompts. It has a limited memory window. So if a particular constraint is crucial (e.g. “Admin role shouldn’t access X”), mention it again when you move to another part of the app. It might feel repetitive, but it reinforces the correct behavior and avoids context-loss bugs (like the AI hallucinating or forgetting what the app is supposed to do).

- Refactoring via Prompts: If you notice the AI’s code is messy or inefficient, you can explicitly prompt it to refactor. E.g., “Refactor the dashboard code to remove duplication and follow best practices.” There’s even a notion of asking the AI for a codebase audit. While this is advanced, Lovable’s chat could handle requests like checking if code is in the right place or if there’s a cleaner way – essentially using AI’s perspective to improve structure. Just be cautious and review whatever it refactors, as it might inadvertently change behavior.

By refining prompts and how you guide the AI, you prevent many bugs at the source. This tip is as much about preventing future headaches as it is about solving current ones. Every time you clarify something for the AI, you reduce ambiguity. And reducing ambiguity is golden because AI thrives on clarity. Over time, you’ll find that you and the AI develop a sort of “understanding” – you know how to ask it for what you need, and it produces better code that requires less debugging.

For a comprehensive, authoritative guide on crafting effective prompts, mastering prompting techniques, and optimizing your AI workflows, consult the Lovable Prompting Bible – an essential resource for anyone looking to unlock AI's full potential without technical skills.

Essential Tip 5: Isolate and Test Components

When facing a stubborn bug, one of the best classical debugging techniques applies equally to AI-generated projects: isolate the problem and test components individually. AI might generate a lot of code across various files or components, so narrowing down where the issue lies is crucial for finding the root cause.

Here’s how to do it in practice:

- Component Isolation: If your app is made of multiple pages or components (which Lovable’s apps are, under the hood, typically React components), try to isolate the one that’s failing. For example, if navigating to the “Reports” page crashes the app, focus on just that page’s component. You can temporarily remove or comment out references to the Reports page in navigation and see if the rest of the app runs. Then slowly add pieces back. This process can reveal that the bug only manifests when X component is active, confirming the culprit.

- Feature Toggle: In Lovable, you might not have code-level toggles, but you can simulate this by instructing the AI to remove or disable certain features to see if a bug still occurs. Alternatively, use a copy of the project (Lovable may allow duplicating an app) and strip it down to the minimal case that shows the error.

- Unit Testing Mindset: While you might not write formal unit tests in a no-code environment, you can apply the mindset. Test smaller parts of the functionality. For instance, if a complex form submission fails, test just the validation function alone (maybe by triggering it via some debug method or temporarily exposing it in the UI). Or test just the API call in isolation (Lovable’s chat mode can be used to invoke certain functions or at least simulate them).

- Break It Down in Chat: Another clever use of Lovable’s Chat Mode is to break the problem down. You could ask: “I suspect the error comes from the data formatting before the API call. Can we test that function alone with sample data?” The AI might help by running that function with dummy inputs and showing the result (especially if Lovable allows code execution in preview). Even if it can’t run code in chat, you could ask the AI to simulate what the function would do with a given input, essentially executing it mentally.

- Divide and Conquer for UI Glitches: For layout issues, sometimes you can isolate by removing UI elements. For example, if a page layout is broken, remove sections one by one to see when it snaps back to normal. This might involve deleting or hiding components in the editor. Once the offending element is found, focus on its code or style. Perhaps the AI applied a CSS class incorrectly or a container div is missing – details you can fix once you know where to look.

- Check State and Data Flow: Many bugs occur in the interaction between components (one passes data to another, etc.). Isolate the data flow: log or display intermediate values. You might prompt Lovable to temporarily output some values to the console for you (like “add a console.log to show the response data from API”). These “print statements” can confirm if a certain part of code is receiving the right data. If not, you’ve zeroed in on where the data goes bad.
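
The “print statement” idea from the last bullet can be sketched as a small pass-through logger. Everything here is hypothetical (`tap`, `ApiUser`, `ViewUser`, `toViewUser`, and the sample data); the point is only to show how logging a value at each hand-off reveals where the data goes bad.

```typescript
// Logs a labelled value and returns it unchanged, so it can be dropped into
// an existing expression without altering behavior.
function tap<T>(label: string, value: T): T {
  console.log(`[debug] ${label}:`, value);
  return value;
}

// Hypothetical shapes: what the API returns vs. what the UI component expects.
interface ApiUser {
  id: number;
  full_name: string;
}

interface ViewUser {
  id: number;
  displayName: string;
}

// Hypothetical formatting step between the API call and the UI.
function toViewUser(raw: ApiUser): ViewUser {
  return { id: raw.id, displayName: raw.full_name.trim() };
}

// Log the value before and after the formatting step.
const raw = tap("api response", { id: 1, full_name: "  Ada Lovelace " });
const view = tap("after formatting", toViewUser(raw));
```

If the first log looks right and the second does not, the formatting step is the suspect. If the first log is already wrong, the problem sits upstream in the API call. Remove the `tap` calls once the bug is found.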

Isolating components and testing incrementally is somewhat counterintuitive in a no-code environment, because you expect everything to “just work together.” But remember, behind the scenes, Lovable is generating code and that code executes just like any app. By mentally switching into a developer’s mode of breaking down the system, you gain clarity on the origin of the bug.

In essence, don’t tackle the whole app at once if you don’t have to. Debug piece by piece. It’s like finding a bad bulb in a string of lights – you test one bulb at a time instead of replacing the whole string. This methodical approach will often pinpoint the issue more quickly than trying random changes to the entire project.

Essential Tip 6: Utilize Lovable’s Chat Mode for Debugging Assistance

One of Lovable AI’s standout features is its interactive Chat Mode. Think of it as having the AI developer who wrote your code sitting next to you, ready to answer questions. When used wisely, Chat Mode can drastically speed up debugging by giving you insights or even solutions without manual code digging. Here’s how to leverage it:

- Ask for Explanations: If something in the code is unclear or a feature isn’t working, simply ask the AI in Chat Mode: “Can you explain how the login function works? It’s not logging users in as expected.” Because the AI knows the code it generated, it can walk through the logic with you. It might respond with an explanation of each step and possibly identify where the logic could fail. This is like instant documentation of the AI-written code, which is super valuable when debugging.

- Seek Diagnostic Help: You can use Chat Mode to diagnose issues. For example: “The page goes blank after clicking ‘Submit’. What could be causing this?” The AI will analyze the state of the project and attempted actions. It might say something like, “It looks like the Submit button triggers a state update that isn’t handled properly, possibly due to X”, guiding you where to look. In Lovable’s docs, they even suggest prompts like “Something’s off. Can you walk me through what’s happening and what you’ve tried?”. This kind of open-ended query can yield a summary of the AI’s recent attempts and missteps. Additionally, Lovable's AI can act as a code reviewer, analyzing your code and suggesting improvements during chat-based debugging sessions.

- Iterative Debugging with AI: Once you have some idea, you can iterate with the AI. “I see. Could it be that the API key is wrong? How do I check that?” The AI might then direct you to where in the settings or code the API key is and how to verify it. Essentially, you’re pair programming with the AI – except the AI also wrote the code, so it has context.

- Prompt for Solutions Carefully: It’s okay to ask the AI directly for a fix in Chat Mode: “How can I fix the form validation issue?” Often it will give you a step-by-step solution or even a code snippet. However, be cautious. Sometimes applying a solution without fully understanding can introduce new issues. It’s wise to follow up an AI-given solution with, “What exactly will that change do?” or review the changes yourself.

- Use Chat for “What-If” Scenarios: If you’re considering a change but aren’t sure, discuss it in chat. “If we switch to a different library for dates to fix this format bug, what would need to change?” The AI can outline the scope, and you can decide if it’s worth it or try a smaller tweak first.

- Getting Unstuck from Loops: If you were in an error loop with “Try to Fix,” switch to Chat Mode and break that cycle by asking “Why do we keep getting this error even after fixes?”. The AI can drop the attempt to immediately fix and instead analyze more deeply or suggest a different approach.

- Plan Debugging Steps: You can even brainstorm with the AI: “Give me 3 possible reasons the email notification isn’t sending, and how to test each.” The answer might list: (1) misconfigured API key – test by verifying API settings; (2) code not calling the email function – test by adding a log in that function; (3) the email service not enabled – test by checking service dashboard. This structured approach saves you from blind guesswork.

It’s important to maintain a clear context when chatting. If the conversation gets long or confused, consider resetting or being very explicit in your questions. For example, reference specific elements: “In PaymentPage component, after clicking pay, nothing happens. What part of the code should handle the click, and do you see any issues there?” The specificity helps the AI pinpoint relevant code.

Ultimately, Chat Mode turns debugging into a collaborative dialogue rather than a solo slog. Many developers find this feature transformative – it’s like the AI is not just a code generator but also a tutor and troubleshooter. Embrace it, ask the “dumb” questions, and you might be surprised at how effectively you and the AI can solve problems together.

Essential Tip 7: Leverage External Debugging Tools

While Lovable AI provides a rich environment, sometimes you need to step outside its box to get a fresh perspective or more powerful debugging. Don’t hesitate to use external tools when necessary:

- Browser Developer Tools: We mentioned this earlier, but it deserves emphasis. Chrome, Firefox, or Edge dev tools can inspect elements, show CSS rules, debug JavaScript, and profile performance. If your AI-generated app is running in the browser, these tools can let you set breakpoints in the code. Yes, even AI code can be debugged line-by-line! For example, if you suspect a function isn’t working, open the Sources tab, find the function (Lovable might allow viewing the transpiled code), and put a breakpoint to see if it’s reached and what the variables are. It’s a bit advanced, but extremely powerful for tricky logic bugs. You can also use browser developer tools to test your AI-generated app as a progressive web app, ensuring it installs smoothly and provides an enhanced user experience similar to a native app.

- Console and Print Statements: If stepping through code is too much, fall back to the trusty debug technique: print stuff out. Using Lovable’s environment, you might add console.log statements via Chat Mode or a manual code edit. For instance: “Add console.log(‘data’, data) at the start of the saveData function.” Then run the app and watch the console logs. This can confirm if a function runs and what data it’s handling, bridging the gap of visibility.

- Integrated Development Environments (IDEs): In some cases, you might export the code from Lovable (if that’s possible or if you use a similar AI coding tool that syncs with GitHub). Opening the project in VS Code or another IDE gives you advanced search, linting, and static analysis. Linters might catch unused variables or mismatched types. An IDE’s autocomplete and error highlighting could reveal issues (like a variable that’s used but never defined will show a squiggly line). Additionally, you could run the app locally if you have the code, which might allow running unit tests or other diagnostic tools.

- Alternative AI Assistants: Sometimes two AIs are better than one. If Lovable’s AI is stuck, you can copy a snippet of problematic code and ask another AI like ChatGPT or GitHub Copilot (through VS Code) for help. Tools like Cursor (an AI pair-programming editor) let you chat with your code and get suggestions. For example, paste the function that’s failing into ChatGPT and ask “What’s wrong with this code? It throws X error.” A different model might explain the issue in a new way or suggest a fix that Lovable didn’t. If your app includes payment features, you may also need to test the integration with your Stripe account and confirm that paid subscriptions work correctly, for example by verifying subscription product IDs and payment flows.

- Online Resources and Documentation: Traditional debugging often involves Googling error messages or reading docs. The same applies here. If your Lovable app calls a third-party API and it’s failing, read that API’s documentation to ensure the request format is correct. If an error message is unfamiliar, search the web – chances are someone else encountered it (even if not with Lovable specifically, the underlying tech might be common). Platforms like Stack Overflow or the Lovable community forum can have threads discussing similar problems.

- Profiling and Performance Tools: If the bug is about slowness or memory leaks rather than a clear error, use browser profilers or tools like Lighthouse. They can highlight inefficient code or large bundle sizes. The AI might have done something like loading a huge library for a small task, and a performance analysis could catch that.

- Version Control (Git): If you link Lovable to GitHub (which it appears to support via integration), you gain the ability to track changes. If a new bug appears, you can diff the last working commit against the current one to see what changed. Pinpointing the change this way can save debugging time – it might show the exact lines the AI added or modified when the bug was introduced.
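
For the performance bullet above, a browser profiler remains the primary tool, but a small timing wrapper can serve as a quick first check on a suspect function. The helper name `timed` and the sample workload are assumptions for illustration. `Date.now()` has millisecond resolution; in a browser, `performance.now()` offers finer granularity.

```typescript
// Runs `fn`, logs how long it took, and returns its result unchanged.
// The `finally` block ensures the timing is logged even if `fn` throws.
function timed<T>(label: string, fn: () => T): T {
  const start = Date.now();
  try {
    return fn();
  } finally {
    console.log(`[timing] ${label}: ${Date.now() - start} ms`);
  }
}

// Hypothetical workload standing in for a slow piece of generated code.
const total = timed("sum 1..1000000", () => {
  let sum = 0;
  for (let i = 1; i <= 1000000; i++) {
    sum += i;
  }
  return sum;
});
```

If one wrapped call dominates the timings, that is a reasonable place to point the profiler or to ask the AI for a more efficient approach.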

Using external tools does two things: it supplements Lovable’s capabilities and it also grounds your debugging in traditional methods. AI or not, at the end of the day, we’re dealing with software that obeys logical rules. Traditional debugging tools are designed to unravel complexity and reveal those rules in action. By combining them with the AI’s help, you get the best of both worlds.

Don’t feel that using external tools is “cheating” or negating the no-code philosophy. On the contrary, it’s being resourceful. The goal is to have a working app – how you get there is by using all the tools at your disposal, AI and non-AI alike.

Essential Tip 8: Collaborate and Seek Community Help

No developer is an island – and that holds true even when an AI is doing some of the coding. One of the strengths of modern development is the community knowledge out there. If you’re stuck debugging an AI-generated code issue, reach out to the Lovable AI community and other developers. Here’s why and how:

- Lovable Community Forums/Discord: Lovable AI, being a popular platform, has an unofficial Reddit (r/lovable) and likely official forums or a Discord channel. These are filled with users who have probably faced similar issues. By posting your problem (with the clear description you documented in Tip 1!), you tap into collective experience. Often, someone might reply, “Oh, I had the same bug last week, here’s how I fixed it.” This can save you hours of trial and error.

- Community Debugging Events: According to one source, Lovable’s community even has events like “Debug-A-Thon” where developers come together to fix issues, boasting a high success rate. Joining such events or threads can expose you to creative solutions and best practices. It’s also reassuring to know you’re not alone in encountering bugs – others have them too and they can be solved.

- Frame Your Questions Well: When you ask for help, provide context: what you’re trying to achieve, what the AI did, and what’s going wrong. Share any error messages or screenshots. The more precise your question, the more likely someone can pinpoint the answer. For example, instead of “My app doesn’t work, please help,” say “In Lovable, I built a chat app. Messages aren’t appearing for other users in real-time unless they refresh. I’m using Supabase; any idea why the subscription might not be working?” This invites targeted advice (maybe about checking realtime config or how Lovable handles sockets).

- Open Source Solutions and Templates: The Lovable community might have shared templates or projects (some sources mention “pre-debugged UI templates”). Reviewing these can be instructive. If a template has a feature similar to yours, compare how it’s implemented there versus your app. You might spot differences or missing pieces.

- General Developer Communities: If it’s a general coding issue (like a React error, a CSS bug, or an API usage question), platforms like Stack Overflow, Dev.to, or Hashnode can be useful. You don’t even have to specify that it’s AI-generated code when asking – if you have the code snippet, it behaves like any code. Just ensure you’re not sharing anything sensitive or proprietary from your app.

- Learn from Others’ Mistakes: Reading community posts proactively can also help. Someone might post “10 lessons learned debugging with Lovable AI” or share common pitfalls (for instance, a Reddit user complaining about authentication issues and getting advice on how to avoid them). These discussions can alert you to things you haven’t run into yet, so you can avoid them or be prepared.

- Give Back: As you become more proficient, try to help others in the community too. Teaching and advising is a great way to solidify your own understanding. Plus, it fosters a supportive environment where everyone benefits. Today you ask a question, tomorrow you might answer someone else’s.

Collaboration is key in debugging because a fresh set of eyes can see what you overlooked. An AI might not have fixed a bug because it’s stuck in a certain logic, whereas a human peer might immediately recognize, say, “Oh, your if-condition is reversed”. The synergy of human insight with AI assistance is powerful.

Leveraging community resources builds confidence and trustworthiness in your workflow (remember E-E-A-T: Experience, Expertise, Authoritativeness, Trustworthiness). You show that you’re cross-verifying and enriching the AI’s output with peer-reviewed knowledge, which ultimately leads to more robust solutions.

Essential Tip 9: Implement Manual Reviews and Testing

Even though we have an AI doing the heavy lifting in code generation, the responsibility for ensuring the code is solid rests with us, the creators. That means manual code review and testing are non-negotiable steps.

Why manual review? Because AI can make mistakes that only a human eye or thoughtful testing can catch. Here’s what to do:

- Read the Code (Yes, Really!): If you’re non-technical, this can be daunting, but try to read through the AI-generated code for critical features. You don’t have to understand every line deeply, but look for red flags: functions that are excessively long or complex, duplicate blocks of code (the same logic repeated in multiple places), or sections labelled “TODO” or comments the AI might have left. These can indicate areas that need attention. If you are technical, do a full code review: does the structure make sense, are there obvious inefficiencies or risky operations?

- Code Review Tools: If you have the code in GitHub, use its PR review features on the AI’s commits. This can help you comment and keep track of what needs change. It’s a bit meta – reviewing AI code as if it came from another developer – but that mindset helps maintain objectivity.

- Test, Test, Test: Execute the app and go through all flows as a user. Click every button, try to break things by entering unexpected input (like very long text, or special characters, or leaving fields blank). AI might not have accounted for those, and you’ll uncover edge case bugs (e.g., the app crashes if you submit a form with an empty optional field – maybe the AI didn’t consider that scenario).

- Cross-Device and Cross-User Testing: If your app has roles or different permission levels, test each role. If it’s a responsive web app, test on mobile vs desktop. If possible, involve another person to test concurrently (to see issues in real-time collaboration features, for instance).

- Security Checks: AI might inadvertently introduce security issues – like not sanitizing user input or hardcoding a secret. Review these aspects. Ensure that any API keys or credentials are stored properly (Lovable likely has secure storage for those). Check that restricted actions truly require proper authentication (for example, try accessing an admin page without logging in – does the app prevent it? If not, the AI missed that check).

- Performance and Load Testing: If your app is data-heavy or used by many users, simulate load or at least consider performance. AI code can be less efficient; maybe it pulls a large list from the database when you only need a subset. Such inefficiency might not be a “bug” at small scale, but can become one under load (slowness or crashes). Identify these by reviewing logic for any obvious heavy operations in loops or too-frequent network calls.

- Use Testing Frameworks (if feasible): Depending on how Lovable’s environment is set up, writing automated tests might not be straightforward. But if you’ve exported code or have a modular way to test parts (like pure functions), writing a few unit tests can catch issues. For example, if there’s a critical calculation or transformation, a simple test can ensure it works for various inputs. This isn’t always available in no-code, but it’s worth mentioning as a gold standard in debugging.

- Document Known Issues: If you discover minor bugs that are not showstoppers (or decide to fix them later), document them. Keep a log or use an issue tracker. This helps ensure they don’t get forgotten. Also, documenting weird AI behaviors (like “AI tends to reset this state on page reload, investigate later”) can be important if those quirks resurface.
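
If you have exported the code, the edge cases from the testing bullets above (blank fields, very long text, an empty optional field) can be captured as a few plain assertions on a pure function. The form shape, the limits, and the names `ProfileForm`, `validateProfile`, and `check` are all assumptions made for this sketch, not something Lovable generates by default.

```typescript
// Hypothetical form data; `bio` is optional.
interface ProfileForm {
  name: string;
  email: string;
  bio?: string;
}

// Pure validation function: returns a list of error messages (empty = valid).
// The length limits are illustrative.
function validateProfile(form: ProfileForm): string[] {
  const errors: string[] = [];
  if (form.name.trim().length === 0) errors.push("name is required");
  if (form.name.length > 100) errors.push("name is too long");
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(form.email)) errors.push("email looks invalid");
  if (form.bio !== undefined && form.bio.length > 500) errors.push("bio is too long");
  return errors;
}

// Tiny assertion helper so the sketch runs without a test framework.
function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(`Test failed: ${message}`);
}

const email = "ada@example.com";
check(validateProfile({ name: "Ada", email }).length === 0, "optional bio omitted");
check(validateProfile({ name: "Ada", email, bio: "" }).length === 0, "optional bio left empty");
check(validateProfile({ name: "   ", email }).join() === "name is required", "blank name");
check(validateProfile({ name: "x".repeat(101), email }).join() === "name is too long", "very long name");
check(validateProfile({ name: "Ada", email: "not-an-email" }).join() === "email looks invalid", "bad email");
console.log("all validation checks passed");
```

With a real test runner the `check` calls would become test cases, but even in this form they can be re-run after every AI change to confirm the validation still behaves the same.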

A strong testing and review regimen builds Trustworthiness (again referring to E-E-A-T). It shows that beyond trusting the AI, you verify everything. Users of your app ultimately won’t care if it was coded by AI or humans – they care that it works reliably and securely. By manually reviewing and testing, you are putting your stamp of approval on the AI’s work.

Yes, it’s additional effort, but think of the AI as an assistant who writes the first draft. The final responsibility of quality assurance lies with the human in the loop – that’s you. Many times you’ll find the AI did a great job, but those few times it didn’t, you’ll be very glad you checked.

Essential Tip 10: Iterate, Learn, and Document Fixes

Debugging is rarely a one-and-done affair, especially with AI in the mix. Bugs have a way of revealing deeper insights about your project and the AI’s behavior. The final tip is about iteration and continuous learning. Embrace the mindset that each fix is part of an ongoing improvement process for both your app and your skills.

Here’s how to extract maximum value from each debugging cycle:

- Apply Fixes Incrementally: When you have a potential solution, implement it and test again, rather than bundling multiple changes at once. This way, you can be confident about which change solved the problem (or if it didn’t, you know that particular attempt failed). Lovable’s approach of implementing feature by feature is in line with this – treat bug fixes the same way: one at a time, with validation in between.

- Observe AI’s Response: If you involve the AI (via Chat Mode or another prompt) in fixing a bug, watch what it does closely. You might notice patterns: for example, the AI may frequently fix a bug by doing X, but that tends to cause a minor issue Y as a side effect. Recognizing this means next time you can preempt side effect Y.

- Learn for Future Prompts: Perhaps you found that a certain phrasing in your prompt led the AI astray. Make a note of it. For instance, if saying “make it look nice” caused a heavy use of some complicated CSS that broke something, next time you’ll know to be more specific instead of using subjective terms like “nice”. Over time, your prompt engineering skills improve, and you’ll encounter fewer bugs to begin with.

- Maintain a Debug Journal: This doesn’t have to be formal, but keep a document (or even a running note) of the major bugs you fixed, what caused them, and how you fixed them. This is incredibly useful if you take a break from the project and come back later, or for anyone else who might work on the project. It’s also a personal knowledge base – reviewing it solidifies the lessons. For example, an entry might read: “Signup form bug – caused by missing state update after API call. Fixed by adding setUser(data) in the success callback. Learned: AI didn’t automatically handle state update from API response.”

- Celebrate and Reflect: When you do fix a tough bug, take a moment to appreciate it. Not only is your app working better, but you also gained experience. Debugging AI-generated code is a new frontier, and you’re on it! Reflect on what made that bug hard and how you eventually cracked it. Perhaps it was patience, or trying a different approach, or seeking help – all these are valid strategies to remember.

- Stay Optimistic and Curious: Optimism genuinely helps in debugging. AI can sometimes throw wacky problems at you, but viewing them as puzzles rather than nightmares keeps you motivated. A phrase to remember is: “Bugs aren’t failures – they’re a pathway to better code and stronger skills.” Each bug you conquer with Lovable AI makes you more proficient with the tool and more skilled as a problem solver.

- Keep Up with Updates: As Lovable AI evolves, new features or fixes might come out that change how debugging is done. Maybe a better logging system, or improved AI reasoning to avoid certain bugs. Keep an eye on release notes or community chatter about updates. Adapting your debugging approach to the tool’s evolution is important (for example, if a new “AI Debug Assistant” is introduced, you’d want to incorporate that into your workflow).
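To make the sample journal entry above concrete, here is a minimal TypeScript sketch of that kind of bug and its fix. Everything in it is an assumption for illustration: the `SignupApi` callback shape, the `createUserStore` helper (a stand-in for component state such as React's `useState`), and the fake API are hypothetical, not code that Lovable generates.

```typescript
// Hypothetical shapes for illustration only; none of these names come from Lovable.
interface User {
  id: string;
  email: string;
}

type SignupApi = (
  email: string,
  onSuccess: (data: User) => void,
  onError: (message: string) => void,
) => void;

interface UserStore {
  getUser: () => User | null;
  setUser: (next: User | null) => void;
}

// Tiny stand-in for component state (in a React app this would be useState).
function createUserStore(): UserStore {
  let user: User | null = null;
  return {
    getUser: () => user,
    setUser: (next) => {
      user = next;
    },
  };
}

// Buggy version: the API call succeeds, but the response is never stored,
// so the UI keeps behaving as if nobody signed up.
function signupBuggy(api: SignupApi, email: string, _store: UserStore): void {
  api(email, (_data) => {}, (_message) => {});
}

// Fixed version: the success callback writes the response into state.
function signupFixed(api: SignupApi, email: string, store: UserStore): void {
  api(email, (data) => store.setUser(data), (_message) => {});
}

// Fake API so the sketch runs without a backend.
const fakeSignupApi: SignupApi = (email, onSuccess, onError) => {
  if (email.includes("@")) {
    onSuccess({ id: "u1", email });
  } else {
    onError("invalid email");
  }
};
```

Running both versions against the same fake API shows the difference: after `signupBuggy`, `getUser()` is still `null`; after `signupFixed`, it returns the new user. A before/after pair like this is also a handy thing to paste into the journal entry itself.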

In essence, a list of essential debugging tips could run far beyond ten items, but if you internalize a process of iterative improvement, you’ll naturally develop more tips of your own. This tenth tip is a reminder that debugging isn’t just about the immediate fix in front of you; it’s part of a continuous cycle of development. With each iteration, both you and the AI get better: you give better instructions, and the AI (through better context and guidance) produces better outcomes.

By documenting and learning from each issue, you’re not just fixing today’s bug – you’re investing in a smoother development journey in the future. And who knows, maybe you’ll write your own blog post or guide someday, teaching others how to debug AI-generated code, armed with the wisdom you’ve accumulated.

FAQs

Q1: Why is debugging AI-generated code with Lovable AI different from traditional debugging?

A: In many ways, it’s similar – you still check logs, isolate problems, and test fixes. The difference is you have an AI partner involved. Lovable AI might introduce unconventional code patterns or minor errors that a seasoned human coder might not. On the flip side, Lovable provides AI-driven tools (like “Try to Fix” and Chat Mode) to help debug. You’re debugging with the AI’s help, which is a new dynamic. It’s important to be methodical and not assume the AI’s code is flawless. Think of the AI as an assistant who writes the first draft – you still need to review and refine that draft.

Q2: The “Try to Fix” button isn’t solving my problem. What should I do next?

A: If “Try to Fix” fails, it’s time for deeper investigation. First, read the error message and observe what changed after the AI’s fix attempt. Often, Lovable will either change nothing (meaning it couldn’t identify the issue) or change something that leads to a new error. Use that information to guide your next steps. Switch to Chat Mode and ask Lovable’s AI for insight on why the issue persists. You can also manually inspect the code around where the error occurs. Advanced tip: consider reverting to a prior version (Lovable might have a version history or you might have it on Git) and try implementing a fix step by step to see where it goes wrong. Remember, “Try to Fix” is a quick helper, not a magic wand: complex bugs will need your guidance to resolve.

Q3: How can I prevent common AI-generated bugs in the first place?

A: Great question! Preventative measures include writing clear, detailed prompts and planning your app’s structure upfront. Use Lovable’s Knowledge File to give the AI full context. Follow best practices like building features in logical order (layout → backend → auth → features), which prevents the AI from making a mess by bolting on critical components late. Also, specify constraints (e.g., what technologies to use or avoid) and explicitly mention edge cases in your instructions. Essentially, the more guidance you give the AI, the fewer assumptions it will make, and assumptions are often the cause of bugs. Finally, incremental development and testing (add one feature, test it, then add the next) will catch issues early, before they compound.

Q4: My Lovable AI project has an error loop (it keeps failing in a similar way even after fixes). How do I break out of it?

A: Error loops can be frustrating. To break the cycle, try these steps: (1) Stop hitting “fix” repeatedly – if it failed a couple of times, continuing the same approach may not help. (2) Switch to Chat Mode and have a conversation describing the loop: e.g., “Each time I fix X, Y breaks. Why might that be?” The AI might analyze the broader context and suggest a different angle. (3) Consider rolling back to the last stable version of your app (Lovable might have a revert function or you may manually undo some changes). Start reapplying changes in smaller increments to pinpoint what triggers the loop. (4) As a more drastic measure, export the project or code and try running it in a local environment – sometimes a different environment or additional debugging tools can reveal hidden issues. And of course, don’t hesitate to ask the community if they’ve encountered similar loops; they might have specific guidance if it’s a known quirk.
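Step (3) above, rolling back and reapplying changes in small increments, can be sketched as a small loop. This is only an illustration of the idea, not a Lovable feature: the `Change` interface, the `findFirstBreakingChange` helper, and the toy `AppConfig` state are all hypothetical names invented for this example.

```typescript
// A "change" is any step you re-apply after rolling back (hypothetical model).
interface Change<S> {
  label: string;
  apply: (state: S) => S;
}

// Start from a known-good state, re-apply changes one at a time, and run the
// same health check after each. Returns the label of the first change that
// fails the check, or null if every change passes.
function findFirstBreakingChange<S>(
  stable: S,
  changes: Change<S>[],
  isHealthy: (state: S) => boolean,
): string | null {
  let state = stable;
  for (const change of changes) {
    state = change.apply(state);
    if (!isHealthy(state)) {
      return change.label;
    }
  }
  return null;
}

// Toy app state: auth needs a /login route to be usable.
interface AppConfig {
  routes: string[];
  authEnabled: boolean;
}

const lastStableConfig: AppConfig = { routes: ["/"], authEnabled: false };

const reappliedChanges: Change<AppConfig>[] = [
  { label: "add dashboard", apply: (s) => ({ ...s, routes: [...s.routes, "/dashboard"] }) },
  { label: "enable auth", apply: (s) => ({ ...s, authEnabled: true }) },
  { label: "add settings", apply: (s) => ({ ...s, routes: [...s.routes, "/settings"] }) },
];

const configIsHealthy = (s: AppConfig): boolean =>
  !s.authEnabled || s.routes.includes("/login");

const culprit = findFirstBreakingChange(lastStableConfig, reappliedChanges, configIsHealthy);
```

In this toy run, `culprit` is `"enable auth"`, because auth was switched on before any `/login` route existed. In a real project the “health check” is usually you clicking through the app after each reapplied change, but the discipline is the same: one change, one check, stop at the first failure.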

Q5: How do I ensure the AI’s fixes or code changes don’t introduce new bugs elsewhere?

A: This is a classic challenge known as regression. The best defense is comprehensive testing after each significant change. Whenever Lovable’s AI fixes something, run through all the main features of your app again, not just the scenario that was broken. It’s a bit of work, but it catches issues early. Using source control (Git) helps because you can review exactly what changed in the fix and scrutinize it. You can also write down a test checklist for your app – a list of things to quickly verify (login, create data, view data, etc.). Run through that list after changes. If something new broke, you’ll spot it and can address it before moving on. Also, communicate constraints to the AI: for example, when asking for a fix, say “fix X without altering Y functionality”. While the AI might not always perfectly obey, it tends to respect direct instructions, which reduces collateral damage.
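The test checklist described above can stay on paper, but if you are comfortable with a little code, it can also be written down as a tiny script. The following TypeScript sketch assumes a hypothetical in-memory `createFakeApp` stand-in and a hypothetical `runChecklist` helper; in a real project each check would exercise your actual app instead.

```typescript
interface Check {
  name: string;
  run: () => boolean;
}

interface CheckResult {
  name: string;
  passed: boolean;
  detail?: string;
}

// Run every check, even if an earlier one fails or throws, so a single
// regression does not hide the others.
function runChecklist(checks: Check[]): CheckResult[] {
  return checks.map(({ name, run }) => {
    try {
      return { name, passed: run() };
    } catch (err) {
      const detail = err instanceof Error ? err.message : String(err);
      return { name, passed: false, detail };
    }
  });
}

// Hypothetical in-memory stand-in for an app, used only to make the sketch runnable.
function createFakeApp() {
  const items: string[] = [];
  let loggedIn = false;
  return {
    login: (password: string): boolean => {
      loggedIn = password === "secret";
      return loggedIn;
    },
    createItem: (title: string): boolean => {
      if (!loggedIn) throw new Error("not logged in");
      items.push(title);
      return true;
    },
    listItems: (): string[] => [...items],
  };
}

const demoApp = createFakeApp();
const checklistResults = runChecklist([
  { name: "login", run: () => demoApp.login("secret") },
  { name: "create data", run: () => demoApp.createItem("First note") },
  { name: "view data", run: () => demoApp.listItems().includes("First note") },
]);

// Names of any checks that regressed; an empty list means the checklist is green.
const failedChecks = checklistResults.filter((r) => !r.passed).map((r) => r.name);
```

Re-running the same list after every AI fix gives you a quick yes/no answer on regressions, and the `detail` field records why a check failed when it threw an error.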

Q6: My AI-generated code works but is quite messy/hard to understand. Should I worry about cleaning it?

A: If it ain’t broke, you might be tempted not to fix it – but maintainability is important. Messy code can hide bugs or make future changes riskier. It’s a good idea to refactor and clean up if you plan to expand or keep the project long-term. You can do this in two ways: manually or via the AI. Manually, you’d go through and simplify the code (perhaps extracting repeated code into functions, adding comments, renaming confusing variables). If you’re not comfortable doing that by hand, use Lovable’s Chat Mode to ask for a refactor. For example: “Clean up the code for the dashboard component without changing its functionality.” The AI might restructure it more clearly (always test after refactoring to ensure it still works!). Clean code will make future debugging much easier and also help others understand the project. Plus, as you clean up, you might spot latent bugs or inefficiencies to fix proactively.
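As a small illustration of “extracting repeated code into functions,” here is a before/after sketch in TypeScript. The `Order` type and the summary functions are invented for this example and are not taken from any Lovable project; the point is only that the refactored version produces the same output with the formatting logic in one place.

```typescript
interface Order {
  label: string;
  cents: number;
}

// Before: the same price-formatting logic is copy-pasted in two places.
function summaryBefore(orders: Order[]): string[] {
  const lines: string[] = [];
  for (const o of orders) {
    lines.push(o.label + ": $" + (o.cents / 100).toFixed(2));
  }
  let total = 0;
  for (const o of orders) {
    total += o.cents;
  }
  lines.push("Total: $" + (total / 100).toFixed(2));
  return lines;
}

// After: the formatting lives in one helper with a descriptive name.
function formatUsd(cents: number): string {
  return "$" + (cents / 100).toFixed(2);
}

function summaryAfter(orders: Order[]): string[] {
  const total = orders.reduce((sum, o) => sum + o.cents, 0);
  return [
    ...orders.map((o) => `${o.label}: ${formatUsd(o.cents)}`),
    `Total: ${formatUsd(total)}`,
  ];
}

const sampleOrders: Order[] = [
  { label: "Pro plan", cents: 1250 },
  { label: "Add-on", cents: 399 },
];

// A refactor should not change behavior: both versions must agree.
const refactorIsSafe =
  JSON.stringify(summaryBefore(sampleOrders)) === JSON.stringify(summaryAfter(sampleOrders));
```

Comparing old and new output on the same input, as `refactorIsSafe` does, is a cheap way to confirm that a cleanup (manual or AI-generated) left the functionality untouched.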

Q7: What if I encounter a bug that I just can’t figure out?

A: Despite all the tips, there might be times you hit a wall. Don’t get discouraged – this happens to every developer. Here are a few last-resort steps: (1) Take a break – stepping away for a few hours can refresh your perspective. (2) Rubber duck debugging – explain the problem aloud (or in writing) as if teaching someone else; this often leads to an “aha!” moment. (3) Ask for help – post the detailed issue in Lovable’s community or other developer forums. Fresh eyes can make a difference, and someone might have solved exactly what you’re facing. (4) Consider alternative approaches – maybe the bug is deeply tied to how you implemented a feature. It might be easier to approach the feature in a different way: for example, use a different library or redesign that part of the app’s flow. You can prompt the AI with “Let’s try a different approach for this feature”. (5) And if all else fails, contact Lovable’s support. There could be a chance it’s a platform bug or limitation – their team would want to know and help you if so.

These FAQs address some of the most common concerns when debugging AI-generated code with Lovable AI. Remember, every project and bug can have its nuances, but the core principles of careful observation, systematic troubleshooting, and using all available resources (human and AI) will serve you well.

Conclusion

Debugging AI-generated code with Lovable AI ultimately combines the best of both worlds: the speed and creativity of AI, and the critical thinking and intuition of human developers. By now, we’ve seen that AI accelerates development, but it doesn’t eliminate bugs – and that’s okay. With the right mindset and tools, you can turn those bugs from roadblocks into stepping stones.

Let’s recap the key takeaways:

- Embrace a Structured Approach: Start by clearly identifying problems and gathering clues (logs, errors, reproduction steps). A structured approach to debugging saves time and frustration.

- Use AI as a Partner: Leverage Lovable’s features like “Try to Fix” for quick solutions and Chat Mode for deeper insights. The AI that generated the code can often help explain or fix it – you just need to ask the right questions.

- Don’t Skip Fundamentals: Traditional debugging tools and best practices (like isolating components, printing debug info, or reviewing code diffs) are still indispensable. They ground the process and catch things that AI might miss.

- Improve Prompts and Planning: Many bugs can be prevented by giving the AI better instructions and having a solid plan. Take what you learn from each bug to refine how you communicate with the AI for the next feature.

- Test Thoroughly: Trust, but verify. Always test the AI’s output across different scenarios. This not only catches bugs but also builds your confidence in the final product’s quality.

- Continuous Learning: Every debugging session teaches you something – about Lovable, about coding, about how the AI “thinks.” Over time, you’ll develop an intuition for where AI might stumble, and you’ll preempt those issues. You’re essentially learning a new sub-discipline of development, and that’s an exciting journey.

- Stay Optimistic and Patient: Bugs can be pesky, but each one solved is a win. Instead of seeing them as setbacks, view them as part of the creative process of building software. Even seasoned developers encounter bugs daily – debugging is simply part of the craft.

By applying these essential tips, you are well on your way to becoming proficient at debugging in this AI-assisted paradigm. You’re not just fixing errors; you’re forging a collaboration with AI where you guide and mentor it to produce better and better code.

Remember, every great app – whether coded by humans, AIs, or both – is built on a heap of resolved bugs and learned lessons. So, the next time an “AI did something weird” moment occurs, you’ll know exactly how to tackle it: with structure, curiosity, and confidence.

Happy debugging, and happy building with Lovable AI!
