How AI Is Making Things Easier—But Is That Cheating? A 2025 Perspective

Key Takeaways

AI is transforming work and creativity in 2025—but success hinges on smart, ethical use that boosts productivity without cutting corners. Here’s what you need to know to harness AI effectively while keeping integrity front and center.

- AI dramatically accelerates productivity by automating routine tasks and enabling faster decision-making with low-code/no-code platforms that empower non-technical teams.

- Transparency and accountability are essential to avoid ethical pitfalls—always disclose AI assistance, apply critical human review, and ensure your unique input shines through.

- Reskilling is non-negotiable: expect 50% of employees to need new skills by 2025 to stay relevant; leverage AI-powered personalized training for smooth transitions.

- Mitigate algorithmic bias with diverse datasets, continuous audits, and fairness-aware algorithms to build trustworthy AI that serves all users equally.

- Protect privacy by design using tools like differential privacy and federated learning, plus maintain strict data governance and transparent practices to safeguard sensitive information.

- Human oversight remains critical—combine AI’s speed with expert judgment to prevent errors and uphold accountability, especially in high-risk domains.

- Use AI as a creative collaborator, not a replacement; retain authorship credit and apply your unique perspective to AI-generated content for authentic, ethical originality.

- Lead with curiosity and ethics by experimenting boldly but embedding transparency, bias audits, and human-in-the-loop systems to build AI-powered growth that’s fast and fair.

Dive into the full article to explore practical AI strategies that help your startup or SMB innovate at scale—while keeping ethical boundaries clear and your competitive edge sharp.

Introduction

What if your to-do list could shrink by half without sacrificing quality? That’s the promise AI is delivering for startups and SMBs in 2025, but it also raises a tricky question: When does AI make work easier—and when does it cross the line into cheating?

Every day, AI tools are transforming how businesses automate routine tasks, speed up decision-making, and trim development cycles through low-code platforms. As technology rapidly evolves, advancements like AI are changing the way we work and learn, requiring new approaches to digital literacy and adaptation. Imagine freeing your team from repetitive chores, gaining instant insights, and launching products faster—all without hiring more people.

But success with AI isn’t just about convenience. Used responsibly, AI is a great tool for productivity and learning, helping teams brainstorm, study, and engage more deeply with their work. It’s about striking the right balance between boosting efficiency, maintaining originality, and navigating ethical boundaries that keep your work trustworthy and authentic.

In this article, you’ll explore:

- How AI is reshaping productivity across roles and industries

- The fine line between leveraging AI assistance and risking overdependence

- Practical strategies to use AI responsibly without losing your unique voice or control

Understanding this balance is vital if you want AI to be a powerful partner, not a shortcut that undermines integrity or long-term value.

As AI tools become more accessible and integral to rapid development, having a clear ethical framework and practical know-how will help you scale smartly—making every AI-driven advantage count without compromise.

We’ll start by unpacking how AI is streamlining everyday workflows and boosting productivity in practical ways that any team can implement today.

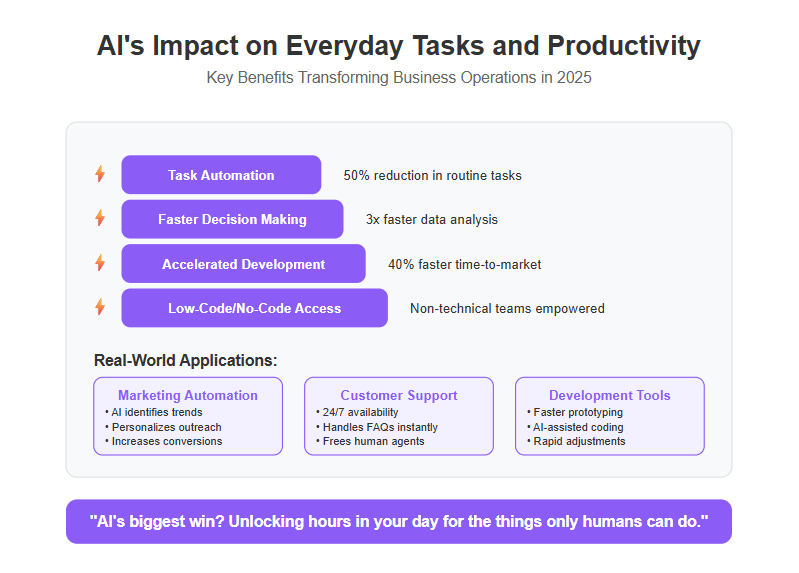

AI’s Impact on Everyday Tasks and Productivity in 2025

AI is no longer just futuristic hype—it’s actively streamlining workflows across startups, SMBs, and enterprises in 2025.

From automating repetitive chores to boosting decision-making speed, AI is accelerating how work gets done and slashing time-to-market.

Core Productivity Gains Powered by AI

Businesses are seeing clear benefits like:

- Automating routine tasks such as data entry, scheduling, and reporting

- Accelerating decisions by analyzing data instantly and suggesting options

- Cutting development cycles with low-code/no-code platforms powered by AI, opening doors for non-technical teams

Imagine a marketing manager starting their day with AI-generated campaign insights, customer support bots resolving common tickets instantly, or developers shipping apps faster using AI-assisted coding tools. These aren’t just ideas—they’re everyday realities.

Practical AI Applications Across Industries

Here are straightforward examples where AI is making life easier:

- Marketing automation: AI identifies trends and personalizes outreach to increase conversions

- Customer support bots: Ready 24/7 to handle FAQs, freeing human agents for complex cases

- Low-code platforms: Enable faster prototyping and adjustments without heavy coding

For a deeper dive, check out “7 Strategic Ways AI Is Simplifying Work and Life in 2025” — a practical guide packed with actionable tactics any team can apply.

AI: A Partner, Not a Replacement

Far from stealing jobs or creativity, AI should be seen as an empowerment tool—handling the boring stuff so you focus on strategy, innovation, and authentic human connections.

While AI can simulate aspects of human intelligence, it does not replace human thinking or creativity; instead, it complements your unique abilities.

Think of it like having a super reliable assistant who never sleeps but still leaves you in charge of the vision.

“You don’t have to do it all yourself anymore—AI lets you work smarter, not harder.”

“AI’s biggest win? Unlocking hours in your day for the things only humans can do.”

Practical, accessible AI usage is becoming the baseline for competitive SMBs and startups who want to scale without burning out.

AI’s biggest promise today is simple: freeing your time from mechanical toil to focus on what matters most. Whether you’re launching your first product or scaling fast, AI-driven productivity gains are a game-changer you can’t afford to ignore.

Navigating the Ethical Landscape: When Does AI Assistance Cross Into Cheating?

The line between legitimate AI assistance and outright cheating is fuzzier than it seems, especially in fast-moving professional and educational settings. As more students use AI to cheat, concerns about academic integrity are growing. As a rough rule, using AI for proofreading or idea generation is generally acceptable, while relying on it to produce an entire assignment is widely considered cheating. Using AI tools isn’t inherently wrong; the question is how much help becomes too much.

Defining Cheating in the Age of AI

Cheating typically means relying so heavily on AI that the output no longer reflects your own effort or originality. This can happen through:

- Submitting AI-generated content without disclosure

- Using AI to automate core intellectual tasks without review

- Passing AI work off as your own without critical input or edits

- Submitting an entire paper generated by AI as your own, which is widely considered crossing the line into cheating

Understanding this helps you stay on the right side of integrity.

Real-World Ethical Dilemmas

Picture a marketing team using AI to draft blog posts. The risk: over-dependence might dilute the brand’s unique voice.

Or imagine a student using AI to complete an assignment, such as an essay. Where does help become plagiarism? Using AI for coursework raises questions about academic integrity and about what counts as original work.

In software development, blindly accepting AI-generated code without testing can introduce bugs, and it can breach open-source license terms if the output reproduces licensed code without the required attribution.

Key Considerations

Navigating this balance boils down to:

- Dependency: Are you using AI as a tool or a crutch?

- Originality: Is your unique input evident in the final product?

- Credit: Do you acknowledge AI’s role where appropriate?

These points keep AI helpful rather than harmful.

Practical Guidelines for Ethical AI Use

To foster responsible AI adoption without killing innovation, try:

- Transparency: Always disclose AI involvement when sharing work externally.

- Review: Use AI-generated output as a draft, then apply your own critical thinking and edits.

- Policies: Set clear organizational standards for acceptable AI use, including academic integrity rules where coursework is involved.

- Education: Train teams on ethical AI usage and attribution best practices.

Key Takeaways

- AI can amplify your work—but don’t outsource your ethics.

- Disclosure and accountability build trust in AI-assisted results.

- Use AI to enhance, not replace, your unique skills and voice.

Picture this: a content creator reviews AI drafts like a trusted assistant—improving speed without losing authenticity.

Navigating AI ethics isn’t just about preventing cheating—it’s about empowering smarter, fairer collaboration between humans and machines.

Finally, always review and edit AI-generated content to ensure it accurately reflects your own ideas and personal style.

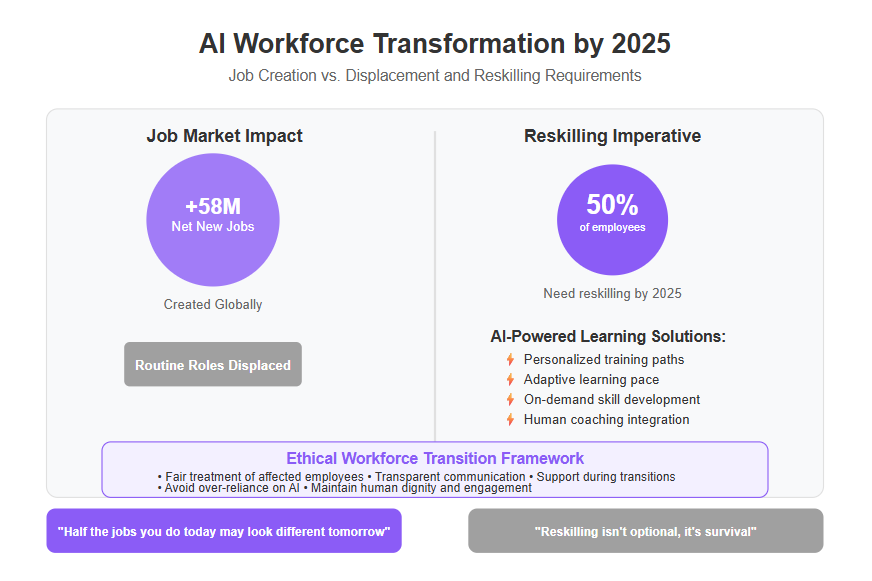

AI and Workforce Transformation: Reskilling, Job Displacement, and New Opportunities

Understanding AI-driven Job Displacement

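One widely cited projection, from the World Economic Forum’s 2018 Future of Jobs report, put the net effect of automation at 58 million new jobs globally by 2022 (133 million new roles against 75 million displaced), even as many routine, repetitive roles disappear.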

This shift means startups and SMBs face the challenge of balancing automation gains with workforce stability.

Picture a small software firm adopting AI to speed development: they gain efficiency but must thoughtfully manage changing job roles to keep their team engaged and productive.

The Reskilling Imperative

The World Economic Forum’s 2020 Future of Jobs report estimated that around 50% of employees would need reskilling by 2025 to stay competitive in this evolving AI landscape.

AI doesn’t just replace jobs—it also powers personalized learning paths that adapt training to each employee’s pace and style.

Startups and SMBs should consider these strategies to deploy reskilling without causing disruption:

- Embed AI-powered training tools for on-demand, relevant skills development

- Design modular learning programs enabling incremental skill upgrades

- Pair AI insights with human coaching for deeper engagement

These approaches speed up workforce adaptability and reduce downtime during transitions.

Ethical Responsibilities in Workforce Transition

As automation accelerates, ethical concerns around job displacement and social impact grow louder.

Leaders must embrace automation’s benefits while also addressing:

- Fair treatment of affected employees

- Transparent communication and support during transitions

- Avoidance of over-reliance on AI that may alienate or marginalize workers

The framework outlined in “Why Workplace AI Integration Is Revolutionizing Jobs Without ‘Cheating’” highlights the importance of ethical, fair AI adoption that empowers—not replaces—human roles.

Supporting your team through change isn’t just the right thing to do—it’s smart business.

AI is reshaping jobs, but it’s also creating opportunities—especially for organizations that prioritize reskilling and ethical workforce strategies.

Invest in adaptable skill-building and transparent practices now to thrive alongside AI, not get left behind.

“Half the jobs you do today may look different tomorrow; reskilling isn’t optional, it’s survival.”

“Automation is a tool—balance its power with respect for people to unlock true innovation.”

Tackling Algorithmic Bias and Ensuring Fairness in AI Systems

AI systems often inherit the biases present in their training data, leading to unfair or inaccurate outcomes in the real world. For example, a widely cited 2018 audit of commercial facial-analysis tools found error rates of up to roughly 35% for darker-skinned women, compared with under 1% for lighter-skinned men, raising serious concerns about discrimination.

Why Diverse Data and Audits Matter

To combat this, developers must prioritize:

- Diverse datasets that reflect real-world populations

- Continuous bias audits to catch issues both before and after deployment

- Ongoing vigilance, because fairness is not a one-time fix

This proactive approach helps avoid harmful errors that can alienate users and damage reputations.
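As a rough illustration of what a bias audit might compute, the sketch below compares favorable-outcome rates across groups and reports the ratio of the lowest rate to the highest. The function names, the toy records, and the 0.8 review threshold (borrowed from the "four-fifths" rule of thumb used in US hiring guidance) are assumptions for illustration, not a method this article prescribes.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the model produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical audit sample: (group label, was the candidate shortlisted?)
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

REVIEW_THRESHOLD = 0.8  # assumed rule-of-thumb cutoff, not a legal test

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

In this toy sample, group_b is shortlisted half as often as group_a, so the audit flags the model for review. A single ratio is only a starting signal; a real audit would look at several metrics and at the data behind them.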

Methods to Reduce Bias in AI

Several effective strategies are emerging:

- Fairness-aware algorithms: Designed to identify and correct biases during processing.

- Inclusive design principles: Building AI tools with diverse teams and ethical frameworks upfront.

- Regular updates and retraining: Addressing shifts in data patterns or societal changes.

Together, these tactics form a strong defense against unintentional discrimination.
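One simple fairness-aware technique is reweighing: giving each training example a weight so that group membership and outcome look statistically independent in the weighted data. The sketch below is a minimal version of that idea under stated assumptions; the group labels, outcomes, and function name are hypothetical, and real projects would typically rely on a tested fairness library instead.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Compute one weight per (group, label) combination.

    weight = P(group) * P(label) / P(group, label)
    Combinations that are under-represented relative to independence
    get weights above 1, over-represented ones get weights below 1.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    weights = {}
    for (g, y), count in pair_counts.items():
        expected = (group_counts[g] / n) * (label_counts[y] / n)
        observed = count / n
        weights[(g, y)] = expected / observed
    return weights

def weighted_positive_rate(groups, labels, weights, group):
    """Share of weighted positive labels within one group."""
    pos = sum(weights[(g, y)] for g, y in zip(groups, labels) if g == group and y == 1)
    total = sum(weights[(g, y)] for g, y in zip(groups, labels) if g == group)
    return pos / total

# Hypothetical training labels: group "a" is approved far more often than "b".
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(weights, rate_a, rate_b)
```

After reweighing, both groups show the same weighted approval rate, so a model trained with these sample weights no longer sees group membership as a shortcut to the outcome. This addresses only one source of bias and does not replace ongoing audits.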

Corporate Accountability and Regulation

Regulation is catching up fast. The EU’s Artificial Intelligence Act, which entered into force in August 2024 and phases in its obligations over the following years, requires providers of high-risk AI systems to:

- Conduct risk assessments focused on bias and fairness

- Provide documentation proving efforts to reduce discrimination

- Maintain clear accountability for AI outcomes

Meanwhile, initiatives like the Council of Europe’s 2024 Framework Convention spotlight the global push for ethical AI governance.

Visualize This:

Picture an AI hiring tool screening thousands of resumes. Without proper bias checks, it might favor certain backgrounds unfairly—costing companies diverse talent and exposing them to legal risks.

Memorable Takeaways:

- Bias in AI is a reflection, not a glitch; addressing it requires deliberate action.

- Diverse teams and datasets are essential starting points for fair AI.

- Regulatory compliance is no longer optional—it’s a business imperative.

Tackling algorithmic bias isn’t just ethics; it’s about building trustworthy AI that drives real value for your startup or SMB today and tomorrow.

Safeguarding Data Privacy and Security in an AI-Driven World

AI’s hunger for vast, sensitive datasets creates serious privacy and security challenges for businesses. Handling personal info without exposing it to risks like data breaches is critical—especially for startups and SMBs scaling fast.

Navigating Key Privacy Frameworks

Complying with laws like GDPR isn’t just legal housekeeping. It shapes how AI solutions collect, use, and store data.

- GDPR enforces strict consent rules and data minimization.

- Non-compliance risks fines of up to €20 million or 4% of annual global turnover, whichever is higher.

- It pushes companies toward transparent, customer-first data policies.

For SMBs, integrating GDPR requirements early can prevent costly retrofits and build trust.
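To make the fine ceiling concrete, here is a small worked calculation. It assumes the upper tier of GDPR administrative fines (the higher of €20 million or 4% of worldwide annual turnover); the function name and the example turnover figures are hypothetical, and actual fines are set case by case by regulators.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Ceiling for upper-tier GDPR fines.

    The cap is whichever is higher: EUR 20 million or 4% of the
    company's total worldwide annual turnover.
    """
    return max(20_000_000.0, 0.04 * annual_global_turnover_eur)

# Hypothetical companies: a small startup and a large enterprise.
startup_cap = gdpr_max_fine_eur(5_000_000)         # 4% would be 200,000, so the 20M floor applies
enterprise_cap = gdpr_max_fine_eur(2_000_000_000)  # 4% of 2 billion exceeds 20M
print(startup_cap, enterprise_cap)
```

The point for small companies is that the ceiling does not shrink with revenue: the fixed €20 million figure applies whenever 4% of turnover would be lower.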

Cutting-Edge Privacy-Preserving Tech

Modern AI isn’t just about more data—it’s about handling it smarter with privacy intact. Two game-changers:

- Differential privacy: Adds calibrated random noise to query results or model training, masking any individual’s contribution while keeping aggregate insights usable.

- Federated learning: Trains AI models across multiple devices or servers without centralized data pooling, keeping data local and secure.

These methods let AI learn and improve without exposing sensitive information—a must-have for companies wanting to innovate responsibly.
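The two techniques can be sketched in a few lines each. The example below is a minimal illustration under stated assumptions, not production-grade privacy code: `private_count` applies the Laplace mechanism to a counting query, and `federated_average` performs the weighted averaging step used in federated learning. The function names, the `epsilon` value, and the sample data are all hypothetical.

```python
import random

def private_count(values, predicate, epsilon, rng=random):
    """Differentially private count using the Laplace mechanism.

    A counting query changes by at most 1 when one person's record is
    added or removed (sensitivity 1), so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centered on zero with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

def federated_average(client_updates):
    """Combine client model weights into one global model.

    `client_updates` is a list of (weights, num_examples) pairs. Only
    these summaries leave each client; the raw records stay local.
    Clients with more examples contribute proportionally more.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(dim)
    ]

# Hypothetical data: how many customers are 40 or older?
rng = random.Random(0)
ages = [23, 35, 41, 52, 67, 29, 38]
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)

# Two hypothetical clients report locally trained weights and sample sizes.
global_weights = federated_average([
    ([0.2, 1.0], 100),
    ([0.4, 3.0], 300),
])
print(noisy, global_weights)
```

The noisy count hovers around the true value of 3 without revealing whether any one customer is in the data, and the averaged model leans toward the client with more examples. Real deployments add safeguards this sketch omits, such as privacy budget tracking and secure aggregation.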

Practical Steps for SMBs and Startups

You don’t need a massive budget to keep data safe and customers happy. Focus on:

- Establishing clear data governance policies that define who accesses what and when.

- Implementing regular security audits and updating controls frequently.

- Prioritizing transparency by clearly communicating how you collect and use data.

These simple actions can dramatically reduce risks without slowing development pace.

Building Customer Trust Through Transparency

Imagine your customer wondering, “Is my data safe here?” Being open about your AI’s data use is a direct answer that fosters loyalty.

- Share clear, understandable privacy notices.

- Offer easy options for users to control their data.

- Publicize your adherence to privacy standards and audits.

Transparency isn’t just ethical—it’s a strategic advantage in an AI-driven market.

AI’s power depends on trustworthy data practices. Startups and SMBs that prioritize privacy-preserving tech, compliance, and transparency won’t just avoid costly pitfalls—they’ll build stronger, more loyal customer relationships.

“Protecting data isn’t a checkbox—it’s the foundation of lasting innovation.”

“Smart AI respects privacy, blends security, and still sparks growth.”

“Transparency turns cautious users into lifelong advocates.”

Whether you’re launching your first SaaS or scaling globally, embedding these privacy principles is your best move to keep AI-driven growth both bold and responsible.

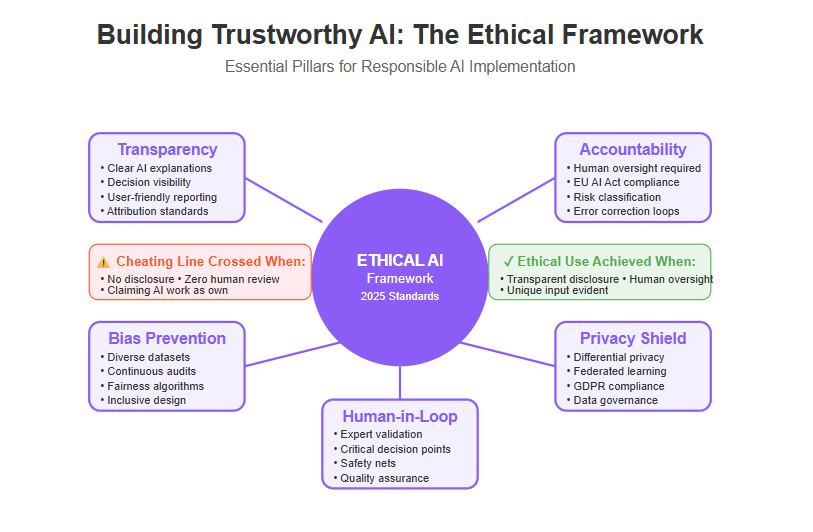

Transparency, Accountability, and Human Oversight: Building Trustworthy AI

Transparency in AI Decisions

Clear explanations of AI outputs aren’t just nice to have—they’re essential for trust among users and regulators. When AI makes recommendations or decisions, people need to understand how it arrived there.

Key ways to boost transparency include:

- Explainable AI techniques, which break down complex algorithms into understandable terms

- User-friendly reporting, offering straightforward insights tailored for non-technical audiences

Picture this: You’re using an AI tool that suggests marketing strategies. Without transparency, you’re stuck trusting a “black box.” But with clear explanation layers, you feel confident making informed moves faster.

Accountability and Regulatory Drivers

The EU’s Artificial Intelligence Act, in force since August 2024, is widely treated as a reference point for holding AI providers accountable. It mandates:

- Human oversight for high-risk AI systems to prevent blind trust in automation

- Measures to address automation bias, the tendency to over-rely on automated output

- Risk classification that guides proper AI deployment based on context

Meanwhile, global frameworks like the Council of Europe’s Convention promote ethics and governance across borders, ensuring AI respects democracy and human rights.

The Need for Human-in-the-Loop Systems

Automation is powerful but dangerous without human judgment. Combining AI with human expertise prevents costly mistakes, especially in sensitive areas like healthcare and finance.

Real-world examples show:

- Healthcare systems where doctors validate AI diagnoses, reducing errors

- Financial services using AI to spot fraud but relying on analysts for final decisions

This hybrid approach creates a safety net, blending AI's speed with human wisdom to keep operations smooth and ethical.
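One common way to build that safety net is confidence-based routing: the model decides only when it is confident and the case is low-risk, and everything else goes to a person. The sketch below assumes a hypothetical fraud-screening setup; the `Decision` type, the `route` function, and the 0.9 threshold are illustrative choices, not a standard.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    decided_by: str  # "model" or "human_review"

def route(case_id, label, confidence, high_risk, threshold=0.9):
    """Auto-accept only confident, low-risk predictions.

    High-risk cases always go to a human reviewer, regardless of how
    confident the model is. Low-confidence cases do too.
    """
    if high_risk or confidence < threshold:
        return Decision(case_id, label, confidence, "human_review")
    return Decision(case_id, label, confidence, "model")

# Hypothetical transactions scored by a fraud model.
decisions = [
    route("t1", "legitimate", 0.97, high_risk=False),
    route("t2", "fraud", 0.62, high_risk=False),
    route("t3", "legitimate", 0.99, high_risk=True),
]
for d in decisions:
    print(d.case_id, d.decided_by)
```

Only the first transaction is handled automatically. The second is too uncertain and the third is high-risk, so an analyst makes the final call on both. Recording `decided_by` also leaves an audit trail of who was accountable for each outcome.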

Transparent AI builds trust by making complex tech understandable and accountable. Pairing AI’s muscle with your judgment creates smarter, safer decisions every time. As AI keeps advancing, keeping humans in the loop isn’t just a precaution — it’s the new smart play.

“Trustworthy AI means nobody’s left guessing how decisions were made.”

“Accountability isn’t optional—it’s the foundation for ethical AI.”

“Human oversight transforms AI from a tool into a partner.”

Unlocking AI’s Potential in Education Without Encouraging Laziness

AI is transforming education by personalizing learning and automating repetitive tasks—but it walks a fine line between support and dependency. Schools and classes are rapidly adapting to the integration of AI, with kids now experiencing new learning environments shaped by these technologies.

Personalized Learning Meets Active Engagement

Modern AI tools adjust lessons based on individual skills and pace, making it easier to fill knowledge gaps quickly. AI chatbots, in particular, offer personalized tutoring and support, helping students with one-on-one assistance tailored to their needs.

At the same time, AI can:

- Automate grading of quizzes and assignments, freeing teachers to focus on mentoring

- Generate practice exercises tailored to weak spots

- Provide instant feedback that encourages learners to reflect and improve

AI chatbots can also supplement or even replace a traditional course, making education more accessible and democratizing personalized learning experiences.

Picture this: a student uses an AI tutor to identify tricky topics, practices with custom quizzes, and gets immediate tips, all without repeating the same drills blindly. AI is now being integrated into many types of classes to enhance learning.

Balancing Assistance and Academic Integrity

The rise of AI also stirs worries about plagiarism and academic dishonesty: is AI just a cheating tool? Many students use AI to complete assignments and homework, sometimes generating entire papers or copying the output word for word. Teachers are concerned, and schools are adapting their policies in response. AI-generated material and content from internet sources can blur the line between genuine research and plagiarism, making it harder to detect when students pass off generated content as their own work. Because generative AI has made cheating easier, educators are rethinking how assignments are taught and assessed and developing new approaches to uphold academic integrity.

To keep AI use ethical:

- Educators need clear guidelines defining appropriate AI support, including what is considered cheating and the importance of students producing their own work

- Tools that track and attribute AI-generated content help maintain transparency, especially when a student’s work has been flagged as possibly AI-written

- Encouraging students to use AI as an aid, not a shortcut, builds stronger skills over time and supports the development of original work

For example, AI writing assistants like Grammarly are meant to help with grammar and style, but using AI to write an entire paper is considered cheating. Detecting when a student has had AI write every word of an assignment is difficult, which highlights the need for new strategies to maintain academic integrity. While AI can support research and improve grammar, students must be taught to use these tools responsibly and to ensure their assignments reflect their own understanding and effort.

Leveraging AI as a Collaborative Partner

Successful AI integration means viewing it as a co-pilot—not a crutch.

Educators and institutions can:

- Embed AI-powered platforms that boost engagement without replacing critical thinking

- Offer workshops on responsible AI use to set expectations early

- Use AI-generated insights to tailor teaching strategies in real time

This mix helps students stay curious and accountable while tapping into AI’s power.

In 2025, the key is empowering learners with AI tools that amplify effort and creativity—without letting convenience turn into complacency.

“AI should inspire your best work, not do it for you.”

“When AI helps learn smarter, not lazier, education wins.”

“Think of AI as the ultimate study buddy—not the ghostwriter.”

The immediate takeaway: use AI tools that personalize and streamline learning, but insist on human oversight and active participation to keep education honest and effective.

Have you used AI in your classroom or studies? Share your story about how AI has impacted your educational experience—your personal perspective can help others understand real-world applications.

Innovative AI Tools Transforming Creativity While Respecting Originality

AI-powered tools are reshaping creative work at an unprecedented pace in 2025. From writing aids that help structure ideas to design assistants and music generators, these applications are boosting productivity while sparking inspiration.

Leading AI Creative Tools in Action

Here are five standout AI applications changing creative workflows today:

- Writing aids that suggest content improvements and speed up editing

- Design assistants automating repetitive graphic tasks and generating fresh concepts

- Music generators creating royalty-free tracks tailored to mood and style

- Video editing bots that automate routine cuts and visual effects

- Idea brainstormers using language models to spark innovation

Each tool specializes in reducing grunt work so creators can focus on vision and storytelling.

Preserving Creative Ownership Amid Automation

It’s easy to ask: When does AI cross the line from helper to creative overrider? Balancing AI’s role with artistic control means:

- Retaining authorship credit and transparency about AI’s contribution

- Using AI as a collaborator rather than a creator who replaces human input

- Recognizing AI-generated outputs as starting points—not finished masterpieces

In practice, this might look like an AI-assisted draft that a creator refines, or design elements generated by AI integrated into a human-crafted layout.

Ethical Use of AI-Generated Content

Professional use of AI content raises ethical flags:

- Attribution: Being clear which parts were AI-generated builds trust

- Originality: Ensuring AI doesn’t unintentionally plagiarize existing works

- Quality control: Maintaining a unique voice despite AI automation

AI tools should expand creative freedom without diluting authenticity or infringing on intellectual property.

Collaboration, Not Replacement

Think of AI tools as your creative sidekick, not a substitute. They speed up the tedious, technical steps and open new creative pathways.

Picture a graphic designer tweaking AI-suggested layouts rather than starting from scratch—which saves hours while allowing personal touches.

Takeaways for Creatives and Businesses

- Use AI to streamline workflows but always apply your unique perspective

- Maintain clear authorship and ethical transparency with AI-generated work

- Treat AI as a collaborator that augments, not replaces, your creative spark

For a deeper dive, check out “5 Cutting-edge AI Tools Transforming Creativity Without Crossing Lines”—it breaks down the best tools and ethical practices shaping creativity in 2025.

AI is unlocking new creative frontiers—but originality and responsibility remain the artists’ true north.

Strategic Outlook: Balancing Rapid AI Innovation with Ethical Fair Play

AI innovation isn’t slowing down—2025 is all about harnessing speed without losing sight of ethics and accountability. For startups and SMBs, this balance is critical to building trust and long-term success.

Trends Shaping AI Ethics and Governance

Watch for three powerful currents reshaping AI adoption:

- Regulatory frameworks like the EU’s Artificial Intelligence Act are setting clear rules on transparency and human oversight.

- Bias mitigation strategies are advancing, focusing on diverse datasets and fairness-aware algorithms to prevent discrimination.

- Hybrid human-AI models are becoming the norm, blending machine efficiency with human judgment for responsible decision-making.

These trends emphasize not just “can AI do this?” but “should AI do this—and how?”

Frameworks to Integrate AI Responsibly

To adopt AI ethically while moving fast, businesses can:

- Prioritize transparency in AI outputs to build user confidence.

- Establish accountability loops that ensure errors are caught and corrected.

- Implement ongoing bias audits to keep models fair and inclusive.

- Maintain human-in-the-loop systems, especially in sensitive areas like finance and healthcare.

Following frameworks such as the Council of Europe’s Framework Convention on AI can help keep your approach aligned with global best practices.

Lead with Curiosity, Accountability, and Customer Focus

Startups and SMBs have a unique edge: agility. Use it to experiment boldly but with an ethical compass. This means:

- Asking tough questions before automating critical tasks

- Documenting and owning AI-driven decisions

- Putting customer trust at the heart of every AI initiative

Picture a lean team deploying an AI tool that doubles content output while running weekly checks to avoid plagiarism and bias—a practical way to play fair and win fast.

Key Takeaways You Can Use Today

- Treat AI like a teammate, not a magician. Combine its speed with human oversight to catch mistakes early.

- Keep your AI “black box” open with clear explanations for users and stakeholders.

- Invest in simple bias-detection tools to safeguard fairness without slowing down delivery.

AI’s rapid pace calls for rapid responsibility. When you lead with transparency, fairness, and accountability, AI stops feeling like cheating and starts feeling like a trusted partner.

Those who balance curiosity with ethics today will be tomorrow’s AI pioneers—and that’s a future worth building.

Conclusion

AI is reshaping how you work, create, and innovate—unlocking tremendous productivity gains without sacrificing your unique voice or ethical standards. When used thoughtfully, AI becomes a powerful partner that frees you from routine tasks, sharpens your decision-making, and opens new doors for growth.

To harness AI’s potential while staying on the right side of integrity, keep these essentials front and center:

- Use AI as a tool to amplify your skills, not replace them—always add your perspective and judgment.

- Maintain transparency by disclosing AI involvement where relevant to build trust with clients and collaborators.

- Embrace continuous learning and reskilling to keep pace with evolving AI-driven workflows.

- Implement clear guidelines and human oversight to prevent over-reliance and ensure accountability.

- Prioritize privacy and fairness by adopting privacy-preserving technologies and bias mitigation practices.

Start applying these principles today by reviewing your current AI tools and workflows to identify where you can add more human insight or improve transparency.

Train your team on ethical AI use and draft a simple policy that balances innovation with accountability.

Explore affordable, user-friendly AI solutions that fit your business needs without overwhelming complexity.

The way forward is clear: by combining AI’s speed with your creativity and ethical compass, you’ll unlock new levels of success while staying true to your values.

“Smart AI isn’t about shortcuts—it’s your creativity amplified with integrity.”

Charge boldly into 2025 with AI as your trusted partner, and watch how much further you can go.