The Ethics of AI in Everyday Life: A 2025 Comprehensive Overview

Key Takeaways

Ethical AI isn’t just a buzzword—it’s the foundation for building trustworthy, fair, and sustainable AI solutions that power real innovation in 2025. Whether you’re developing AI for healthcare, finance, or everyday tools, these key insights help you navigate ethical challenges and turn responsibility into a competitive edge. Business leaders and private companies play a crucial role in setting and upholding ethical standards for AI, shaping the direction of responsible innovation.

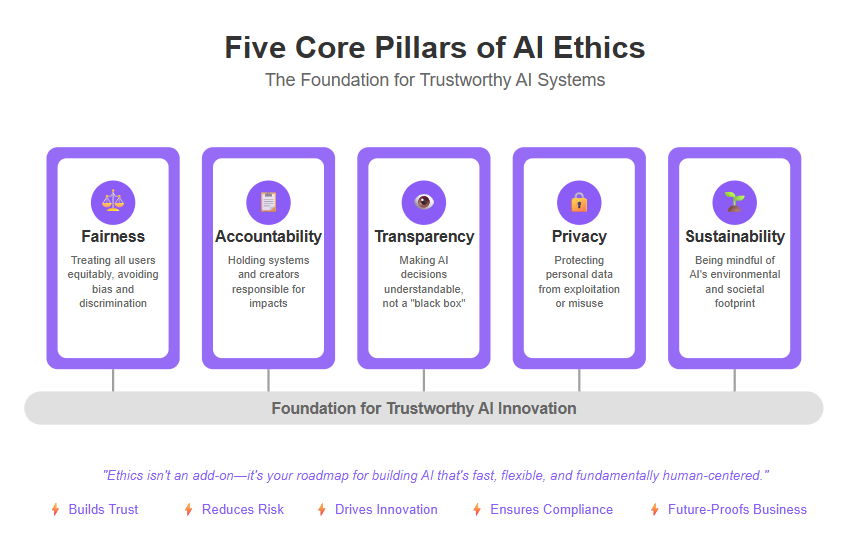

- **Anchor AI development in five core ethics pillars:** fairness, accountability, transparency, privacy, and sustainability drive trustworthy AI that respects users and society.

- Detect and mitigate algorithmic bias by using diverse data sets, inclusive teams, and ongoing audits to prevent AI from amplifying social inequalities.

- Prioritize user data privacy and autonomy through transparent disclosures, informed consent, and minimal data collection in line with evolving laws like GDPR and the EU AI Act.

- Combat the “black box” problem with explainable AI techniques, human-in-the-loop oversight, and regular auditing to maintain transparency and accountability.

- Integrate environmental ethics by choosing energy-efficient AI models and supporting carbon-neutral infrastructure to reduce AI’s substantial carbon footprint.

- Customize ethical frameworks by sector—from high-risk healthcare applications to youth platforms—balancing innovation with safety, privacy, and fairness specific to each context.

- Navigate global AI regulations proactively by understanding and adapting to regulatory frameworks that shape ethical AI practices, turning compliance into a strategic asset that builds customer trust and accelerates market access.

- Foster an ethical AI culture by educating teams, promoting ownership, and embedding continuous oversight. Business leaders and private companies are key actors in promoting and implementing ethical AI, so that AI products deliver value while doing right.

Ethics isn’t just a checkbox—it’s your roadmap for building AI that’s fast, flexible, and fundamentally human-centered. Dive deeper to lead AI innovation responsibly and confidently. In AI ethics, stakeholders must collaborate to examine how social, economic, and political issues intersect with AI, ensuring a holistic approach to responsible development.

Introduction

What happens when the AI tools you use every day make decisions you can’t see or fully understand?

As AI becomes a part of hiring, healthcare, marketing, and even digital spaces for kids, ethical questions aren’t just academic; they’re urgent business challenges. Startups and SMBs especially face the tightrope walk of innovating fast while navigating risks around fairness, privacy, and transparency. With no wide-scale governing body in place, many companies have adopted their own AI ethics policies or AI codes of conduct to address these challenges proactively. The stakes are societal as well: by some estimates, AI and automation could displace between 400 and 800 million jobs globally by 2030, underscoring the need for ethical frameworks to mitigate the impact.

Understanding and applying core principles like fairness, accountability, and privacy will not only protect your users but also build trust that powers lasting success. Establishing ethical guidelines is essential for responsible AI use, ensuring that technological advancement aligns with societal values and minimizes risks.

You’ll discover how AI ethics touches:

- Detecting and mitigating algorithmic bias that distorts outcomes

- Protecting personal data in increasingly AI-driven experiences

- Demystifying AI’s “black box” decisions with transparency and human oversight

- Balancing AI’s innovation with its environmental footprint

- Tackling sector-specific challenges—from healthcare to youth platforms

- Navigating shifting regulations that affect development and deployment

- Safeguarding the human experience and protecting the unique qualities of human beings as we interact with and create AI systems

This overview arms you with practical insights and clear frameworks to responsibly build and use AI tools that align with your values and customers’ expectations.

Ethics isn’t a hurdle—it’s a strategic advantage in a rapidly evolving landscape where trust can make or break your product.

Next, we’ll break down the ethical foundations every AI-powered business should know, setting the stage for deeper looks into how to spot and solve real-world risks in your AI workflows.

Understanding Artificial Intelligence: Foundations and Context

Artificial intelligence (AI) is transforming the way we live and work by enabling machines to perform tasks that once required human intelligence—like learning, reasoning, and making decisions. At its core, AI is built on the foundations of computer science, mathematics, and engineering, with the ambitious goal of creating systems that can mimic or even surpass certain aspects of human thinking and behavior.

Understanding the context in which AI operates is essential for navigating its ethical implications. As AI technologies become more integrated into daily life, the decisions made by these systems can have far-reaching effects on individuals and society. This is where AI ethics comes into play: it’s the set of moral principles that guide the design, development, and deployment of artificial intelligence. These principles help ensure that AI systems are not only powerful and efficient but also aligned with human values and societal norms. Ethical review boards and committees are crucial in assessing and mitigating potential risks associated with AI technologies, ensuring that their deployment aligns with these principles. The discussion around AI ethics has progressed from being centered around academic research and non-profit organizations to include big tech companies, reflecting its growing importance in the corporate world.

The development of AI is not just a technical challenge—it’s a moral one. Every step, from data collection to algorithm design and deployment, involves choices that can impact fairness, privacy, and accountability. By grounding AI in strong ethical foundations, we can harness its potential while minimizing harm and building trust in the technology that increasingly shapes our world.

Understanding Ethical Foundations in AI Integration

AI is shaping daily life faster than ever, making ethical foundations critical to how we build and use these systems. Most of the AI we interact with today is considered weak AI—narrow systems designed for specific tasks like facial recognition or virtual assistants—distinct from more advanced forms that aim to replicate human-level intelligence.

Core Ethical Principles to Guide AI

At the core, AI ethics boil down to five pillars:

- Fairness: Treating all users and groups equitably, avoiding bias, and actively working to address biases in AI systems. This includes ensuring that services provided by AI are accessible and unbiased, regardless of age, gender, race, or other characteristics.

- Accountability: Holding systems and creators responsible for impacts, with regulatory bodies playing a key role in ensuring responsible AI

- Transparency: Making AI decisions understandable, not a “black box”

- Privacy: Protecting personal data from exploitation or misuse

- Sustainability: Being mindful of AI’s environmental and societal footprint

These principles aren’t just buzzwords—they shape how AI can be a trusted, responsible partner in your work and life.

Why Ethics Matter More Than Ever in 2025

Picture this: AI algorithms now influence hiring, healthcare, and even youth digital spaces. Without ethical guardrails, they can amplify existing inequalities, compromise privacy, and erode trust. For startups and SMBs especially, this means:

- Navigating complex ethical risks while innovating fast

- Facing increasing regulatory scrutiny (the EU AI Act, evolving US laws), including strict data protection requirements

- Balancing automation benefits with human-centered values

Ethics has moved from a theoretical ideal to a practical necessity for staying competitive and responsible.

Common Ethical Dilemmas in AI Use

Everyday AI faces tough calls:

- When is AI’s autonomy too much?

- How do you prevent biased or unfair outcomes?

- How do you balance user convenience with data privacy and control?

- How do you address the risk of discriminatory outcomes from algorithmic decision making in areas like recruitment, lending, and criminal justice?

These questions aren’t hypothetical—they’re what real teams are wrestling with right now.

Shared Responsibility: Everyone Plays a Role

The shift to ethical AI isn’t just on developers. Businesses and users alike share accountability. It’s about:

- Developers designing inclusive, transparent models

- Businesses enforcing ethical policies and partnerships

- Users staying informed and demanding fairness

The AI community also plays a crucial role by fostering diversity and inclusiveness, which helps improve model fairness and reduce bias.

This collaborative mindset powers AI that’s not just smart, but also trustworthy and fair.

Setting the Stage for Deeper Dives

Coming up, we’ll explore:

- How algorithmic bias creeps in and how to fight it

- Privacy frameworks protecting your data in AI-powered apps

- Ways to enhance transparency, accountability, and sustainability

- Ethical challenges unique to healthcare, youth platforms, finance, and more

- Ethical assessments of AI projects and their societal impact

Think of this section as your navigation toolkit for mastering AI ethics in your daily workflow and strategic planning.

Quotable insights:

- “Ethical AI is the foundation that turns smart tech into trusted tech.”

- “Fairness, transparency, and privacy aren’t optional features—they’re the must-haves of AI in 2025.”

- “Everyone—builders, businesses, users—owns the responsibility for ethical AI.”

Imagine your AI-powered tool as a team player, not a wildcard. That’s the promise of embedding these ethics from day one.

Ethics isn’t an add-on. It’s your fast lane to innovation that lasts and earns trust along the way.

Algorithmic Bias and Ensuring Fair AI Outcomes

The Nature of Algorithmic Bias

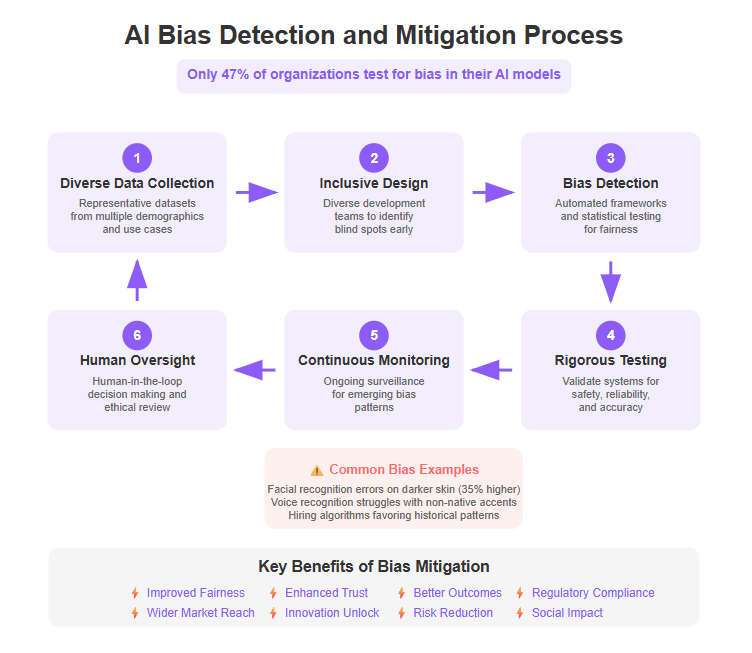

Algorithmic bias often sneaks in through unrepresentative training data, flawed assumptions, or inherited systemic inequalities. Bias can also arise when AI systems learn from historical data, which may contain and perpetuate existing prejudices or disparities. Despite the critical nature of this issue, companies often fail to test for bias in their AI models, with only 47% of organizations conducting such tests.

Imagine a facial recognition app that’s trained mostly on lighter-skinned faces—it’s more likely to misidentify people with darker skin tones. Similarly, voice recognition software has historically struggled with accents or dialects outside its main dataset.

These real-world examples are more than glitches—they highlight how biased AI can amplify social inequities, affecting hiring, lending, law enforcement, and more.

Without addressing bias, AI risks becoming a mirror that reflects and magnifies society’s unfairness rather than fixing it.

Strategies for Bias Detection and Mitigation

Addressing bias means starting with the right tools and processes:

- Bias detection frameworks help evaluate fairness by identifying patterns that discriminate against groups.

- Diverse datasets fuel AI models that understand a broader range of human experiences.

- Inclusive design involves varied teams to spot blind spots early in development.

- Rigorous testing is crucial to validate AI systems for safety, reliability, and accuracy before deployment, especially in sensitive applications.

Still, no system can guarantee 100% bias elimination. Bias can hide in unexpected places, making continuous monitoring essential.

And while tools improve, combining automated checks with human judgment creates a more balanced approach.
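To make the idea of a bias detection check concrete, here is a minimal sketch that compares how often each group receives the favourable outcome. The group names, the sample decisions, and the 0.8 review threshold (loosely based on the "four-fifths" rule of thumb) are illustrative assumptions, not a standard your product must use.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two illustrative groups.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumed cut-off, not a legal or universal standard
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own; it is a signal that a human should look more closely at the data and the model.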

Linking to Sub-Page: The Role of Bias Detection in Creating Fair AI Solutions

For those wanting practical, technical strategies and hands-on tools, our deep dive on bias detection breaks down how you can build fairer AI systems step-by-step.

Bias in AI is like an echo chamber—it reflects what’s fed into it. By actively detecting bias and diversifying data, you help quiet harmful echoes and instead shape AI that treats everyone fairly.

Remember: Diverse data and inclusive teams don’t just improve fairness—they unlock innovation and trust.

Picture this: a startup deploying a hiring tool that actually widens the talent pool instead of shrinking it—isn’t that the future worth building?

Privacy, Data Protection, and User Autonomy in Everyday AI

The Privacy Risks of AI-Driven Personalization

AI collects vast amounts of personal data from everything you do online—smartphones, apps, smart home devices, and more. Increasingly, this data is processed by large language models, which use it to generate and tailor personalized content and responses. This constant data harvest fuels personalized experiences but brings a catch: it creates a fragile line between convenience and surveillance. Mass surveillance practices pose significant concerns for privacy rights, as they can lead to intrusive monitoring and misuse of personal data.

Picture this: your AI assistant knows your habits so well it predicts your needs—but it also builds detailed profiles that can be exploited or shared without your clear consent.

Common issues include:

- Algorithmic profiling that shapes what you see and who you interact with

- Data exploitation for targeted ads or worse, manipulation

- Erosion of user autonomy as decisions subtly shift without transparency

One widely cited study found that commercial facial analysis systems misclassified darker-skinned women up to roughly 35% of the time, compared with under 1% for lighter-skinned men, showing how data gaps can harm users on both privacy and fairness.

Ethical Data Handling and Regulatory Context

Handling data ethically means being upfront about what’s collected, how it’s used, and giving users control.

Here are best practices for businesses and developers:

- Transparent data use disclosures that are clear and accessible

- Obtaining informed consent before collecting or processing personal data

- Minimizing data exposure by collecting only what’s necessary

Regulatory landscapes are evolving fast. The EU’s GDPR has set the gold standard for privacy rights, influencing new U.S. legislation focusing on informed consent and data minimization. Regulatory frameworks play a crucial role in shaping ethical data practices by establishing guidelines and standards that ensure transparency, accountability, and compliance with data protection regulations.

AI developers increasingly adopt privacy by design, embedding protections into the product architecture rather than bolting them on afterward.

Think of it like designing a home with built-in locks and window shields instead of just adding alarms after a break-in.
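One way to picture privacy by design in code is a small gate that sits in front of every AI feature and enforces both consent and data minimization. The sketch below is illustrative only: the purpose names, the field allow-list, and the `minimize` helper are hypothetical and not part of any real library.

```python
# Hypothetical allow-list: the only fields each purpose actually needs.
ALLOWED_FIELDS = {
    "order_recommendations": {"user_id", "purchase_history"},
    "support_chat": {"user_id", "message"},
}


def minimize(record, purpose, consents):
    """Return only the fields needed for `purpose`, or raise if consent is missing."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"unknown purpose: {purpose}")
    if purpose not in consents:
        raise PermissionError(f"no consent recorded for: {purpose}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# Illustrative user profile; location and birthdate are not needed for recommendations.
profile = {
    "user_id": "u-123",
    "purchase_history": ["kettle", "tea"],
    "location": "51.5,-0.1",
    "birthdate": "1990-01-01",
}

shared = minimize(profile, "order_recommendations", consents={"order_recommendations"})
print(shared)
```

Because the filter runs before any data reaches the model, fields like location never leave the profile unless a purpose explicitly needs them and the user has agreed.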

Linking to Sub-Page: Unlocking Privacy: Protecting Personal Data in AI Interactions

For deeper dives and practical tactics, explore how organizations balance powerful AI tech with protecting your rights.

Privacy in AI today is about managing the tension between powerful personalization and respecting your data autonomy.

By demanding transparency, insisting on control, and following emerging laws like GDPR, you can protect your privacy without giving up the benefits AI offers.

Remember: Ethical AI treats your data as a trust, not a resource to mine.

Enhancing Transparency and Accountability in AI Systems

The Challenge of “Black Box” AI

Many AI models operate as “black boxes,” producing outcomes without clear explanations. This lack of transparency makes it tough to trust their decisions, especially when stakes are high.

Opaque AI affects sectors like healthcare, finance, and public safety where decisions impact lives and livelihoods directly. Imagine an AI denying a loan without a clear reason or a medical algorithm missing critical symptoms — the consequences can be serious.

Transparency isn’t just nice to have; it’s essential for users and developers to verify fairness and accuracy.

Building Transparent AI: Tools and Techniques

To pierce the black box, developers use various approaches:

- Explainable AI (XAI) methods that clarify how decisions are made

- Auditing frameworks to regularly evaluate AI performance and biases

- Open-source initiatives that promote community-driven review and improvements

Additionally, human-in-the-loop models keep people involved at key decision points. This blend ensures accountability and prevents blind trust in automation.

These tools help teams catch errors early, maintain ethical standards throughout an AI’s lifecycle, and mitigate risks by identifying and addressing potential harm, biases, or ethical issues in AI systems.
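For a sense of what an explanation can look like, consider the simplest case: a linear scoring model, where each feature’s contribution is just its weight times its value. The weights, threshold, and applicant below are made up for illustration; more complex models generally need dedicated explainability techniques rather than this direct breakdown.

```python
# Hypothetical weights for an illustrative linear credit score; not a real model.
WEIGHTS = {"income_thousands": 0.04, "late_payments": -0.9, "years_employed": 0.15}
BIAS = -1.0
APPROVAL_THRESHOLD = 0.0


def explain(applicant):
    """Return (score, contributions sorted from most negative to most positive)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return score, ranked


applicant = {"income_thousands": 40, "late_payments": 3, "years_employed": 2}
score, ranked = explain(applicant)
decision = "approved" if score >= APPROVAL_THRESHOLD else "denied"

print(decision, round(score, 2))
for name, value in ranked:
    # The first line printed is the factor that hurt the score the most.
    print(f"{name}: {value:+.2f}")
```

Instead of a bare "denied", the applicant and any auditor can see which factor pulled the score down the most, which is the kind of clear reason a loan decision should come with.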

Human Oversight as a Pillar of Responsible AI

Automated doesn’t mean autonomous. Human oversight is critical to spot bias, identify unintended results, and intervene when outcomes don’t align with ethical norms. In situations where empathy and judgment are essential, human interaction remains irreplaceable, ensuring that decisions reflect genuine understanding and care.

Balancing AI’s speed with human judgment contributes to safer, more equitable systems — especially important in unpredictable or sensitive contexts.

Picture a real-time content moderation AI: a human reviewer can flag nuanced issues that a machine might miss, protecting users while benefiting from AI scale.

- Oversight fosters accountability by keeping humans “in charge”

- It creates a feedback loop for continuous improvement
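The content moderation scenario above can be sketched as a simple routing rule: the system acts on its own only when it is confident and the action is low-risk, and everything else goes to a person. The `Prediction` class, the 0.95 threshold, and the policy of always reviewing removals are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str         # e.g. "allow" or "remove"
    confidence: float  # model's own confidence in [0, 1]


# Assumed threshold; in practice it would be tuned against reviewed samples.
AUTO_THRESHOLD = 0.95


def route(prediction):
    """Auto-apply only confident 'allow' calls; escalate everything else."""
    if prediction.label == "allow" and prediction.confidence >= AUTO_THRESHOLD:
        return "auto"
    return "human_review"


queue = [
    Prediction("post-1", "allow", 0.99),
    Prediction("post-2", "remove", 0.97),
    Prediction("post-3", "allow", 0.62),
]
routes = {p.item_id: route(p) for p in queue}
print(routes)
```

Reviewer decisions on the escalated items can then be fed back into evaluation, which is where the feedback loop comes from.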

Linking to Sub-Pages and Further Reading

For a deep dive into transparent AI techniques, check out How Transparent AI Systems Build Trust and Accountability.

Wondering how humans reshape ethics in AI workflows? Explore Why Human Oversight Is Revolutionizing Everyday AI Ethics.

Both offer practical frameworks and case studies that bring these concepts to life.

AI transparency isn’t an abstract ideal — it’s a practical necessity in 2025. By combining explainable methods, auditing, and human supervision, you create trustworthy AI that meets real-world demands and protects users. Today’s best AI strategies embrace openness to unlock innovation and accountability hand in hand.

Environmental Ethics: The Hidden Impact of AI Technologies

Energy Consumption and Resource Use in AI Development

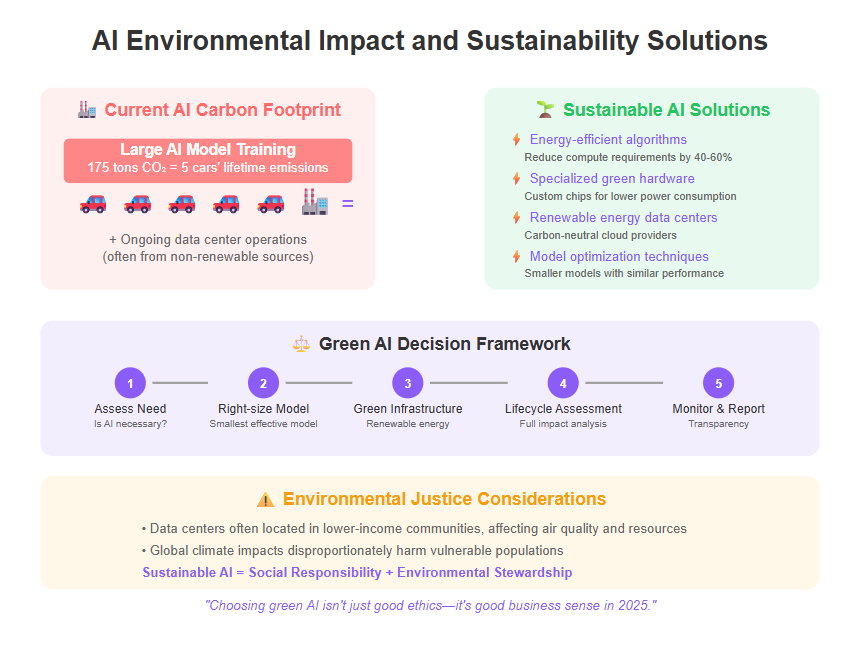

By some estimates, training a large AI model can generate up to 175 tons of CO₂, equivalent to the lifetime emissions of five average cars. Maintaining the data centers that power AI systems also drives substantial energy use, often from nonrenewable sources. This raises pressing questions about the ethical cost of AI’s resource hunger, especially as AI becomes embedded in daily life and business operations. Government use of AI for mass surveillance can also lead to abuses of power, further complicating the ethical landscape of AI deployment. Responsible resource allocation is crucial in AI projects: distributing resources effectively helps minimize harm and address risks such as bias and environmental impact. Should startups and SMBs scale AI solutions without considering environmental footprints?

Ask yourself: Is the AI model’s benefit worth its carbon impact?

Sustainable AI Practices and Design Innovations

Emerging trends in green AI are turning this around. These include:

- Energy-efficient algorithms that reduce compute needs

- Specialized hardware designed to cut power consumption

- Cloud providers committing to carbon-neutral or renewable energy sourcing

- Development of new AI tools specifically designed for sustainability and ethical implementation

Ethical frameworks now encourage prioritizing projects that balance innovation with environmental responsibility. For example, choosing a smaller, well-optimized model over a massive, energy-guzzling one can deliver similar outcomes sustainably.

Innovators are also embracing life-cycle assessments to quantify AI’s full environmental impact—from development through deployment.
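A rough first step toward that kind of assessment is a back-of-the-envelope estimate of a training run’s operational emissions: energy used, multiplied by the carbon intensity of the grid. Every input in the sketch below (GPU counts, power draw, data center overhead, grid intensity) is a made-up illustration value, and the estimate ignores hardware manufacturing and inference, so treat it as one slice of the picture.

```python
def training_emissions_kg(gpu_count, avg_power_watts, hours, pue, grid_kg_co2_per_kwh):
    """Estimate operational CO2 (kg) for a training run.

    energy (kWh) = GPUs x average power (kW) x hours x PUE
    emissions    = energy x grid carbon intensity (kg CO2 per kWh)

    PUE (power usage effectiveness) scales up for cooling and other overhead.
    """
    energy_kwh = gpu_count * (avg_power_watts / 1000) * hours * pue
    return energy_kwh * grid_kg_co2_per_kwh


# All inputs below are hypothetical, not measurements of any real model.
large = training_emissions_kg(64, 300, 240, 1.5, 0.4)
small = training_emissions_kg(8, 300, 48, 1.5, 0.4)
print(round(large), round(small))
```

Putting two candidate setups side by side like this makes the trade-off between a massive model and a smaller, well-optimized one easier to discuss in concrete terms.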

Considering the Broader Impact: From Local to Global

AI’s environmental footprint links closely with social justice issues:

- Resource-intensive AI disproportionately affects communities near data centers, which are often located in lower-income areas.

- Global disparities mean countries contributing least to carbon emissions face the worst climate effects, increasing the harm caused to vulnerable communities by the environmental impact of AI.

Transparent reporting on energy use and regulatory pressure are essential levers to drive sustainable AI adoption on an industry-wide scale.

Picture this: a startup choosing energy-conscious AI isn’t just saving costs—they’re reducing harm to vulnerable communities globally.

This perspective reframes AI ethics as a holistic responsibility extending beyond code to climate and community welfare.

AI’s environmental impact is a critical piece of ethical AI use that startups and SMBs can’t afford to overlook.

Adopting energy-efficient design, transparent reporting, and mindful project selection enables you to build smarter solutions that respect our planet and people.

“Choosing green AI isn’t just good ethics—it’s good business sense in 2025.”

“Sustainable AI won’t slow innovation; it’ll power a better future for all.”

Decision Making and AI: Navigating Automated Choices

AI systems are increasingly tasked with making decisions that affect everything from job applications to criminal justice outcomes. While the use of AI in decision making can bring speed and efficiency, it also raises important ethical concerns. Automated choices made by AI can sometimes lead to unintended consequences, such as reinforcing existing biases or making errors that impact real people’s lives. However, AI is also helping create 'hybrid jobs' where it performs technical tasks, potentially freeing workers for more proactive responsibilities, which can lead to a more dynamic and innovative workforce.

For example, AI recruiting tools have come under scrutiny for perpetuating historical biases, unintentionally filtering out qualified candidates from underrepresented groups. In the realm of criminal justice, AI-powered risk assessment tools are used to predict the likelihood of reoffending, but these systems have faced criticism for amplifying racial bias and making decisions that may not always be fair or transparent. These real-life examples highlight the need for careful oversight and ethical consideration in the use of AI for decision making.

As AI systems become more embedded in critical areas of society, it’s essential to address these ethical concerns head-on. This means not only recognizing the potential for bias and discrimination but also actively working to prevent unintended consequences and ensure that the use of AI aligns with our values and expectations for fairness and justice.

How AI Systems Make Decisions

AI systems rely on complex algorithms and massive amounts of data to make decisions. These algorithms are designed to learn from patterns in the data, improving their performance over time. However, the quality of an AI model’s decision making is only as good as the data it’s trained on. If the training data is incomplete or biased, the resulting AI model can inherit and even amplify those flaws.

Take health care as an example: if an AI model is trained primarily on data from one demographic group, it may not perform as well for patients from other backgrounds, leading to disparities in diagnosis or treatment recommendations. This underscores the importance of using diverse, representative training data and regularly evaluating AI models for fairness and accuracy.
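Evaluating a model for fairness can start with something as simple as breaking accuracy down by group instead of reporting one overall number. In this minimal sketch, the group names and evaluation results are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(examples):
    """Return accuracy per group from (group, predicted, actual) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in examples:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation results for two illustrative patient groups.
results = (
    [("group_a", 1, 1)] * 9 + [("group_a", 0, 1)] * 1
    + [("group_b", 1, 1)] * 6 + [("group_b", 0, 1)] * 4
)
scores = accuracy_by_group(results)
gap = max(scores.values()) - min(scores.values())
print(scores, round(gap, 2))
```

An overall accuracy of 75% would hide the fact that one group is served far worse than the other, which is exactly the disparity that representative training data is meant to prevent.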

To build trustworthy AI systems, developers must prioritize transparency and explainability in their decision-making processes. This means making it clear how and why an AI system arrives at a particular outcome, so that users and stakeholders can understand, trust, and challenge those decisions when necessary.

Ethical Implications of Automated Decision-Making

The ethical implications of automated decision-making are profound. When AI systems make choices that affect human life—such as who gets a loan, how resources are allocated, or how autonomous vehicles respond in emergencies—the stakes are high. These decisions can have lasting impacts on individuals and communities, raising questions about fairness, accountability, and respect for human rights.

For instance, autonomous vehicles must be programmed to make split-second decisions that could prioritize the safety of one person over another, sparking debates about the value of human life and the ethical responsibilities of those who design these systems. Big tech companies, as the primary developers and deployers of AI technologies, have a duty to ensure that their AI systems operate transparently, treat users fairly, and uphold fundamental human rights.

Addressing these ethical concerns requires a commitment to ongoing evaluation, stakeholder engagement, and the development of clear guidelines that prioritize the well-being of all individuals affected by AI-driven decisions.

Balancing Human Judgment and Machine Intelligence

While AI systems excel at processing data and identifying patterns, they lack the nuanced understanding and empathy that human judgment brings to decision making. Striking the right balance between machine intelligence and human oversight is crucial for responsible AI development.

Human judgment provides essential context, ethical reasoning, and the ability to question or override automated decisions when necessary. However, it’s important to recognize that human decision-makers can also be influenced by their own biases and limitations. That’s why responsible AI development involves not only integrating ethical principles—such as transparency, fairness, and accountability—into AI systems, but also ensuring that human oversight is informed, vigilant, and open to continuous learning.

By combining the strengths of AI technologies with thoughtful human judgment, organizations can better anticipate potential risks, address ethical challenges, and create AI systems that serve the best interests of society. Ultimately, the goal is to develop and use AI in ways that reflect our shared values and promote positive outcomes for all.

Sector-Specific Ethical Challenges in AI Applications

Healthcare

AI is reshaping healthcare with diagnoses, personalized treatment, and patient data management, but ethical risks come into sharp focus here.

Under the EU’s AI Act, many medical AI systems are classified as high-risk, requiring strict compliance that impacts developers and providers alike.

Balancing rapid innovation with patient safety and privacy demands ongoing vigilance from tech teams and clinicians.

Key healthcare concerns include:

- Risks of algorithmic bias affecting diagnosis accuracy

- Protecting sensitive patient data under evolving regulations

- Ensuring transparent decision-making in AI-assisted treatments

Picture this: an AI tool flags a potential illness faster than a doctor. The excitement is real, but without clear accountability and safeguards, trust can quickly erode.

Youth Engagement and Digital Citizenship

AI’s reach into youth platforms raises urgent questions about privacy, autonomy, and digital literacy.

Young users often face opaque data collection and AI-driven content moderation without meaningful consent. This can shape their online experience in unseen ways.

Ethical frameworks for youth-focused AI should:

- Prioritize age-appropriate privacy protections

- Ensure transparent data practices and clear consent protocols

- Support digital literacy programs that empower young users

Imagine a teenager’s feed curated by AI—what messages shape their worldview, and who decides what’s appropriate? Ethical guardrails here protect not just data but development.

Other Critical Sectors (Brief Overview)

AI ethics stretch across diverse fields, each with unique challenges:

- Finance: bias in loan approvals, risk prediction fairness

- Education: personalization vs. student privacy and equity, plus the ethical implications of generative AI in content creation and assessment

- Public Safety: predictive policing risks and transparency

- Employment: automation’s impact on job security and fairness

Tech giants play a significant role in shaping sector-specific AI ethics, often influencing standards and regulations through their market dominance and self-regulation.

Tailored ethics guidelines and collaboration among stakeholders are essential to navigate these complexities effectively.

Adjusting AI responsibly across sectors ensures we’re not building tools that unintentionally harm vulnerable groups or deepen inequalities.

A clear takeaway: Ethical AI requires thoughtful customization by sector, balancing innovation with respect for human rights and societal impact.

Ethical AI isn’t one-size-fits-all—it demands sector-aware strategies that address distinct risks. From sensitive health data to youth privacy and workplace fairness, knowing where to focus guides better AI adoption.

Navigating the Regulatory Landscape of AI Ethics

Overview of Key International and Regional Frameworks

AI ethics regulation is rapidly evolving to keep pace with technology’s reach into daily life.

Three major frameworks are shaping the field in 2025:

- The Framework Convention on Artificial Intelligence promotes human rights and democratic values and guards against misinformation and discrimination. Adopted by the Council of Europe in 2024, it sets a global tone for AI ethics.

- The European Union’s AI Act enforces a risk-based approach, categorizing AI systems according to potential harm. High-risk applications—like healthcare and public safety—face strict transparency and human oversight requirements. The European Union is recognized as a leader in establishing and enforcing AI regulatory standards, proactively setting benchmarks for ethical AI use.

- In the United States, recent legislation targets AI-generated deepfakes (e.g., the “TAKE IT DOWN Act”) and expands AI innovation resources through bills like the “CREATE AI Act.” These balance creativity with accountability.

Picture this: A startup designing AI-powered medical software must already navigate the EU AI Act’s high-risk classification, meeting strict compliance to keep patients’ rights and safety front and center.

How Regulations Shape Ethical AI Practices for SMBs and Startups

For SMBs and startups, compliance is more than legal obligation—it’s a strategic asset.

Key ways regulations influence AI product development:

- Drive proactive ethical risk management, encouraging teams to integrate fairness, transparency, and privacy early in design phases.

- Foster trust with customers and partners by demonstrating adherence to current and anticipated standards.

- Create a marketplace advantage for those who engage early and deeply with evolving laws, avoiding costly last-minute pivots.

Startups often underestimate the complexity of cross-border AI laws. Investing time up front to understand frameworks can prevent headaches later—and even speed up time-to-market by smoothing regulatory approvals.

Emerging Trends and Anticipated Developments

AI regulation in 2025 is both converging and diverging globally, creating a nuanced landscape.

Look out for:

- Increased global alignment on baseline human rights protections paired with localized rules reflecting cultural and political differences.

- Growing pressure for transparent environmental reporting from AI providers, linking ethics with sustainability.

- Novel compliance challenges for SMBs working across regions, requiring flexible strategies that balance innovation with responsible behavior.

Think of it as navigating shifting terrain: startups must be ready to adjust their ethical practices as new laws emerge, and those who adapt fastest gain lasting credibility.

Understanding and engaging with this dynamic regulatory landscape is essential for anyone building or deploying AI today.

Early, thoughtful alignment with AI ethics laws isn’t just good practice—it’s your ticket to trust, competitive edge, and sustainable growth.

Practical Strategies to Foster Ethical AI Use at Home and Work

Promoting Ethical AI Adoption in Daily Life and Business

Adopting ethical AI starts with a few clear, practical moves anyone can make today.

Try these five steps to keep AI use fair, transparent, and responsible:

- Educate yourself and your team on AI’s ethical principles to recognize risks and benefits

- Commit to transparency by openly sharing when and how AI systems affect decisions

- Maintain continuous oversight—regularly review AI outputs for bias or errors

- Foster inclusive dialogue among diverse stakeholders to surface different perspectives

- Practice environmental mindfulness by choosing AI tools designed to minimize energy consumption

Picture this: your startup’s chatbot is not just fast but also respects user privacy and reduces carbon load by using an energy-efficient model.
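To make an energy comparison like this concrete, here is a minimal back-of-the-envelope sketch. It assumes you can measure or estimate average power draw and runtime for each candidate model. The function names, the PUE (data center overhead) factor, the grid carbon intensity, and every number in the example are illustrative placeholders, not measurements of any real model.

```python
def energy_kwh(avg_power_watts, hours, pue=1.0):
    """Estimate electricity use in kWh.

    avg_power_watts: average hardware power draw while the model runs
    hours: total runtime
    pue: power usage effectiveness, a multiplier for data center overhead
    """
    return avg_power_watts / 1000 * hours * pue


def emissions_kg(kwh, grid_g_co2_per_kwh):
    """Convert energy use to kg of CO2 using the local grid's carbon intensity."""
    return kwh * grid_g_co2_per_kwh / 1000


# Hypothetical inputs: two candidate chatbot models serving the same workload.
GRID_INTENSITY = 400  # g CO2 per kWh, placeholder value
large_model_kwh = energy_kwh(avg_power_watts=300, hours=100, pue=1.5)
small_model_kwh = energy_kwh(avg_power_watts=75, hours=100, pue=1.5)

for name, kwh in [("large", large_model_kwh), ("small", small_model_kwh)]:
    print(f"{name}: {kwh:.2f} kWh, {emissions_kg(kwh, GRID_INTENSITY):.2f} kg CO2")
```

Even a rough estimate like this gives teams a shared number to discuss when weighing model quality against energy use.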

Cultivating an Ethical Culture Around AI Deployment

Creating an ethical culture means building AI accountability into your daily routine and team mindset.

Focus on:

- Encouraging ownership: empower teams to flag issues and take responsibility for AI decisions

- Using auditing tools and frameworks to regularly assess AI’s social and environmental impact

- Involving stakeholders—from customers to developers—in ethical checks before rollout

Imagine quarterly ethical audits becoming as normal as sprint reviews, ensuring your AI products don’t just work but do right.
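One check that could sit inside such an audit is a selection-rate comparison across groups. The sketch below is an assumption about what an audit step might look like, not a prescribed method: it computes the share of favorable outcomes per group and flags the system when the lowest rate falls below 80% of the highest, a common rule of thumb known as the four-fifths rule. The function names, the threshold default, and the sample data are all hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    records: iterable of (group, selected) pairs, where `selected` is True
    when the AI system produced the favorable outcome for that person.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no one was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


def audit(records, threshold=0.8):
    """Summarize per-group rates and flag the system if the ratio is below threshold."""
    rates = selection_rates(records)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


# Hypothetical audit sample: 100 decisions per group.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
report = audit(sample)
print(report)
```

A flag here is a prompt for human investigation rather than proof of unfairness; the right metric and threshold depend on the sector and on applicable law.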

Related Guide: 5 Strategic Steps to Promote Ethical AI Usage at Home and Work

For a deep dive into actionable tactics, head to our dedicated guide that breaks down these strategies into easy-to-implement steps designed for busy SMBs and startups eager to lead responsibly.

Ethical AI is less about avoiding risk and more about embracing responsibility boldly and early—whether you’re a solopreneur or scaling fast.

“Ethics in AI isn’t a checkbox; it’s a culture you build one step at a time.”

“Transparency and ongoing oversight turn AI from a black box into a trusted team member.”

Taking concrete steps now builds trust, reduces costly missteps, and sets the stage for AI that’s not only smart but socially responsible.

The Top Ethical Dilemmas Facing Everyday AI in 2025

Identifying Critical Ethical Questions

Every AI tool you use today carries weighty ethical questions behind the scenes. The top dilemmas shaping AI’s impact in 2025 include:

- Algorithmic bias that can unfairly impact marginalized groups, such as facial recognition systems with higher error rates for people with darker skin tones

- Privacy trade-offs between personalization and intrusive data collection

- Accountability gaps when AI decisions are opaque or “black box” systems hide who’s responsible

- The spread of misinformation driven by AI-generated content and deepfakes

- Environmental costs, with the training of a single large model consuming hundreds of megawatt-hours of electricity or more

- Automation versus human jobs raising concerns over workforce displacement

- Complexities around consent, especially when users don’t fully understand AI’s data use

Picture this: your voice assistant misinterprets commands due to accent bias, or an AI in hiring tips the scale unfairly without human oversight. These aren’t just theoretical; they’re happening right now.

Navigating Trade-offs and Moral Ambiguities

Here’s the thing: definitive answers to these dilemmas rarely exist.

Ethical AI requires context-aware decision-making—what’s right in healthcare may differ in marketing or education.

The goal isn’t to halt innovation but to anticipate harms, build safeguards, and stay transparent.

That means businesses and startups should:

- Embed human oversight alongside automated systems

- Prioritize inclusive design to reduce bias

- Maintain clear user communication on data usage

Think of it like speed limits on a highway—not meant to stop you from driving fast, but to keep everyone safe.
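As one possible way to embed human oversight, the sketch below routes any high-stakes or low-confidence prediction to a human reviewer instead of acting on it automatically. It assumes your model exposes a confidence score; the `Decision` type, the `route_decision` function, and the 0.9 cutoff are hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str       # the model's prediction, or "pending" while awaiting review
    confidence: float  # model confidence between 0 and 1
    decided_by: str    # "model" or "human_review"


def route_decision(prediction, confidence, high_stakes, min_confidence=0.9):
    """Let the model decide only when the case is low-stakes and confident.

    Everything else is held as "pending" and handed to a human reviewer.
    """
    if high_stakes or confidence < min_confidence:
        return Decision("pending", confidence, "human_review")
    return Decision(prediction, confidence, "model")


# Hypothetical cases: a routine request and a hiring decision.
routine = route_decision("approve", confidence=0.97, high_stakes=False)
hiring = route_decision("reject", confidence=0.97, high_stakes=True)
print(routine.decided_by, hiring.decided_by)
```

Logging which path each decision took also creates the paper trail that later audits and user disclosures depend on.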

Related Guide: 7 Critical Ethical Dilemmas AI Poses in Daily Life

Curious for a deep dive? Our dedicated guide unpacks these dilemmas with concrete examples and practical steps.

Stay ahead of evolving challenges—because ethical AI isn’t just about fixing problems. It’s about designing a better future that respects people, privacy, and the planet.

Ethical dilemmas in AI touch everything from fairness to planetary health. Tackling these challenges head-on lets you build smarter, kinder products that users and regulators trust. Choosing ethics as a compass means you’re not just keeping up; you’re setting the pace for what AI can and should be in everyday life.

Conclusion

Ethical AI isn’t just an abstract ideal—it’s your competitive edge and trust-builder in today’s fast-moving digital world. By embedding fairness, transparency, privacy, and sustainability into your AI strategies, you’re shaping tools that don’t just work smart but work right. This approach fuels innovation that lasts, earning loyalty from users, partners, and regulators alike.

Here’s how to turn these ethics into action right now:

- Champion transparency by clearly communicating how your AI systems make decisions.

- Embed continuous oversight—regularly audit AI outcomes to catch bias and errors early.

- Prioritize inclusive design by involving diverse voices in development to unlock fairness and creativity.

- Maintain data respect through transparent, minimal data use and user consent practices.

- Opt for sustainable AI by choosing energy-efficient models and mindful resource management.

Taking these steps empowers you to lead responsibly and avoid costly missteps that could damage trust or invite regulatory scrutiny.

Start by assessing your current AI tools and workflows: where can you add clearer explanations, introduce bias checks, or refine data handling? Engage your team in open conversations about ethics and assign accountability to keep momentum alive. Consider adopting auditing tools or partnering with ethical AI experts to deepen your impact. Whichever route you take, write down clear ethical guidelines: they are the foundation for trustworthy and responsible AI systems.

Ethical AI isn’t a barrier to rapid innovation—it’s the fuel that propels it forward with integrity. When you treat AI as a trusted teammate, not a wildcard, you unlock solutions that are not only powerful but principled.

Remember: building AI that respects people, privacy, and the planet transforms challenges into opportunities—and sets you apart as a true innovator in 2025 and beyond.